Original URL: https://www.theregister.com/2007/12/23/data_management_tagger_bust/

New kids on the data management block

Keep it old skool

Posted in Channel, 23rd December 2007 10:16 GMT

In the age of Web 2.0, data is a strategic differentiator. Tim O’Reilly, considered the father of Web 2.0, has claimed “data is the Intel inside” of this revolution. Companies that control a unique or particularly desirable set of information drive more customers to their sites.

But the concept of data as a competitive differentiator is not new. So what - if anything - has changed, and what does it mean? I believe that, although Web 2.0 and XML are having a liberating effect on data, there is much they can - and should - borrow from the past.

A tenet of Web 2.0 is that you, the user, control your own data. How many of us now book travel online or execute our own stock trades? With Web 2.0, this concept goes a step further. The consumer expects to be a contributor to the data, not just a consumer - contributing blogs about their favorite travel destinations, creating mashups linking travel itineraries with weather and map information, uploading their favorite travel videos to YouTube.

Traditionally, data was strictly controlled by the business and by IT. Data was stored in proprietary DBMS formats, strictly controlled by a DBA on the operational side, and by a data architecture team on the design side. Business requirements for data were carefully documented in a logical data model and structural designs were built in a physical model that generated DDL for the DBA to implement as the gatekeeper of the DBMS.

In Web 2.0, XML is touted by many as the open alternative. Basic design principles of XML are to provide interoperability and accessibility of data. XML can be read anywhere: on multiple platforms and operating systems, as well as by human beings themselves.

Also inherent in the design of the XML technology stack is the ability to format the data visually, and there is a growing number of technologies and scripting languages emerging for this purpose. Part of the appeal of the web and one of the reasons for its explosion is the ability for users to see information in an easily understandable and visually appealing format. XML follows in the footsteps of HTML, the document mark-up system that allowed the user to create display tags for easy internet readability.

A core architectural capability that XML added was the ability to structure previously unstructured documents. Metadata tags were added to give semantic meaning to a document, and schemas allowed for structured data typing and validation. Searches could now move from being simply string-based text searches to structurally-based queries.
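The difference between the two kinds of search can be sketched in Python with the standard library's `xml.etree.ElementTree`. The document below, including its element names and values, is hypothetical and chosen only for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical document; element names and values are illustrative only.
DOC = """
<locations>
  <location>
    <name>Warehouse 32.90</name>
    <latitude>32.90</latitude>
    <acreage>12.5</acreage>
  </location>
  <location>
    <name>Depot</name>
    <latitude>41.10</latitude>
    <acreage>32.90</acreage>
  </location>
</locations>
"""


def text_search(doc: str, needle: str) -> int:
    """String-based search: counts every occurrence, whatever it means."""
    return doc.count(needle)


def names_at_latitude(doc: str, latitude: str) -> list[str]:
    """Structural query: only <location> elements whose <latitude> matches."""
    root = ET.fromstring(doc)
    return [
        loc.findtext("name")
        for loc in root.findall("location")
        if loc.findtext("latitude") == latitude
    ]


text_hits = text_search(DOC, "32.90")
structural_hits = names_at_latitude(DOC, "32.90")
```

The text search matches the string wherever it appears, including inside a name and an acreage value, while the structural query returns only the location whose latitude element carries that value.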

But is the tag structure that comes with XML enough to provide true data and metadata management? And is the structure provided even being used, or is the focus on the flashy visualization aspects? Many in the new school create an XML document without using schema validation at all, claiming that the document is self-documenting by nature of the tags provided.

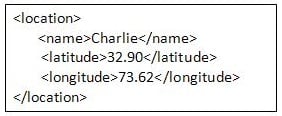

To see the errors in this assumption, let’s consider the following XML snippet:

The self-documenting crowd is correct in that, with tags, we do have more meaning than would be provided with an untagged document - we know that the number 32.90 is the latitude of the location rather than, say, the acreage. An old school data architect, however, would want to capture much more information to make this meaningful for data governance. For example: what is the definition of location? Is this a state, a continent or a store location?

An XML schema, if used, would help provide some additional meaning. Data types could be defined for each element and namespaces could provide context. The <documentation> tag could provide a basic description of the object. Traditional data models and metadata repositories, however, break down “documentation” into many more levels of granularity, such as description, notes, security level, steward and lineage.

Such additional information might clarify that the above location is a top-secret military base, used by the air force and defined by the Central Intelligence Agency; that the location was changed in 1992 following organizational changes in power; and that the source data came from two military databases joined with specific transformation rules.
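As a rough sketch, those levels of granularity can be modeled as a simple record. The class name, field types and the `missing_fields` helper are assumptions made for illustration, not the layout of any particular metadata repository; the sample values echo the military base example, with placeholder database names.

```python
from dataclasses import dataclass, field


@dataclass
class ElementMetadata:
    """Hypothetical governance record for one data element."""

    name: str
    description: str = ""
    notes: str = ""
    security_level: str = ""
    steward: str = ""
    lineage: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Return the names of governance fields that are still empty."""
        return [key for key, value in vars(self).items() if not value]


# What a bare XML tag gives us: a name and nothing else.
tag_only = ElementMetadata(name="location")

# What a data architect would want to record (placeholder source names).
documented = ElementMetadata(
    name="location",
    description="Military base used by the air force",
    notes="Location changed in 1992 following organizational changes",
    security_level="top secret",
    steward="Central Intelligence Agency",
    lineage=["military database A", "military database B"],
)
```

Calling `tag_only.missing_fields()` lists every governance field the tag alone leaves unanswered, while `documented.missing_fields()` comes back empty.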

To put this in context, it helps to refer to the famous - or infamous, depending on your view - long tail. In this case, with an infinite number of contributors and consumers on the web, the volume can be much larger in the tail than in the head of the curve. The same paradigm can be applied to data management.

As noted earlier, data management has revolved primarily around the closely controlled, high-volume database systems - the head. With XML, data can be accessed and manipulated by a large number of internet collaborators - that’s the long tail.

So what’s the risk of loosely managing the long tail, as long as the head is closely controlled? Take an online retailer whose website content is normally driven by a database system managed using the traditional approach, but which can now be accessed and transformed via the XML technology stack for a larger audience.

That’s clearly a risk if a retailer doesn’t understand its location information. Now, though, you’re mixing that with data from Google Maps and craigslist.com, and the divergent definitions of location information will cause an error and - in the worst case - your delivery winds up at the wrong house.
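One way such a mismatch can arise is sketched below. The two feeds and their coordinate conventions are entirely hypothetical and do not describe how any real mapping or listings service formats its data; the point is only that an undocumented definition can silently break a mashup.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coordinate:
    latitude: float
    longitude: float


# Hypothetical feeds: both describe the same address, but each follows its
# own undocumented convention for ordering the pair.
retailer_feed = {"location": (32.90, -97.04)}  # (latitude, longitude)
partner_feed = {"location": (-97.04, 32.90)}   # (longitude, latitude)


def naive_parse(record: dict) -> Coordinate:
    """Assumes every feed means (latitude, longitude)."""
    first, second = record["location"]
    return Coordinate(latitude=first, longitude=second)


def governed_parse(record: dict, order: str) -> Coordinate:
    """Uses an explicit, documented definition of the field's ordering."""
    first, second = record["location"]
    if order == "lat_lon":
        return Coordinate(latitude=first, longitude=second)
    if order == "lon_lat":
        return Coordinate(latitude=second, longitude=first)
    raise ValueError(f"unknown coordinate order: {order}")


naive_match = naive_parse(retailer_feed) == naive_parse(partner_feed)
governed_match = (
    governed_parse(retailer_feed, "lat_lon")
    == governed_parse(partner_feed, "lon_lat")
)
```

With the naive parser the two feeds appear to describe different places; once each feed's definition is recorded and applied, they resolve to the same coordinate.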

This matters even more when those in corporate management start asking to ride the mashup wave and/or obtain quick, pretty reports that haven’t been validated by a data governance team. The slick mashups their teenage whizkid can whip together at home may not be appropriate for their corporate reports. Regulations like Sarbanes-Oxley require the strict governance and data lineage reports that the traditional approach can provide.

As in many areas of life, then, while the new generation has some great ideas and innovations, they also have much to learn from the tried-and-tested lessons of their forebears. ®

Donna Burbank has more than 12 years' experience in data management, metadata management, and enterprise architecture. Currently leading the strategic direction of Embarcadero Technologies' architecture and modeling solutions, Donna has worked with dozens of Fortune 500 customers worldwide and is a regular speaker at industry events.