Original URL: https://www.theregister.com/2006/10/26/the_story_of_amds_fusion/

Cool Fusion: AMD's plan to revolutionise multi-core computing

Different cores for different chores

Posted in Personal Tech, 26th October 2006 10:31 GMT

AMD's Fusion CPUs will not be mere system-on-a-chip products, the chip company's chief technology officer, Phil Hester, insisted yesterday. Instead, the processor will be truly modular, capable of forming the basis for a range of application-specific as well as generic CPUs, from low-power mobile chips right up to components for high-performance computing systems.

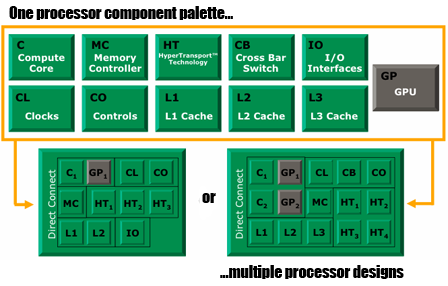

Start equipping CPUs with their own graphics cores and it certainly sounds like you'll have an SoC part on your hands. Not so, says Hester. Fusion will not just link monolithic components on a single die - the traditional SoC architecture - but will break these components down into more basic parts that can be mixed and matched as needed, then linked together using AMD's Direct Connect technology.

According to Hester, Fusion's roots lie much further back than AMD and ATI's discussions about graphics technology more tightly connected to the CPU, let alone the more recent takeover negotiations. When 'Hammer', AMD's original 64-bit x86 architecture, was in development, AMD designed the single-core chip so that a second core could be added as and when the market and new fabrication technologies made that possible. Going quad-core will require a suitably re-tailored architecture, as will moving up to eight cores and beyond to... well, who can say how many processing cores CPUs will need in the future?

Or, for that matter, what kind of cores? According to Hester, this is a crucial question. AMD believes building better processors will soon become a matter not of the number of cores a chip contains but of the specific functionality each of those cores delivers.

To build such a chip, particularly if you want to be able to change the mix of cores between different products based on it, you need a modular architecture, he says. So Fusion will break down the chip architecture into its most basic components: computational core, clock circuitry, the memory manager, buffers, crossbar switch, I/O, level one, two and three cache memory units, HyperTransport links, virtualisation manager, and so on - then use advanced design tools to combine them, jigsaw-fashion, into chips tailored for specific needs. Need four general-purpose cores, three HT links but no L3 cache? Then choose the relevant elements, and let the design software map them onto the die and connect them together.
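To make the jigsaw idea a little more concrete, here is a minimal Python sketch of a parts list assembled from the building blocks Hester lists. The block names, the `build_chip()` function and its checks are our own hypothetical illustration, not AMD's design tools, which would also have to handle placing and wiring the blocks on the die.

```python
from collections import Counter

# Hypothetical catalogue of the building blocks listed in the article.
CATALOGUE = {
    "cpu_core", "clock", "memory_controller", "buffer", "crossbar", "io",
    "l1_cache", "l2_cache", "l3_cache", "ht_link", "virtualisation_manager",
}


def build_chip(**counts: int) -> Counter:
    """Return a parts list for a hypothetical chip, rejecting unknown blocks."""
    unknown = set(counts) - CATALOGUE
    if unknown:
        raise ValueError(f"unknown building blocks: {sorted(unknown)}")
    if counts.get("cpu_core", 0) < 1:
        raise ValueError("a chip needs at least one computational core")
    # Leave out zero-count entries, so "no L3 cache" means no l3_cache block at all.
    return Counter({name: n for name, n in counts.items() if n > 0})


# "Need four general-purpose cores, three HT links but no L3 cache?"
chip = build_chip(cpu_core=4, ht_link=3, l3_cache=0,
                  memory_controller=1, crossbar=1, io=1)
print(dict(chip))
```

In this toy model, adding a new kind of module later is just a matter of adding its name to the catalogue, which is the flexibility the modular approach is meant to buy.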

At the time, says Hester, AMD didn't necessarily include a graphics core in its list of processor pieces, but it certainly allowed for the possibility that other elements might need to be added, not only 3D graphics rendering but also media processing, for example. However, it quickly became clear that the GPU would be the part best suited to this shift, not least because of the graphical demands Windows Vista will make in its initial and future releases.

Developing an entirely new programmable GPU core in-house is a risky endeavour, Hester admits. It's an expensive process and if you get it wrong, you weaken your entire processor proposition when CPU and GPU are as closely tied as the Fusion architecture mandates.

AMD naturally approached potential partners with graphics chip development expertise - long before ATI and AMD first began discussing the possibility of a merger in late December 2005/early January 2006, according to Hester - and while the ultimate choice was ATI, we'd be very surprised if AMD didn't talk to a number of ATI's competitors. In the glow of the post-merger honeymoon period, erstwhile ATI staffers paint a rosy picture of the two firms' closely aligned goals.

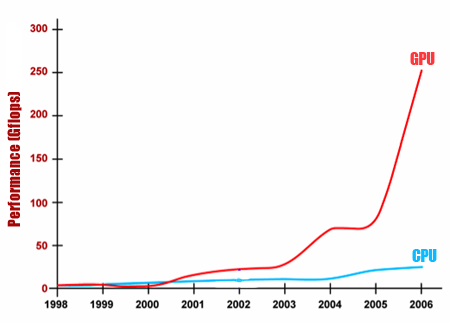

Certainly, ATI had developed an interest in processor technology, having seen researchers increasingly turn to programmable GPUs to process non-graphical data. As Bob Drebin, formerly of ATI's desktop PC products group but now AMD's graphics products chief technology officer, puts it, science and engineering researchers and users had become attracted to modern GPUs' hugely parallel architectures and gigaflop performance.

A GPU typically takes a heap of pixel data and runs a series of shader programs on each pixel to arrive at a final set of colour values. As display sizes have grown, with anti-aliasing multiplying the work still further, GPUs have evolved to process more and more pixels this way simultaneously.

But since the result of each pixel shader run is simply a set of binary digits, and digital data can be interpreted in whatever way the programmer finds meaningful, modern GPUs are not limited to pixels. Try fluid dynamics data instead, replacing pixels with particle velocities and using shader code to calculate the effect on a given particle of all the nearby particles. This time the result is a number you interpret as a new velocity value rather than a colour.
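The trick is that the GPU runs the same small program on every element independently, and only the programmer decides what the resulting numbers mean. The Python sketch below, whose function names are hypothetical, runs one such 'kernel' over a list one element at a time; a GPU would run each call as a separate parallel thread. The same loop drives both a pixel-brightening shader and a crude particle-velocity update.

```python
from typing import Callable, Sequence


def run_kernel(kernel: Callable[[int, Sequence[float]], float],
               data: Sequence[float]) -> list[float]:
    """Apply the same small program to every element, reading the old values
    and writing a fresh output list, so no call depends on another's result.
    Runs sequentially here; on a GPU each call would be its own thread."""
    return [kernel(i, data) for i in range(len(data))]


def brighten(i: int, pixels: Sequence[float]) -> float:
    # "Pixel shader": treat each value as a brightness in [0, 1] and boost it.
    return min(1.0, pixels[i] * 1.5)


def relax_velocity(i: int, velocities: Sequence[float]) -> float:
    # "Physics shader": nudge a particle's velocity towards the average of
    # itself and its immediate neighbours (a crude stand-in for viscosity).
    lo, hi = max(0, i - 1), min(len(velocities), i + 2)
    neighbours = velocities[lo:hi]
    mean = sum(neighbours) / len(neighbours)
    return velocities[i] + 0.5 * (mean - velocities[i])


print(run_kernel(brighten, [0.2, 0.5, 0.9]))        # numbers read as colours
print(run_kernel(relax_velocity, [0.0, 1.0, 0.0]))  # numbers read as velocities
```

Nothing in `run_kernel` knows whether it is handling pixels or particles; the interpretation lives entirely in the kernel, which is why shader hardware can be turned to physics.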

That's real physics, but as both ATI and Nvidia have been touting during the past six months or so, it equally applies to the movement of objects and substances in a game.

Crucially, though, this doesn't mean the CPU isn't needed any more, Drebin says. Going forward, it's a matter of matching a given task to the processing resource - GPU or CPU - that will be able to crunch the numbers most quickly. But if you're looking at apps with a much higher demand for GPUs than CPUs, ATI's thinking went, maybe we should be designing products that also provide the more general purpose processing that CPUs do so well.
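Drebin's point about matching each task to the right processor can be illustrated with a toy cost model. In this Python sketch the throughput and hand-off figures are invented for illustration, and the model itself, which splits work into data-parallel and serial portions plus a fixed cost for handing the task over, is our assumption rather than anything AMD or ATI has described.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    name: str
    parallel_gflops: float  # throughput on data-parallel work (made-up figure)
    serial_gflops: float    # throughput on branchy, serial work (made-up figure)
    handoff_s: float        # fixed cost of handing the task over, e.g. copying data


def estimated_seconds(res: Resource, parallel_gflop: float, serial_gflop: float) -> float:
    """Toy estimate: hand-off cost plus time for each portion of the work."""
    return (res.handoff_s
            + parallel_gflop / res.parallel_gflops
            + serial_gflop / res.serial_gflops)


def pick_resource(resources, parallel_gflop: float, serial_gflop: float) -> Resource:
    """Choose whichever resource the model predicts will finish first."""
    return min(resources,
               key=lambda r: estimated_seconds(r, parallel_gflop, serial_gflop))


CPU = Resource("CPU", parallel_gflops=20.0, serial_gflops=5.0, handoff_s=0.0)
GPU = Resource("GPU", parallel_gflops=300.0, serial_gflops=0.5, handoff_s=0.01)

# A mostly parallel physics step versus a mostly serial, branch-heavy task.
print(pick_resource([CPU, GPU], parallel_gflop=100.0, serial_gflop=0.1).name)  # GPU
print(pick_resource([CPU, GPU], parallel_gflop=0.5, serial_gflop=2.0).name)    # CPU
```

The hand-off cost matters: the more tightly CPU and GPU are coupled, as Fusion intends, the smaller that term becomes and the smaller the jobs worth passing to the GPU.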

It's not hard to imagine, incidentally, Nvidia thinking along similar lines, particularly given the rumours that it's exploring x86 development of its own. It's hard to believe it has some scheme to break into the mainstream CPU market - as AMD's Hester says, there are really only two companies that make x86 processors, but "four or five that have tried and failed" - but it's easy to picture it building general-purpose processing units tied to powerful GPUs and aimed at apps that need that balance of functionality.

The beauty of AMD's modular approach is that it allows the company to produce not only more CPU-oriented processors but also the GPU-centric devices ATI had been thinking of. And all of them are architecturally aligned and guaranteed baseline compatibility thanks to their adherence to the x86 instruction set.

That instruction set will itself have to adapt, Hester says, taking on new extensions that allow coders to access the features of the GPU directly - or, indeed, of other modules brought on board as and when it makes economic sense to do so. The big users will be the OpenGL and DirectX API development teams, but other software developers will want to use these future x86 extensions for non-graphical applications too.

AMD's Fusion roadmap calls for the first processors based on the new architecture to ship in late 2008 or early 2009, well into the 45nm era. Laying the groundwork will be a series of platforms AMD plans to promote, each combining multi-core CPUs with one or more separate GPUs to show how mixing and matching these elements lets vendors tailor systems more closely to particular applications. Come Fusion, the same logic will apply, only this time the choice is which processing unit you buy for a given system rather than which mix of system components.

Not that the discrete GPU is going to go away. Both Drebin and Hester stress certain apps need raw GPU horsepower, and since these chips remain a strong part of ATI's and now AMD's business, you'd hardly expect either man to play it down more than two years before Fusion's due to debut.

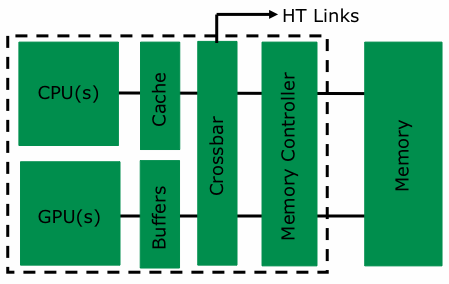

What Fusion represents, then, is the shift of the integrated graphics core off the North Bridge and onto the CPU, improving the speed of its link to the main core and the memory controller, and reducing system power consumption into the bargain.

That said, it might not be unrealistic to expect multi-core Fusion-based GPU-only chips to appear on future add-in cards, or multi-GPU Fusion processors to drive gaming-centric PCs. Much depends on how well AMD can build powerful graphics cores into the Fusion architecture, and whether, come 2008/2009, it reckons there's a business advantage to be gained by keeping Fusion GPUs a generation behind, as is the case with integrated graphics today.

AMD's ambitions stretch beyond gaming and scientific computing, and Hester talks about Fusion processors extending from the consumer electronics world right up to high-performance computing environments, from palmtops to supercomputers, as he puts it.

Intel's ambitions are not so very different. All its forecasts point to driving the x86 instruction set well beyond the PC and server spaces into mobile and consumer electronics applications. Even if Intel's financial woes earlier this year hadn't prompted the sale of its XScale ARM-based processor operation, the company's strategic emphasis on x86 would have made the move inevitable.

The battle lines, then, are drawn not over the basic ISA but over how to extend it with new features to meet emerging computing demands. Judging by what Intel has said to date, it's focusing on a multi-core future that provides enough processing resources to deliver GPU-like functionality through ISA extensions. Intel's plan for SSE 4, due to debut in the 45nm 'Penryn' timeframe, is a case in point.

Both companies have the transistor budgets to pursue their respective approaches, one bringing more technology off the rest of the system and onto the processor, the other expanding the chip's CPU power. The issue for Intel will be whether its reliance on the North Bridge gives it enough bandwidth for its approach to run smoothly. Only time will tell, though Intel has already been rumoured to be developing on-CPU memory controllers.

Roll on round two of the multi-core contest. ®