Server tech is BORING these days. Where are all the shiny new goodies?

Cisco, Dell, HP - let's get cracking, guys

Blocks and Files Once upon a time, a mere 10 years or so ago, servers had direct-attached disks or network-attached disk arrays. The flash in the arrays – SSDs primarily, but also in the controllers – made data access faster.

However, multi-core, multi-threaded CPUs have an almost insatiable appetite for data. Any access latency hurts, because the cores sit idle while they wait.

The response was to start bringing the hottest data into the server itself, initially by caching it on PCIe flash cards; think Fusion-io and others. In-memory databases were possible if the return justified the high cost, which for most applications it didn't.

Advanced software put the PCIe flash into the same namespace as memory to form storage memory. This wasn't as fast as RAM, but it was cheaper than RAM and faster than an access outside that namespace.

Still, PCIe flash is not as fast as memory. So, latterly, fresh technology brings the data even closer to the CPU by placing it on the memory bus, where latency is half that of a PCIe flash card. SanDisk/SMART Storage ULLtraDIMMs and Micron's non-volatile DIMM technology are the main examples of this.

Then there is federating software, which pools storage on multiple servers into a single resource. In other words, virtual SAN or VSA software; what VMware calls VSAN. The technology creates a SAN without a dedicated SAN fabric such as Fibre Channel.

Atlantis Computing's ILIO and ILIO USX go one step further by using memory itself as storage, or so the company says. That's debatable: RAM is not persistent and needs backing up with non-volatile storage, so some would say that by definition it's a cache. But let's not count the angels on that pinhead.

PernixData provides a federated cache inside VMware's hypervisor.

Each one of these technologies – PCIe flash, storage memory flash, DIMM flash, in-memory virtual machine data, and hypervisor caching – accelerates a server, making it capable of handling more VMs. Deduplication and compression lower the effective cost of both flash and RAM, and servers have enough cores to run them without cutting the overall VM count too much.

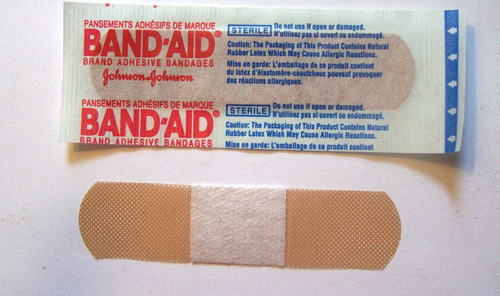

Each one of these technologies is a bandage for the latency problem of accessing data on disk and network-attached storage. These bandages have been created by startups, not by the server vendors. Isn't it time the server vendors rethought server design and integrated these technologies into their own offerings?

Put another way: why has there been so little server technology innovation from Cisco, Dell, HP, and IBM/Lenovo? ®