
Intel: Now THIS is how you forge physical, virtual switches

Chipzilla wants to take over data center and telco networking

Intel has x86 chips, switch ASICs, and tweaks to the Linux operating system that it says allow it to make better physical switches. It is also advancing the idea of using its chip and software tech to build a virtual switch that links virtualized servers together and layers network application services onto the network.

So at the Open Networking Summit today, Chipzilla is rolling out two networking reference platforms as part of its long-term goal to significantly expand its networking business and thereby boost the revenues and profits of its Data Center and Connected Systems Group.

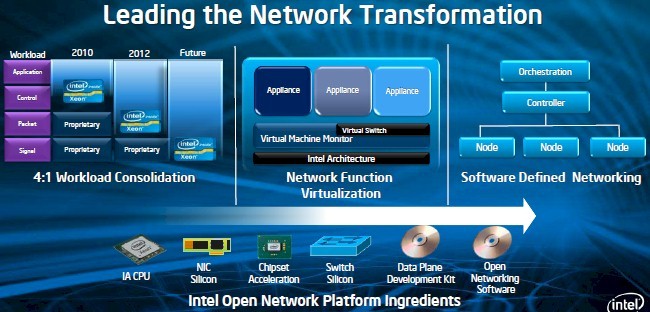

That goal, as Intel explained last year, is to ramp up the use of Xeon and Atom processors in servers and storage, and of x86 chips and Fulcrum ASICs in various kinds of switches and routers, which Intel hopes will help it double its data center business to $20bn by 2016.

According to Rose Schooler, general manager of the communications and storage infrastructure group at Intel (meaning everything that is not a server), the opportunity for using Intel technology is quite large, and so will be the payoff for both data centers and telcos/service providers.

"The traditional approaches are very black box and very expensive, with custom ASICs, custom FPGAs, and custom software," explained Schooler in a briefing with the press ahead of her keynote address at Open Networking Summit in Santa Clara on Thursday. "This approach takes away a lot of the oxygen from innovation."

That's one way of looking at it. Another is that Intel's hegemony on the desktop, and now in the server, has sucked the oxygen and the profits out of those markets and brought them to Intel. Clearly, Intel wants to pull the same maneuver in storage and networking as data centers build out, driven by the shift to cloudy data centers and the cloudy apps that all kinds of devices now tap into.

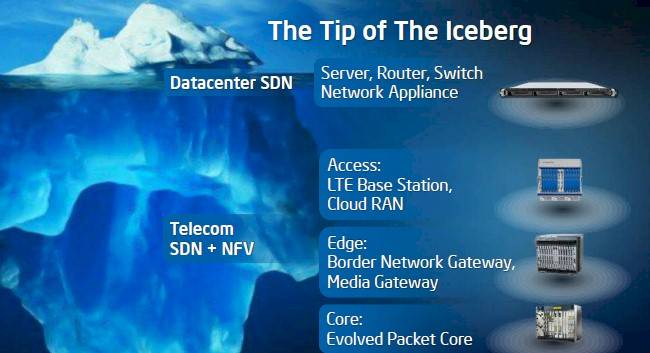

Intel says the data center is just the tip of the iceberg for network virtualization

Intel is absolutely right, as Schooler points out, that the homogenizing of servers with the x86 platform has made it easier to move workloads from one device to another, and that is a real benefit. And there is no doubt that as Intel has ramped up its x86 server business, it has used Moore's Law, its wafer bakers, and volume production to radically increase the performance of processors and reduce their costs.

Intel has repeated this strategy for the chips inside of storage devices already and is gearing up to do the same in networking to lower the capital expenses associated with switches and routers, whether they are physical or virtual.

Intel wants to sell components, not switches and appliances

On the operational side of the IT budget, Intel wants customers to deploy software-defined networking (SDN) and network function virtualization (NFV). Both are ways of virtualizing different layers of the network stack, and together they integrate Layer 2 and 3 switching (that's the SDN part) and the network applications that ride up in Layers 4 through 7 (that's the NFV part) on one device that is essentially a hybrid server/switch.
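To make the hybrid idea a bit more concrete, here is a toy Python sketch of one box doing both jobs: a Layer 4 network function (the NFV side) inspects each packet first, then a Layer 3 longest-prefix lookup (the switching side) picks an egress port. The `Packet` type, the `block_telnet` firewall rule, and the `ROUTES` table are hypothetical illustrations, not anything Intel has published.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Callable, Optional


@dataclass
class Packet:
    src_ip: str
    dst_ip: str
    dst_port: int       # Layer 4 destination port
    payload: bytes = b""


# A network function returns the packet (possibly modified) or None to drop it.
NetworkFunction = Callable[[Packet], Optional[Packet]]


def block_telnet(pkt: Packet) -> Optional[Packet]:
    """Hypothetical Layer 4 firewall rule: drop anything aimed at port 23."""
    return None if pkt.dst_port == 23 else pkt


def route(pkt: Packet, table: dict[str, int]) -> Optional[int]:
    """Layer 3 step: longest-prefix match of the destination IP to an egress port."""
    addr = ip_address(pkt.dst_ip)
    best = None  # (prefix length, port) of the most specific match so far
    for prefix, port in table.items():
        net = ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0]):
            best = (net.prefixlen, port)
    return best[1] if best is not None else None


def process(pkt: Packet, chain: list[NetworkFunction], table: dict[str, int]) -> Optional[int]:
    """Run the NFV chain, then switch whatever survives; None means dropped or unroutable."""
    for fn in chain:
        pkt = fn(pkt)
        if pkt is None:
            return None
    return route(pkt, table)


# Hypothetical routing table: prefix -> egress port (port 0 is the default route).
ROUTES = {"10.0.0.0/8": 1, "10.1.0.0/16": 2, "0.0.0.0/0": 0}
```

For example, `process(Packet("192.0.2.1", "10.1.2.3", 80), [block_telnet], ROUTES)` picks port 2 because the /16 is the most specific match, while the same packet sent to port 23 never reaches the switching step.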

Schooler trotted out telco Verizon, which has been an early adopter of SDN and NFV technologies, and has decreased the time to deploy a new network and its applications by 90 per cent and chopped capex and opex by between 40 and 60 per cent.

People have been talking about SDN in the data center for servers, routers, and switches, but Schooler says this is just the tip of the iceberg. The same SDN and NFV technologies will be deployed in the access layer of the network at telcos and service providers (LTE base stations and cloud RANs), at the edge of the network (which includes border network gateways and media gateways), and in the core switches.

Intel is building reference designs for physical and virtual switching

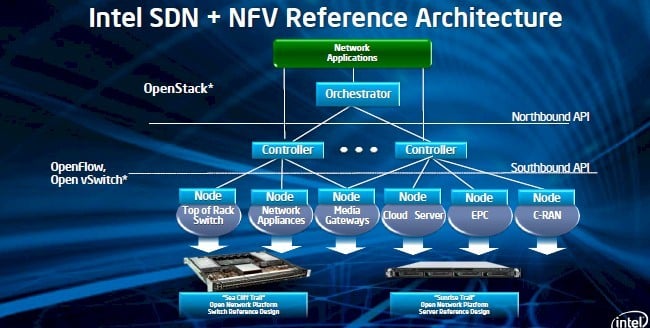

The way Intel sees the networking world evolving, there will be an orchestration layer for networking, servers, and storage (in the example above, it is OpenStack), which then feeds down into OpenFlow-enabled network controllers and Open vSwitch virtual switches tucked inside the hypervisors. Below this come two reference switching platforms, the "Seacliff Trail" physical switch and the "Sunrise Trail" virtual switch.
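The division of labour in that stack can be sketched as a reactive OpenFlow-style loop: the orchestration layer tells a controller where each VM lives, a virtual switch forwards from its own flow table, and on a table miss it asks the controller, which installs a flow so later packets stay on the fast path. `Controller`, `VirtualSwitch`, and their methods are hypothetical stand-ins written for illustration; they are not the OpenStack, OpenFlow, or Open vSwitch APIs.

```python
from typing import Optional


class Controller:
    """Hypothetical stand-in for an OpenFlow controller."""

    def __init__(self) -> None:
        self.vm_ports: dict[str, int] = {}  # VM MAC address -> switch port
        self.packet_ins = 0                  # table misses the controller has handled

    def place_vm(self, mac: str, port: int) -> None:
        # Called by the orchestration layer (OpenStack in Intel's diagram) when it places a VM.
        self.vm_ports[mac] = port

    def packet_in(self, switch: "VirtualSwitch", dst_mac: str) -> Optional[int]:
        # The switch had no matching flow. If the destination is known,
        # push a flow down so the next packet never leaves the data plane.
        self.packet_ins += 1
        port = self.vm_ports.get(dst_mac)
        if port is not None:
            switch.install_flow(dst_mac, port)
        return port


class VirtualSwitch:
    """Hypothetical virtual switch with a one-field flow table (destination MAC -> port)."""

    def __init__(self, controller: Controller) -> None:
        self.controller = controller
        self.flows: dict[str, int] = {}

    def install_flow(self, dst_mac: str, port: int) -> None:
        self.flows[dst_mac] = port

    def forward(self, dst_mac: str) -> Optional[int]:
        # Fast path: a flow already exists. Slow path: punt to the controller.
        if dst_mac in self.flows:
            return self.flows[dst_mac]
        return self.controller.packet_in(self, dst_mac)


ctl = Controller()
vswitch = VirtualSwitch(ctl)
ctl.place_vm("52:54:00:00:00:01", 3)
```

Only the first packet to a given VM costs a trip to the controller; after that the switch answers from its own table, which is why the controller can sit in software while forwarding stays fast.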

The Seacliff Trail reference box is aimed at physical switching

Intel gave us a peek at the Seacliff Trail SDN-enabled reference switch back at Intel Developer Forum last September.

The Seacliff Trail switch has 40 10Gb/sec Ethernet ports and four 40Gb/sec Ethernet ports all packed into a 1U rack-mounted chassis, like many other top-of-rack switches these days. The switch employs Intel's Fulcrum FM6764 switch ASIC, which has OpenFlow 1.0 control protocols etched into it.
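OpenFlow 1.0 describes a flow table in which each entry matches on up to twelve header fields, any of which can be wildcarded, and the winning entry's actions decide the packet's fate. The Python sketch below models only that lookup logic in software; it says nothing about how the FM6764 does it in silicon, it treats IP addresses as exact matches rather than supporting the prefix masks the spec allows, and the `FlowTable` class and action strings such as `"output:1"` are hypothetical.

```python
from dataclasses import dataclass

# The twelve header fields an OpenFlow 1.0 match can test (names follow the spec's ofp_match).
OF10_FIELDS = ("in_port", "dl_src", "dl_dst", "dl_vlan", "dl_vlan_pcp", "dl_type",
               "nw_tos", "nw_proto", "nw_src", "nw_dst", "tp_src", "tp_dst")


@dataclass
class FlowEntry:
    priority: int
    match: dict            # fields omitted here are wildcarded
    actions: list
    packet_count: int = 0  # per-flow counter

    def matches(self, pkt: dict) -> bool:
        return all(pkt.get(name) == value for name, value in self.match.items())


class FlowTable:
    def __init__(self) -> None:
        self.entries: list[FlowEntry] = []

    def add(self, priority: int, actions: list, **match) -> None:
        unknown = set(match) - set(OF10_FIELDS)
        if unknown:
            raise ValueError(f"not OpenFlow 1.0 match fields: {sorted(unknown)}")
        self.entries.append(FlowEntry(priority, match, actions))
        # In OpenFlow 1.0 an exact-match entry (no wildcards) outranks every wildcarded one;
        # wildcarded entries are then ordered by priority.
        self.entries.sort(key=lambda e: (len(e.match) == len(OF10_FIELDS), e.priority),
                          reverse=True)

    def lookup(self, pkt: dict) -> list:
        for entry in self.entries:
            if entry.matches(pkt):
                entry.packet_count += 1
                return entry.actions
        return ["controller"]  # table miss: hand the packet to the controller (packet-in)


table = FlowTable()
table.add(100, ["drop"], dl_type=0x0800, nw_proto=6, tp_dst=23)  # block Telnet over IPv4
table.add(10, ["output:1"], dl_type=0x0800)                       # other IPv4 out port 1
```

A Telnet packet hits the priority-100 drop rule, other IPv4 traffic falls through to port 1, and anything else (an ARP frame, say) misses the table and goes to the controller.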

The FM6764 also supports VXLAN, the Virtual Extensible LAN protocol espoused by VMware, which puts a Layer 2 overlay on top of Layer 3 networks so virtual network links don't break as virtual machines live-migrate around server clusters. The ASIC also supports NVGRE, the alternative to VXLAN that Microsoft, Intel, Dell, and HP have put forward.
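Both overlays wrap the virtual machine's original Ethernet frame in a small header carrying a 24-bit segment ID, which is what lets them go far beyond the roughly 4,000 IDs a 12-bit VLAN tag allows. The sketch below builds just those 8-byte headers following the published formats (RFC 7348 for VXLAN, RFC 7637 for NVGRE); the outer Ethernet and IP headers, plus VXLAN's outer UDP header, that a real tunnel endpoint also adds are left out, and the function names are our own.

```python
import struct

VXLAN_UDP_PORT = 4789          # IANA-assigned destination port for the (omitted) outer UDP header
TRANSPARENT_ETHERNET = 0x6558  # GRE protocol type meaning "the payload is an Ethernet frame"


def vxlan_header(vni: int) -> bytes:
    """8-byte VXLAN header: flags (I bit set), 24 reserved bits, 24-bit VNI, 8 reserved bits."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", 0x08 << 24, vni << 8)


def nvgre_header(vsid: int, flow_id: int = 0) -> bytes:
    """8-byte GRE header as NVGRE uses it: only the Key bit set, key = 24-bit VSID + 8-bit FlowID."""
    if not 0 <= vsid < 1 << 24:
        raise ValueError("VSID must fit in 24 bits")
    if not 0 <= flow_id < 1 << 8:
        raise ValueError("FlowID must fit in 8 bits")
    return struct.pack("!HHI", 0x2000, TRANSPARENT_ETHERNET, (vsid << 8) | flow_id)


def vni_of(header: bytes) -> int:
    """Recover the VNI from the first 8 bytes of a VXLAN-encapsulated payload."""
    _, word = struct.unpack("!II", header[:8])
    return word >> 8


inner_frame = bytes(64)  # placeholder for the VM's original Ethernet frame
vxlan_payload = vxlan_header(5000) + inner_frame
```

Either way the tenant's frame rides inside an ordinary Layer 3 packet, so the physical network only has to route between hypervisors and never needs to know where a migrated VM ended up.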

The Seacliff Trail reference platform also includes a 22 nanometer "Gladden" Core processor, which is part of the "Crystal Forest" AMC control plane module using the "Cave Creek" chipset. This control module does deep packet inspection, packet acceleration, encryption/decryption, and other kinds of coprocessing for the Fulcrum ASIC in the switch.

This AMC module also packs an unspecified FPGA and runs the hardened and streamlined Linux created by Wind River. The Seacliff reference switch has 4GB of main memory, 8GB of NAND flash for local storage, and 8MB of SPI flash for the switch's boot BIOS. There is also an embedded Intel 82599 10Gb/sec Ethernet LAN controller.

Sometimes in the network, you are not doing physical switching, but virtual switching with lots of network applications running on the device. (There is no sense wasting money on a Fulcrum ASIC and ports if you are not going to use them.)

Sunrise Trail is for virtual switching and network function virtualization

The Sunrise Trail device is really a server acting like a switch, rather than a switch with a server embedded in it as with Seacliff Trail. It uses Core or Xeon processors and the Intel 89XX series of communications chips.

It also sports Wind River Linux and the Data Plane Development Kit (DPDK), a set of networking libraries that helps speed up Open vSwitch when it runs on Intel Core and Xeon processors. The DPDK libraries, which do their packet processing in user space rather than in the kernel's network stack, improve memory management and speed up queue and ring functions and flow classification, among other features.
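DPDK's rings (`rte_ring` in its C API) are fixed-size, lockless FIFO queues whose size is a power of two, so a free-running counter becomes a slot index with a cheap bit mask instead of a division, and packets move through them in bursts rather than one at a time. The Python sketch below shows only that indexing and burst idea for a single producer and a single consumer; it is not thread-safe, it is not DPDK's API, and the `Ring` class and its method names are hypothetical.

```python
class Ring:
    """Hypothetical single-producer/single-consumer ring with a power-of-two size."""

    def __init__(self, size: int) -> None:
        if size < 2 or size & (size - 1):
            raise ValueError("size must be a power of two, at least 2")
        self.mask = size - 1
        self.slots: list = [None] * size
        # Free-running counters: head counts items ever enqueued, tail items ever dequeued.
        # (In C these would be unsigned 32-bit values that wrap; Python ints just grow.)
        self.head = 0
        self.tail = 0

    def count(self) -> int:
        return self.head - self.tail

    def free(self) -> int:
        return len(self.slots) - self.count()

    def enqueue_burst(self, items: list) -> int:
        """Enqueue as many items as fit; return how many were accepted."""
        n = min(len(items), self.free())
        for i in range(n):
            self.slots[(self.head + i) & self.mask] = items[i]  # mask instead of modulo
        self.head += n
        return n

    def dequeue_burst(self, max_items: int) -> list:
        """Dequeue up to max_items in FIFO order."""
        n = min(max_items, self.count())
        out = [self.slots[(self.tail + i) & self.mask] for i in range(n)]
        self.tail += n
        return out


ring = Ring(4)
accepted = ring.enqueue_burst(["pkt1", "pkt2", "pkt3", "pkt4", "pkt5"])  # only 4 fit
```

Because the counters only ever grow, "how full is the ring" is a single subtraction, and the producer and consumer each touch only their own counter, which is the property a lockless C version builds on.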

Schooler tells El Reg that Intel very much believes in supporting multiple controllers and virtual switches, and that we can expect versions of the Data Plane Development Kit for the alternatives on the market. Microsoft, Cisco Systems, NEC, and VMware all have their own virtual switches, and these should see some love on the Sunrise Trail reference platform. ®