
Storage management tools: Why won't it let me... GRRRR

Big picture vs detail - you can't have it both ways

Comment: Is it important to manage an individual device very well, or the entire estate at a much higher level?

The nature of the management tools currently available means that, for now, we have to make a choice. I’m going to focus on the storage world, but from what I’ve seen the problem affects most disciplines in IT.

The problem lies in the fact that the team that develops the device also develops most of its management tools - at least initially. The device and the tools grow up side by side: the test and QA teams need ways to exercise the functionality built by the engineering teams, and there needs to be a simple way to demonstrate that functionality to customers and early investors.

Initially these user interfaces are designed to be restrictive. If it is too easy to do the wrong thing, an army of users will take that path, and the new device will quickly fall prey to bad reviews and a poor user experience. Restricting what can be done with the tools keeps the train on the tracks and the code on the well-tested paths. It limits the possible outcomes to a number that can be tested far more easily, in much less time and therefore at lower cost.

Wait a second - who put THAT in?

The problem is that, as the product grows and its management becomes the responsibility of a different team, the historical reasons for some of the restrictions often get lost in the mists of time. Yet the restrictions persist, stopping the user from performing what might otherwise be very useful tasks with the native software. These "limits" have another side effect: they push innovative and industrious users to find other ways of creating the functionality they want. This has never been more true than now, as DevOps gains popularity and momentum.

It takes a smart organisation to spot this trend and harness it, taking a lead from some of the more popular development communities and empowering users to be creative with its software. Vendors need to become less precious about the kit they sell and about what end users ask of it.

One company that has taken this route is NetApp. I’ve long struggled with Data Fabric Manager (DFM), or OnCommand Core as it’s now known.

As a quick side-rant, a note to all marketing teams... Calling all of your products by a similar name does not a unified approach make. When products perform different tasks, have a different look and feel and are totally independent of one another, don’t call them all Uni-something or OnCommand. They are different things, so give them different names. It spares a lot of confusion when you're presenting to a large group and half the room isn't sure which product you're referring to. Rant over.

So, back to my struggle with OnCommand Core. It isn’t a poor product; it's quite good. Nor has it failed to achieve what NetApp set out for it to do: I’m sure it comes pretty close. The problem is that NetApp’s goal for OnCommand is a country mile away from what most end users want to achieve with it.

'WFA' and other solutions

As I said, though, NetApp has recently taken a more enlightened path in the form of Workflow Automation. I’m sure it has since been branded OnCommand Flow or some such marketing-friendly term, but the community that has formed around the product - I count myself as a member - knows it as WFA. I think this was a move of pure genius on NetApp's part.

The product comes from the team that was formerly Onaro, the creators of SANscreen (now known as OnCommand Insight, Perform, etc., if my point needed making any more evident). The intelligence in this product lies not in what it does out of the box, which is already pretty nice - although slightly convoluted - but in what it allows the user to do.

It presents a very neat set of building blocks but, more importantly, a simple means of creating new blocks and extending existing ones using the very powerful PowerShell Toolkit. NetApp has always been very good at providing API access, and those APIs have generally been well built and stable. This has allowed a flourishing partner base to create tools and applications around the platform, while NetApp itself focuses on the hardware and core software functionality of the FAS platform.

This has led to application integration with BMC software, VMware vSphere and Microsoft Hyper-V, to name but a few. These integrations are very quick, feature-rich and well supported - all things that vendors with a more closed approach to the ecosystem often struggle with.

Vendors: Use the talent in your user pool...

WFA takes this open approach and makes it simpler through its building blocks. Very little time needs to be invested to get an orchestration or management platform that is genuinely powerful and tailored quite snugly to individual requirements. And because most application platforms these days also offer a PowerShell interface of some kind - of varying quality - those products can be rolled into the same workflows.
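To make the building-block idea a little more concrete, here is a minimal Python sketch of the general pattern: small reusable blocks that each read and update a shared context, chained into a workflow, with an existing block extended by wrapping it rather than editing it. It is a hypothetical illustration only, not WFA's own API or data model. The block names, the `filer01` controller, the `/vol` paths, the NFS URL format and the datastore naming rule are all invented for the example, and the blocks only compute names rather than touching real kit.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

# Hypothetical sketch of a building-block workflow pattern.
# This is NOT WFA's API or data model; all names are invented for illustration.
Context = Dict[str, Any]


@dataclass
class Block:
    """A reusable step: reads and updates a shared context dictionary."""
    name: str
    action: Callable[[Context], Context]


def extend(block: Block, before: Callable[[Context], Context], name: str) -> Block:
    """Build a new block that runs an extra step before an existing block."""
    return Block(name, lambda ctx: block.action(before(ctx)))


@dataclass
class Workflow:
    name: str
    blocks: List[Block] = field(default_factory=list)

    def add(self, block: Block) -> "Workflow":
        self.blocks.append(block)
        return self  # return self so calls can be chained

    def run(self, context: Context) -> Context:
        """Run the blocks in order on a copy of the input; record the steps taken."""
        ctx = dict(context)
        steps = []
        for block in self.blocks:
            ctx = block.action(ctx)
            steps.append(block.name)
        ctx["steps"] = steps
        return ctx


# Hypothetical blocks: they only compute names, they do not touch real storage.
def create_volume(ctx: Context) -> Context:
    ctx["volume"] = f"/vol/{ctx['service']}_data"
    return ctx


def export_volume(ctx: Context) -> Context:
    ctx["export"] = f"nfs://{ctx['filer']}{ctx['volume']}"
    return ctx


def add_datastore(ctx: Context) -> Context:
    # Stands in for a step that would be driven through a hypervisor's own scripting interface.
    ctx["datastore"] = f"ds_{ctx['service']}"
    return ctx


def lowercase_service(ctx: Context) -> Context:
    # A local naming rule layered on top of the stock "create volume" block.
    ctx["service"] = str(ctx["service"]).lower()
    return ctx


# Compose a workflow, extending the stock "create volume" block with the naming rule.
provision = (
    Workflow("provision-nfs-datastore")
    .add(extend(Block("create volume", create_volume), lowercase_service,
                "create volume (lowercase names)"))
    .add(Block("export volume", export_volume))
    .add(Block("add datastore", add_datastore))
)

result = provision.run({"service": "Payroll", "filer": "filer01"})
print(result["export"])     # nfs://filer01/vol/payroll_data
print(result["datastore"])  # ds_payroll
```

Wrapping the stock block, rather than rewriting it, is one way to get the "extend existing blocks" behaviour: the original block stays untouched and reusable, while the local rule lives alongside it in the workflow.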

The ecosystem I see growing up around this product could give NetApp real momentum as it seeks a foothold in the cloud and automated infrastructure spaces. It’s certainly one of the first platforms I now turn to, because of this simplicity of management and integration.

I would love to see other vendors follow NetApp's lead on open APIs, enabling power users of their kit not only to solve their own problems, but also giving them the incentive and opportunity to share that work and solve the same problems for other users. That is how strong communities are built, and that is how a product becomes strong.

Embrace DevOps, use the talent in your user pool and stop restricting us and treating us like children who would break your toys! We are big enough and ugly enough to take responsibility for the mistakes we introduce ourselves. Equip us with robust guidelines and a thorough understanding of your products, and remove the walls to innovation around them!

As for end users, we can help by being responsible community members: sharing our solutions, supporting the openness of that community and generally making the vendors' effort to provide and support open APIs a worthwhile and rewarding experience for everyone.

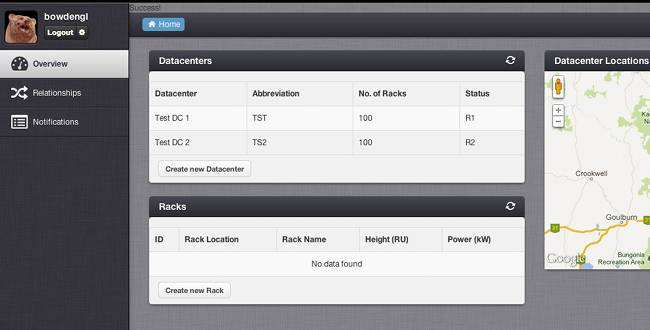

Screenshot of the open-source LUCR data centre management project, aka LucrDCIM

Lucr* is currently working on a project that we hope will stimulate some of this sharing and community spirit. Based initially on the technology we work with most - NetApp storage (through WFA and API access) and VMware virtualisation, combined with industry standards such as SMI-S and CDMI - we are building a data centre management product that manages the physical and logical construction of IT services.

The community part is this: we will be releasing the whole thing as open source through GitHub. The project is affectionately known as LucrDCIM at the moment, but the name is something we definitely want to collaborate on. We are currently putting together the documentation and collateral to support this move, and working through the legal quagmire of combining a number of existing open-source initiatives with differing and sometimes competing licensing models. Once all of that is complete, we will announce further details.

If you are interested in knowing more about the project and would like to get involved at an earlier stage, feel free to get in touch at hello@lucr.com and we will see what we can do to get everyone involved. We invite vendors and end users alike to contribute and shape the future of what we think is a very exciting project. ®

*Glyn Bowden is the founder and CEO of Lucr Ltd.