
Dell lends Apache ARM software efforts a hand

Forges 'Zinc' custom Calxeda ARM server

The hardware engineers at Dell's Data Center Solutions custom server unit have bent some metal around Calxeda's EnergyCore ARM server processors and donated a box to the Apache Software Foundation. The foundation can use it to tweak and tune the Apache web server, the Hadoop data-munching stack, and the Cassandra NoSQL data store to run on the EnergyCore ECX-1000 processors and their integrated Layer 2 network switch.

Don't get too excited about the new "Zinc" ARM servers that the server maker has created for Apache geeks, because you can't have one. Drew Schulke, product marketing manager for the DCS unit, tells El Reg that Dell was keen on getting Calxeda-based machinery to Apache so its developers could do software builds.

Those builds will in turn inform the processor and interconnect design work going on at Calxeda, as well as the server design work DCS is doing on behalf of hyperscale data center operators who want to see how ARM might fit into the glass house. The Zinc server is not only a special, limited box; it is even more limited than typical DCS machinery, given how immature the software stack for ARM servers is at the moment.

Rather than just take the skinny sled design of the "Copper" ARM server that Dell announced back at the end of May using Marvell's 40-bit Sheeva-derived Armada XP 78460, Dell did some actual engineering work.

The Copper server is based on the 3U C5000 server sled enclosure, and each Copper enclosure has room for a dozen sleds. Each sled has a single system board with four of the four-core ARM chips, which are ARMv7-A compliant processors running at 1.6GHz with 40-bit memory extensions.

The system board has four memory slots, one per socket, and an integrated "Cheetah" Layer 2 network switch, also from Marvell. The components are similar to those used in Codethink's Baserock Slab server, announced back in September but packaged up completely differently. Anyway, the Copper sleds have four 2.5-inch disks, one per ARM socket, and up to a dozen of the sleds can be slid vertically in the C5000 chassis.

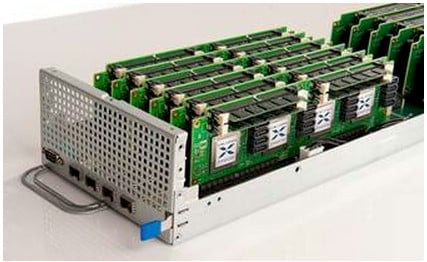

Dell's Zinc ARM server

To make the Zinc servers, Dell did not just do a global replace of Marvell Armada with Calxeda EnergyCore inside the C5000 chassis and the compute and storage sleds. Rather, the company grabbed the 4U "Zeus" C8000 chassis announced in September of this year and engineered it so a lot of Calxeda processing or storage cards can be crammed into a small space.

Schulke says that Dell has been working on the design since earlier this year, and by the way, it is a little denser than the experimental "Redstone" ARM server launched by HP as part of its Project Moonshot hyperscale server effort last November.

Dell's Zinc can put 360 ECX-1000 server nodes in a 4U chassis (five sleds turned on their sides), or 90 nodes per rack unit, while the HP design can do 288 ECX-1000 nodes in a 4U SL6500 enclosure, or 72 nodes per rack unit.
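The density figures are simple arithmetic, sketched below. The Zinc node count is rebuilt from the sled layout this article gives (five sleds, each with an eighteen-slot backplane of four-node EnergyCards), assuming every slot holds an EnergyCard; the HP figure is taken as quoted. The helper name `nodes_per_rack_unit` is purely illustrative.

```python
# Rack-density comparison using the node counts quoted in the article.
# Assumption: both enclosures occupy exactly 4 rack units (4U), and every
# Zinc backplane slot is populated with a compute EnergyCard.
RACK_UNITS = 4

def nodes_per_rack_unit(nodes: int, rack_units: int = RACK_UNITS) -> float:
    """Return server nodes per rack unit for an enclosure (hypothetical helper)."""
    return nodes / rack_units

# Dell Zinc: 5 sleds x 18 slots x 4 ECX-1000 nodes per EnergyCard
zinc_nodes = 5 * 18 * 4
# HP Redstone in an SL6500 enclosure, as quoted
hp_redstone_nodes = 288

zinc_density = nodes_per_rack_unit(zinc_nodes)
hp_density = nodes_per_rack_unit(hp_redstone_nodes)
# Relative advantage of Zinc over Redstone, as a fraction
advantage = zinc_density / hp_density - 1

if __name__ == "__main__":
    print(f"Zinc: {zinc_nodes} nodes, {zinc_density:.0f} per U")
    print(f"HP:   {hp_redstone_nodes} nodes, {hp_density:.0f} per U")
    print(f"Zinc density advantage: {advantage:.0%}")
```

On these numbers, Zinc packs roughly 25 per cent more nodes into the same rack space.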

Yes, hyperscale data centers pay attention to deltas like that.

To build the Zinc sled, Dell worked with Calxeda to create a custom backplane that is different from the twelve-slot SP1200 board from Calxeda that a lot of server makers are using to deploy ECX-1000 processors in machines of various shapes and sizes.

Dell and Calxeda cooked up a new backplane with eighteen slots. These can be populated with EnergyCard processor cards, each of which has four ECX-1000 processors with a memory stick and four SATA ports per socket, or with EnergyDrive storage cards, which put four skinny SATA drives on a card of the same size.

The ECX-1000 processors are based on the 32-bit Cortex-A9 processor, which springs from the ARMv7 spec and which does not have 40-bit memory extensions. You will have to wait until the "Midway" chip, due next year according to the latest roadmap, for Calxeda to shift to Cortex-A15 designs and their 40-bit memory addressing.

The Midway chips are socket compatible with the current ECX-1000s, so Calxeda doesn't need to do a board redesign; it will just swap processors and allow a 16GB memory stick instead of a 4GB one for each socket.

The Zinc board is designed to allow a mix of EnergyCard and EnergyDrive cards on each backplane, and of course, the systems-on-chip include what Calxeda is now calling its Fleet Services Fabric Switch, which can glue up to 4,096 nodes (that's 1,024 EnergyCards) together in a flat, distributed Layer 2 network. (This is the real secret sauce of the Calxeda design.)
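The card count behind the fabric limit follows from four nodes per EnergyCard. A minimal sketch, where the constant and function names are hypothetical and the only inputs are the figures quoted above:

```python
# How many EnergyCards the Fleet Services Fabric Switch limit corresponds to.
# Assumption: each EnergyCard carries exactly four ECX-1000 nodes.
NODES_PER_ENERGYCARD = 4
MAX_FABRIC_NODES = 4096  # fabric limit, as quoted

def energycards_for(nodes: int) -> int:
    """Smallest number of EnergyCards supplying at least `nodes` nodes."""
    # Ceiling division without floats: -(-a // b)
    return -(-nodes // NODES_PER_ENERGYCARD)

max_cards = energycards_for(MAX_FABRIC_NODES)

if __name__ == "__main__":
    print(f"{MAX_FABRIC_NODES} nodes -> {max_cards} EnergyCards")
```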

Full sled view of the Zinc server, with compute and storage

The machine that the Apache Software Foundation got from Dell has six EnergyCards, for a total of 24 server nodes, and a dozen EnergyDrives, for a total of 48 disk drives, yielding two drives per socket or a half drive per core, depending on how you want to look at it.
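The drive ratios for the Apache box can be checked the same way. The sketch below models one Zinc backplane; the `ZincBackplane` class is hypothetical, and it assumes four nodes per EnergyCard, four drives per EnergyDrive, eighteen slots, and four cores per ECX-1000 (the quad-core count implied by the "half drive per core" figure).

```python
from dataclasses import dataclass

@dataclass
class ZincBackplane:
    """Hypothetical model of one eighteen-slot Zinc backplane."""
    energycards: int
    energydrives: int
    nodes_per_card: int = 4    # ECX-1000 sockets per EnergyCard
    drives_per_card: int = 4   # SATA drives per EnergyDrive
    cores_per_node: int = 4    # assumed quad-core ECX-1000
    slots: int = 18

    def __post_init__(self) -> None:
        # Reject configurations that need more slots than the backplane has
        if self.energycards + self.energydrives > self.slots:
            raise ValueError("more cards than backplane slots")

    @property
    def nodes(self) -> int:
        return self.energycards * self.nodes_per_card

    @property
    def drives(self) -> int:
        return self.energydrives * self.drives_per_card

    @property
    def cores(self) -> int:
        return self.nodes * self.cores_per_node

# The Apache configuration: six EnergyCards and a dozen EnergyDrives
apache = ZincBackplane(energycards=6, energydrives=12)

if __name__ == "__main__":
    print(apache.nodes, apache.drives, apache.cores)
    print(apache.drives / apache.nodes, apache.drives / apache.cores)
```

The six-plus-twelve split uses all eighteen slots, so the Apache box is a fully populated single backplane.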

It will be interesting when GPU coprocessors plug into this puppy, which they can do since the EnergyCard and EnergyDrive interconnect is two PCI-Express slots placed end to end instead of side by side. SeaMicro, now part of AMD, and Calxeda alike are using this approach.

Calxeda is putting the switch on the processor SoC, and SeaMicro is putting it on a central ASIC outside of the processor. One wonders what kind of smoke would come out of the box if you plugged a Calxeda card into the SeaMicro backplane...

The Apache HTTP Server, Hadoop, and Cassandra projects are not the only ones getting access to the Zinc machine to use as a build server. The Derby, River, Tapestry, and Thrift projects are also getting in line, and so are Traffic Server and Jackrabbit.

Dell would be wise to work with Calxeda to forge similar machines for the Fedora and Ubuntu Linux projects as well, and why not be nice to openSUSE and Debian while you are at it. El Reg is sure Dell and Calxeda can scrape together four more machines.

Moreover, it probably makes sense to give some Copper machines to all of these organizations, too, and to make sure some Zinc machines are in the Dell solution centers.

That is not the plan, according to Schulke, but it should be. And what the hell, send one up to Redmond, too, so they get the message that Windows Server 2012 R2 should be ported to ARM servers, too. ®