
It's a net neutrality whodunnit: Boffins devise way to detect who's throttling transit

Simple, really – follow the congested links

Back when net neutrality was a thing, engineers at the Center for Applied Internet Data Analysis (CAIDA) tested US interdomain links, and found them mostly flowing freely.

News of Verizon throttling a California fire department's data suggests things have already changed in America, but if CAIDA's work gets legs, the world will at least have a new way to detect that kind of behaviour.

Published this month after winning the ACM's SIGCOMM 2018 "best paper" accolade, "Inferring Persistent Interdomain Congestion" offers an architecture for what its 10 authors called "large-scale third-party monitoring of the Internet interconnection ecosystem".

When the group gathered its data between March 2016 and December 2017, its focus was on how disputes between different players – ISPs, content providers, clouds, and transit providers – could end up causing throttled links and degraded performance for end users.

As the paper stated: "There is a dearth of publicly available data that can shed light on interconnection issues." So even though an interconnection dispute, throttled links, or even a misconfiguration can affect people far beyond the link in question, the researchers noted that "limited data is available to regulators and researchers to increase transparency and empirical grounding of debate".

Instead, it becomes a matter of accusation and counter-accusation in the tech press (such as when Netflix went public against AT&T in 2014, before the two inked a peering agreement).

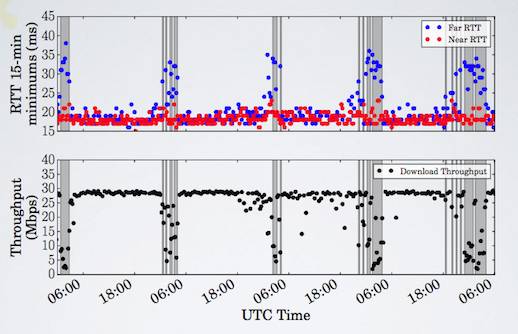

Rising ping times, a sign of congestion, correlated well with falling throughput in CAIDA's research

With probes at 86 vantage points in 47 ISPs, CAIDA's boffins created "a system that conducts ongoing congestion measurements of thousands of interdomain links", the paper said. The vantage points were fitted with "Time Series Latency Probes" (TSLP), whose results, the paper said, were "consistent with those obtained from more invasive active measurements (loss rate, throughput, and video streaming performance)".

That data was validated by the operators themselves, CAIDA's team said.

The TSLP approach is simplicity itself: as congestion rises, packets get buffered, latency rises – and CAIDA's deep knowledge of the underlying network topology (built into another tool to identify which links to probe) made it possible to infer where the latency, and therefore congestion, occurred.
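To put rough numbers on "packets get buffered, latency rises": the extra delay a packet sees is roughly the amount of data queued ahead of it divided by the rate at which the link drains. The sketch below is only an illustration of that arithmetic; the 10 Gbit/s link and the buffer sizes are our own hypothetical figures, not numbers from the paper.

```python
def queueing_delay_ms(queued_bytes: int, link_rate_bps: float) -> float:
    """Approximate extra delay (ms) for a packet that arrives behind
    `queued_bytes` of data on a link draining at `link_rate_bps` bits/s."""
    if link_rate_bps <= 0:
        raise ValueError("link rate must be positive")
    return queued_bytes * 8 / link_rate_bps * 1000


if __name__ == "__main__":
    # Hypothetical 10 Gbit/s interconnect: an empty queue adds nothing,
    # 1.25 MB queued adds about 1 ms, and 12.5 MB queued adds about 10 ms.
    for queued in (0, 1_250_000, 12_500_000):
        delay = queueing_delay_ms(queued, 10e9)
        print(f"{queued:>11,} bytes queued -> {delay:.1f} ms")
```

A link that is persistently full therefore shows up as a persistently raised round-trip time, which is what a latency probe can see from outside.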

"If the latency to the far end of the link is elevated but that to the near end is not, then a possible cause of the increased latency is congestion at the interdomain link," the paper said.
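The near-end/far-end comparison can be sketched in a few lines. This is a simplified illustration under our own assumptions, not CAIDA's code: each end's baseline is taken as the lowest round-trip time seen, a sample counts as "elevated" if it sits more than a hypothetical 10 ms above that baseline, and only samples where the far end is elevated while the near end is not get flagged.

```python
from dataclasses import dataclass


@dataclass
class RttSample:
    timestamp: int   # seconds
    near_ms: float   # RTT to the router on our side of the interdomain link
    far_ms: float    # RTT to the router on the neighbour's side


def flag_far_end_congestion(samples, threshold_ms=10.0):
    """Return timestamps where far-end RTT is elevated above its baseline
    but near-end RTT is not. Baselines are the minimum RTT for each end;
    the threshold is an illustrative assumption, not a value from the paper."""
    if not samples:
        return []
    near_base = min(s.near_ms for s in samples)
    far_base = min(s.far_ms for s in samples)
    flagged = []
    for s in samples:
        near_elevated = s.near_ms - near_base > threshold_ms
        far_elevated = s.far_ms - far_base > threshold_ms
        if far_elevated and not near_elevated:
            flagged.append(s.timestamp)
    return flagged


if __name__ == "__main__":
    # Hypothetical samples taken every five minutes.
    series = [
        RttSample(0, 5.1, 6.0),
        RttSample(300, 5.3, 6.2),
        RttSample(600, 5.2, 31.5),   # far end up, near end flat: flagged
        RttSample(900, 24.0, 40.0),  # near end up too: not pinned on this link
        RttSample(1200, 5.0, 6.1),
    ]
    print(flag_far_end_congestion(series))  # [600]
```

The sample at 900 seconds shows why both ends are probed: when the near end is slow as well, the delay is building up before the interdomain link, so it should not be blamed on it.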

The same probes also measured packet loss: when a link is badly congested, the router's queue fills up and packets are dropped (*CAIDA's Amogh Dhamdhere e-mailed us with a more accurate, and more detailed, description, added below).

A presentation slide deck for the paper is available (PDF). CAIDA's analysis scripts, data sets (via a visualisation interface), and query API are available on request from manic-info@caida.org, and the organisation hopes ISPs will volunteer to help gather data. ®

*Correction: The Register slipped up in summarising the methodology, and Amogh Dhamdhere, a co-author of the paper, has provided a more detailed summary via e-mail.

"The TSLP technique uses the same probing method to measure both latency and packet loss. The method consists of sending probes towards a destination and setting the Time To Live (TTL) of the packets to expire along the path, such that the packets expire at the two ends of the interdomain link we are measuring. We deliberately set the TTLs in this way to get responses from the routers at the endpoints of the interdomain link. We can then use those responses to measure latency and packet loss. The losses we measure are not due to the fact that the TTL expires, but rather because the queue at the router is full.

"The difference between latency and packet loss measurements is that our latency measurements are done at a very low frequency (once every 5 minutes) while the loss rate measurements are done at a higher frequency (once a second)."
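As a rough sketch of the two pieces Dhamdhere describes (TTL-limited probes aimed at the two ends of a link, and a loss rate computed from once-a-second probes), the code below assumes we already know, for example from a traceroute, the hop number of the near-end router, and that the far-end router is the very next hop. The function names and the "reply or no reply" representation are our own hypothetical choices, not CAIDA's implementation.

```python
from typing import Optional, Sequence


def probe_ttls(near_end_hop: int) -> tuple[int, int]:
    """TTL values that make probes expire at the near and far routers of an
    interdomain link, assuming the far end is the hop after the near end.
    A probe with TTL = n expires at hop n, which replies with ICMP Time Exceeded."""
    if near_end_hop < 1:
        raise ValueError("hop numbers start at 1")
    return near_end_hop, near_end_hop + 1


def loss_rate(replies: Sequence[Optional[float]]) -> float:
    """Fraction of probes with no reply. Each entry is an RTT in ms,
    or None if no reply came back for that probe."""
    if not replies:
        raise ValueError("no probes sent")
    lost = sum(1 for r in replies if r is None)
    return lost / len(replies)


if __name__ == "__main__":
    near_ttl, far_ttl = probe_ttls(near_end_hop=7)
    print(near_ttl, far_ttl)  # 7 8

    # Hypothetical minute of once-a-second probes to the far end: 3 of 60 unanswered.
    far_replies = [12.0] * 57 + [None] * 3
    print(f"far-end loss: {loss_rate(far_replies):.1%}")  # far-end loss: 5.0%
```

In practice a missing reply is not always a dropped probe, since routers can also rate-limit the ICMP messages they send back, which is one reason a real system would compare near-end and far-end loss rather than read either in isolation.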