
Train ImageNet for $40 in 18 mins, a robot that can play Where's Wally? etc

Your quick summary of AI news from this week

Roundup Hello, here are a few bits of AI news for the weekend. You don't always need a ton of cash to buy a wad of GPUs to train your models super quickly. You can do it pretty cheaply on cloud platforms. There's also a robot that can play Where's Waldo (Wally in the UK), and Microsoft's computer that is trying to tell if you've found a joke funny.

Public code for training ImageNet super quickly: A group of engineers has managed to train a model on ImageNet to 93 per cent accuracy in 18 minutes, using hardware rented on public cloud platforms for just $40.

It’s not the fastest time on record for training a convolutional neural network on ImageNet, one of the most popular datasets for image classification. The record is four minutes, and is held by researchers from Tencent and Hong Kong Baptist University.

Speeding up the training process requires pumping up the batch size and flinging a huge number of GPUs at the problem. Zipping through ImageNet in four minutes required 1,024 Nvidia P40 GPUs, which is not affordable unless you’re a giant tech conglomerate with deep pockets.

But a team of engineers has made the prospect a lot more affordable. It includes Jeremy Howard, co-founder of Fast.ai; Andrew Shaw, an engineer who took part in the DawnBench competition; and Yaroslav Bulatov, a researcher at the Defense Innovation Unit Experimental, a US Department of Defense organization.

They rented 16 public AWS cloud instances, each with 8 Nvidia V100 GPUs (128 GPUs in total), and employed a few clever software tricks, such as training on small images at first before introducing larger ones.

“That way, when the model is very inaccurate early on, it can quickly see lots of images and make rapid progress, and later in training it can see larger images to learn about more fine-grained distinctions,” Howard explained in a blog post.

“DIU and fast.ai will be releasing software to allow anyone to easily train and monitor their own distributed models on AWS, using the best practices developed in this project,” he added.
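The small-images-first trick can be pictured as a simple schedule that maps each training epoch to an image size. The snippet below is only a minimal sketch of that idea: the function name, the epoch boundaries, and the image sizes are illustrative assumptions, not the team's actual settings or code.

```python
def image_size_for_epoch(epoch, schedule):
    """Return the image side length (in pixels) to use at a given epoch.

    `schedule` is a list of (start_epoch, size) pairs sorted by start_epoch.
    The last entry whose start_epoch is <= epoch wins.
    """
    size = schedule[0][1]
    for start_epoch, candidate in schedule:
        if epoch >= start_epoch:
            size = candidate
    return size


# Hypothetical schedule: small images early on, larger ones later.
# These numbers are made up for illustration.
SCHEDULE = [(0, 128), (15, 224), (30, 288)]

if __name__ == "__main__":
    for epoch in (0, 14, 15, 29, 30):
        print(epoch, image_size_for_epoch(epoch, SCHEDULE))
```

In a real training loop, the data loader would be rebuilt with the new size whenever the schedule moves to its next stage.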

You can read more about it here.

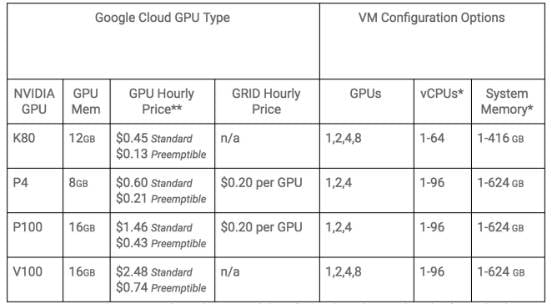

Nvidia’s Tesla P4 on Google Cloud: Keeping to the topic of cloud platforms, Google Cloud announced it was now hosting Nvidia’s P4 GPUs on its service.

The P4 is not as advanced as the P100 or V100, so it’s a cheaper option for those training or running smaller models, or for those who don’t mind waiting longer.

The GPUs are now available in a few select zones, including US Central, US East, and Europe West.

You can read more about it here.

Can AI detect a good joke from laughter? Microsoft has installed an exhibit at the National Comedy Center in New York that uses its Face API to detect whether someone has laughed at a joke.

Visitors step up to a screen to enter the Laugh Battle. The machines contain a range of pre-installed jokes written by human comedians – AI still sucks at humour. Players take turns choosing the jokes, and the winner is whoever makes the other person smile or laugh the most over six rounds.

You can see a video demo below.

Microsoft’s Face API is used to judge whether someone has scored a point or not by scanning people’s faces. The system uses a convolutional neural network that has been trained on over 100,000 faces for sentiment analysis. The pictures are labelled with emotions, such as happiness, sadness, anger, contempt, disgust, fear, neutral and surprise.

“Across cultures, people smile the same way, they get angry the same way, they show disgust the same way,” said Cornelia Carapcea, a principal program manager on the Cognitive Services team.

“If somebody is smiling or frowning, we can detect that and we give back a score for each emotion,” she explained. “It is not like we see a face and we say ‘happy,’ we see a face and say ‘oh, we think happy is maybe 60 percent.’ If the person is also doing more of a Mona Lisa smile we might have happy 60 percent and sad 40 percent.”
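To make the "score for each emotion" idea concrete, here is a rough sketch of how per-emotion scores might be turned into a point in a game like this. It works on a plain dictionary and does not call Microsoft's service. The function names, the dictionary layout, and the 0.5 threshold are all assumptions made for illustration, and the exhibit's real scoring rule is not described.

```python
def dominant_emotion(scores):
    """Return the (emotion, score) pair with the highest score."""
    emotion = max(scores, key=scores.get)
    return emotion, scores[emotion]


def scored_point(scores, threshold=0.5):
    """Hypothetical rule: a point is scored when 'happiness' reaches the threshold."""
    return scores.get("happiness", 0.0) >= threshold


# The "Mona Lisa smile" case from the quote: happy 60 percent, sad 40 percent.
mona_lisa = {"happiness": 0.6, "sadness": 0.4}

if __name__ == "__main__":
    print(dominant_emotion(mona_lisa))
    print(scored_point(mona_lisa))
```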

Robot can play Where's Waldo?: Developers have combined Google's AutoML image classification service with a robot arm so that it can point to Waldo in the popular picture game book, Where's Waldo?

Waldo is always hidden amongst illustrations filled with other people engaged in complex situations. Although he's always wearing his characteristic red and white stripy bobble hat and jumper and blue jeans, he's not easy to spot.

The aim of the game is to find Waldo as fast as possible by pointing to him. Now, a robot arm can play too, and it can spot Waldo in as little as 4.45 seconds. It's hooked up to Google's AutoML Vision service, which has been trained on Waldo's face. If the model reports at least a 95 per cent match to Waldo in a picture, the robot arm, which has a rubber hand hanging from it, will move to point at him.

The arm is controlled by a Raspberry Pi running Python.
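The 95 per cent rule boils down to filtering the model's candidate faces by confidence and pointing at the best one. The Python below is a minimal sketch of that step only. The detection format (a dict with a `score` and a bounding `box`) and the function name are assumptions for illustration, and it does not reflect the developers' actual code or the AutoML Vision response format.

```python
MATCH_THRESHOLD = 0.95  # the "at least a 95% match" rule from the article


def find_waldo(detections, threshold=MATCH_THRESHOLD):
    """Return the (x, y) centre of the best match at or above threshold, else None.

    Each detection is a hypothetical dict of the form
    {"score": float, "box": (x_min, y_min, x_max, y_max)}.
    """
    candidates = [d for d in detections if d["score"] >= threshold]
    if not candidates:
        return None
    best = max(candidates, key=lambda d: d["score"])
    x_min, y_min, x_max, y_max = best["box"]
    return ((x_min + x_max) / 2, (y_min + y_max) / 2)


if __name__ == "__main__":
    faces = [
        {"score": 0.97, "box": (10, 20, 30, 40)},
        {"score": 0.80, "box": (0, 0, 10, 10)},
    ]
    print(find_waldo(faces))
```

The returned coordinates would then be handed to whatever code drives the arm. If nothing clears the threshold, the function returns `None` and the arm stays put.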

You can see it in action below... ®