
Clap, damn you, clap! Samsung's Bixby 2.0 AI reveal is met with apathy

Maybe the audience needed the teleprompter?

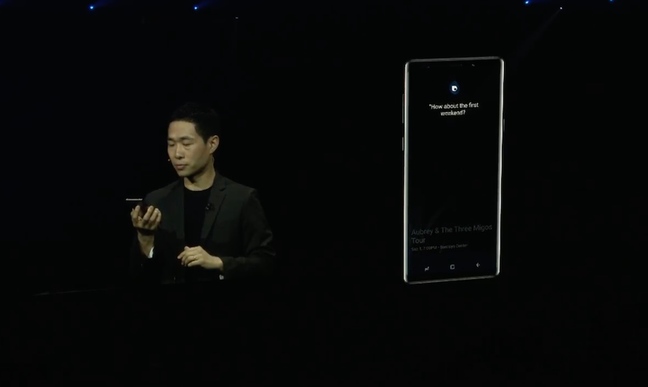

Comment When Samsung's veep of artificial intelligence strategy, the enigmatic Ji Soo Yi, demonstrated Bixby 2.0 at the chaebol's Galaxy event yesterday, he had to prompt the audience for applause. "You can clap," he urged the attendees after stunned silence met another new feature.

This earned instant derision on Twitter, but let's be honest: wowing people with practically useful AI-powered personal assistants isn't so easy in 2018. To paraphrase Samuel Johnson, the parts that are good are not interesting, and the parts that are interesting are not good.

Samsung has been said to be losing an "AI arms race", and Bixby 2.0 is an attempt to catch up. The received wisdom is that Google (and Facebook) have amassed so much personal data, and scraped so much useful contextual information from elsewhere, that their personal assistant AIs will always be smarter than anyone else's.

That's allied to another highly questionable piece o' received wisdom: that speech will become the dominant way we interact with applications and services*. Put these two Deep Thoughts together, and you can reach the conclusion that Google will own AI, and through it, the consumer electronics industry. Samsung has a broad range of white goods and premium consumer AV gear alongside its IT kit, like smartphones, so naturally it feels this threat keenly.

Always a great sign when the presenter needs to give the audience a prompt to applaud. That's the power of Bixby. #Unpacked #youcanclap

— Michael Fisher (@theMrMobile) August 9, 2018

But what if the received wisdom is wrong? What if quality of data matters more than the quantity? That seems to be what Samsung is betting on with Bixby 2.0. Despite a modest academic record, Mr Ji has been chosen to lead Bixby 2.0 development and when you see what they're doing, it doesn't appear to be a bad bet. Maybe what Samsung needs most right now is not a machine learning guru – and Mr Ji is no machine learning guru.

What drew mass apathy from the vast Galaxy event audience was Mr Ji finding a restaurant and ordering a taxi. It's quite excusable then, you might think. Another example was finding a "concert in Brooklyn over Labor Day weekend". These are things that standard probabilistic analysis, plus a little location information, ought to be able to help with. No AI magic required.

Mr Ji was quite proud that Bixby had sourced the information about the restaurant – and the gigs listing guide – without the user having to install Yelp or TripAdvisor, suggesting Samsung has been busy licensing content behind the scenes. I'll take an unfashionable view here and say that might be all it needs to create a better Bixby. You can see the demo here at 44:40.

There's a conundrum facing anyone trying to implement consumer AI assistants at the moment, which is that to show your software is "smart" means making it fundamentally intrusive and annoying.

Wow, OK, I get it, you have AI

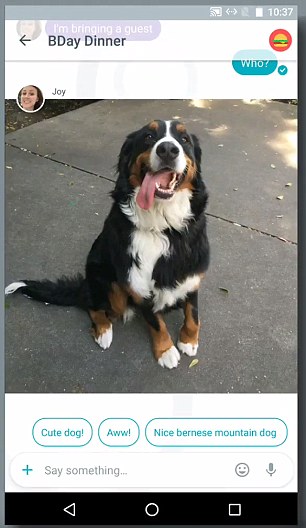

As an example, at some point in the next few days my Pixel 2 XL running Android 9 Pie will be laboriously reverted to the superior Android 8 Oreo. Pie breaks a few things, but only offers nagware in return: stuff that's on by default, designed to show that "machine learning has been implemented here". One example: canned replies to text messages from loved ones. No thanks, Google, you're being weird, creepy and intrusive.

Machine learning that optimises my application startup time and increases my battery life is fine by me, but the Clippy The Paperclip stuff isn't. It's worse than the original Clippy ever was, because it squirts itself into every minute of your waking day, not just when you're "trying to write a letter". And in 9 Pie, the weird intrusive stuff is all that's new, really.

Perhaps this stuff goes down well in Asian markets, but it runs into the issue that people guard every bit of privacy they have quite fiercely here. For example, last year Apple introduced a machine learning (no doubt) feature which advised you when to go to bed. I have the least rock 'n' roll lifestyle imaginable as a dad, and my bedtime doesn't vary much at all. But I'm damned if I'm having a robo-nanny telling me when that should be. Fuck right off.

Telling you what to say, but really showing off its image recognition skillz

So there's only so much "help" an AI personal assistant can give that's actually useful and welcomed. Anyone now attempting to build ML-powered advice into your life runs smack into Samuel Johnson's aphorism: the bits you'll create that are interesting just ain't any good.

There's a great irony here, of course, as neural networks helped speech recognition improve in leaps and bounds. It's the one undisputed area where the concept has been a practical boon. Speech recognition is now good in a way unimaginable a few years ago. But that's largely because recognising speech is a well-defined problem: there are a limited number of phonemes and a finite number of words they could represent (pioneer Tony Robinson has a lucid explanation of how this works here).

That's the part that technology companies need to get absolutely perfect. Mr Ji's first command to Bixby yesterday went unrecognised – it failed to understand him. They should forget about trying to anticipate us with "smart" tat, and get the basics right.

And maybe forget about wowing us at all with this stuff. Either that, or maybe in the future, equip members of the audience with their own teleprompter, "COOL FEATURE: CLAP". No more embarrassing silences. ®

*Bootnote

No, me neither. Because speaking a trivial command out loud is still too intrusive in both an office and a noisy family home. Perhaps not if you're Tony Stark or Larry Page.