
GitHub looses load-balancing open-source code on netops world

GLB Director keeps those packets humming even when new servers are added to pools

If you’ve got a big bare-metal data center, or if you’re just BM-curious, head on over to GitHub, where there’s a new load balancer on offer by, um, GitHub.

Like anybody handling a lot of incoming requests, GitHub needs a way to spread web and git traffic around its server warehouses, and in a blog post on Wednesday by Theo Julienne, Microsoft's code-hosting outfit explained why it decided to write its own load balancer.

Putting a bunch of machines behind a single IP address is a common enough trick. However, Julienne wrote that there’s a particular challenge for an organization like GitHub: network changes are frequent, and many load balancing technologies can break if there’s a change in the server lineup.

The GitHub Load Balancer Director, now open source, acts as an OSI layer-four balancer – that's the transport layer – and rather than trying to replace services such as HAProxy or Nginx, it puts a shield in front of them so they can scale across multiple machines without each host having a separate IP address.

The netops problem GitHub is trying to solve is this: the existing load balancing approaches it chose would drop connections on a change of server configuration. TCP is a stateful protocol; once a connection is assigned to one proxy in a load balancing config, only that proxy knows the state of the connection.

In a dynamic hosting environment, if a server is added to a pool, there’s no problem in it picking up new connections – but trying to reconfigure the pool without dropping existing connections is painful.

Take equal-cost multipath routing, which is one approach to load balancing inside a data center: if you decide you need three servers announcing an IP address instead of two, “connections must rebalance to maintain an equal balance of connections on each server,” Julienne wrote, and because traffic is routed to IP addresses, the new member of the pool may receive packets for connections it doesn’t know about, and those connections fail.
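To see why growing the pool hurts, here is a minimal Python sketch of the problem. It assumes the router picks a server by hashing the flow and taking the result modulo the pool size, which is a simplification: real routers use their own hash functions and some offer gentler schemes. The names `flow_hash`, `pick_server` and the `proxy-*` labels are made up for illustration.

```python
import hashlib

def flow_hash(flow: tuple) -> int:
    """Stable hash of a flow tuple (a stand-in for a router's ECMP hash)."""
    digest = hashlib.sha256(repr(flow).encode()).digest()
    return int.from_bytes(digest[:8], "big")

def pick_server(flow: tuple, servers: list) -> str:
    """Naive ECMP-style choice: hash the flow, take it modulo the pool size."""
    return servers[flow_hash(flow) % len(servers)]

# 1,000 hypothetical client flows: (source IP, source port, dest IP, dest port)
flows = [(f"10.0.0.{i % 250}", 40000 + i, "192.0.2.1", 443) for i in range(1000)]

before = {f: pick_server(f, ["proxy-a", "proxy-b"]) for f in flows}
after = {f: pick_server(f, ["proxy-a", "proxy-b", "proxy-c"]) for f in flows}

# Flows whose packets now land on a different server than the one holding their state
moved = [f for f in flows if before[f] != after[f]]
# Worse: flows shuffled between the two servers that were already in the pool
moved_between_old = [f for f in moved if after[f] != "proxy-c"]

print(f"{len(moved)} of {len(flows)} flows changed server")
print(f"{len(moved_between_old)} of those moved between existing servers")
```

Under this modulo assumption, well over half of the flows end up on a server that has never heard of them, including many that were never headed for the new machine at all.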

Another popular approach is to load balance across Linux virtual servers (LVS), and again, Julienne said GitHub ran into problems that made the approach unsuitable for its needs.

Proxy war

As with a router-based load balancing solution, he wrote, an LVS Director still needs to propagate state information around the network to reflect a configuration change. It “also still requires duplicate state – not only does each proxy need state for each connection in the Linux kernel network stack, but every LVS director also needs to store a mapping of connection to backend proxy server,” Julienne explained.

To get around these issues, the GitHub GLB Director doesn’t try to duplicate state. Instead, it relies on the proxy servers already in the network to handle TCP connection state, because that’s already part of their job.

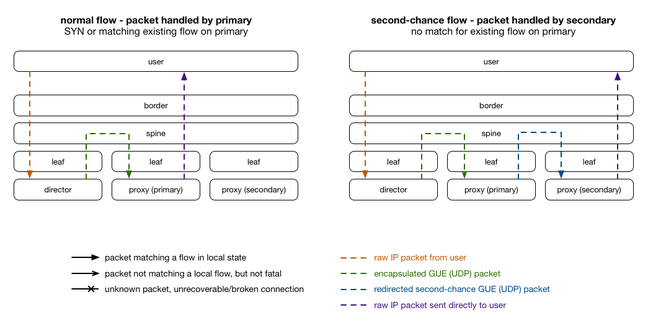

The GLB Director assigns each connection a primary server and a secondary server. When a new proxy is added to a pool, it becomes the primary for the connections it takes over, and the old primary becomes the secondary. “This allows existing flows to complete, because the proxy server can make the decisions with its local state, the single source of truth. Essentially this gives packets a ‘second chance’ at arriving at the expected server that holds their state,” he wrote.
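The "second chance" logic can be sketched in a few lines of Python. This is an illustrative model only, not GitHub's code: the `Proxy` class, its `handle` method and the flow tuples are hypothetical, and the real thing works on packets in the kernel rather than on Python objects.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Proxy:
    name: str
    connections: set = field(default_factory=set)  # local TCP state, the source of truth

    def handle(self, flow: tuple, is_syn: bool,
               secondary: Optional["Proxy"] = None) -> Optional[str]:
        """Return the name of the proxy that ends up serving the packet, or None if dropped."""
        if is_syn:
            # A new connection always starts on the proxy that received it.
            self.connections.add(flow)
            return self.name
        if flow in self.connections:
            # We hold the state for this flow, so we serve it.
            return self.name
        if secondary is not None:
            # Unknown flow: give the packet its second chance on the old primary.
            return secondary.handle(flow, is_syn)
        return None  # nobody knows this flow

old = Proxy("proxy-old")
new = Proxy("proxy-new")

existing_flow = ("203.0.113.7", 51000, "192.0.2.1", 443)
old.handle(existing_flow, is_syn=True)  # connection opened before the pool changed

# After the change, proxy-new is primary and proxy-old is secondary for this flow.
served_existing = new.handle(existing_flow, is_syn=False, secondary=old)

fresh_flow = ("203.0.113.8", 51001, "192.0.2.1", 443)
served_fresh = new.handle(fresh_flow, is_syn=True, secondary=old)

stray_flow = ("203.0.113.9", 51002, "192.0.2.1", 443)
served_stray = new.handle(stray_flow, is_syn=False, secondary=old)
```

In this model the established flow still reaches `proxy-old`, the fresh connection starts on `proxy-new`, and a packet that neither proxy recognizes is dropped.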

GLB Director is deployed between the routers (which think they’re sending packets to one host that’s announced an IP address) and the proxies actually handling the traffic. The director tier is stateless as far as the TCP flows are concerned, and as a result “director servers can come and go at any time, and will always pick the same primary/secondary server providing their forwarding tables match (but they rarely change).”

GLB Director gives packets a "second chance" to find their way home when the proxy pool changes (Source: GitHub)

An approach called Rendezvous Hashing manages the process of adding and removing proxies, making sure the “drain and fill” process is consistent when the load balancing environment changes.
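For the curious, textbook rendezvous (highest random weight) hashing looks roughly like the Python sketch below. It is a generic illustration rather than GLB Director's own table-building code, and the `score` and `primary_secondary` helpers, the `proxy-*` names and the flow keys are all assumptions. Each proxy gets a score per flow, and the two highest scorers become primary and secondary.

```python
import hashlib

def score(flow_key: str, proxy: str) -> int:
    """Deterministic pseudo-random weight for a (proxy, flow) pair."""
    digest = hashlib.sha256(f"{proxy}|{flow_key}".encode()).digest()
    return int.from_bytes(digest[:8], "big")

def primary_secondary(flow_key: str, proxies: list) -> tuple:
    """Rank proxies by score; the top two are the primary and the secondary."""
    ranked = sorted(proxies, key=lambda p: score(flow_key, p), reverse=True)
    return ranked[0], ranked[1]

pool = ["proxy-a", "proxy-b", "proxy-c"]
grown = pool + ["proxy-d"]  # a new proxy joins the pool

flow_keys = [f"198.51.100.{i % 200}:{50000 + i}" for i in range(200)]

taken_over = 0
for key in flow_keys:
    old_primary, _ = primary_secondary(key, pool)
    new_primary, new_secondary = primary_secondary(key, grown)
    if new_primary != old_primary:
        # The only way the primary can change is for the newcomer to outrank it,
        # which pushes the old primary down into the secondary slot.
        assert new_primary == "proxy-d" and new_secondary == old_primary
        taken_over += 1

print(f"{taken_over} of {len(flow_keys)} flows got proxy-d as their new primary")
```

Because every director computes the same scores from the same inputs, any of them picks the same pair without sharing state. Removing a proxy works the same way in reverse: flows that had it as primary fall through to their former secondary.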

Julienne added that Intel’s DPDK (Data Plane Development Kit) provides enough packet-processing grunt on bare metal that, even in complex load balancing environments, GLB Director can achieve 10Gbps line-rate processing. ®