
Decision time for AI: Sometimes accuracy is not your friend

When intuition lets you down, you're stuck between ROC and a hard place

Machine learning is about machines making decisions and, as we have already discussed, we can produce multiple models for any given problem and measure their accuracy. It is intuitively obvious that we would elect to use the most accurate model, and most of the time, of course, we do.

But there are times when we will actually elect to use one of the less accurate ones. The underlying reason is that the estimates we make of accuracy, whilst very useful, take no account of the cost of being right and being wrong.

So, just to recap the story so far. We might be trying to identify which of our customers on a clothing website are women and which men so that our recommendation engine makes the appropriate clothing suggestions. The model can be right about a given individual in two ways:

- accurately predict a female – we call this a True Positive

- accurately predict a male – True Negative

It can also be wrong in two ways:

- predict female when the customer is actually a male – False Positive

- predict male when the customer is actually a female – False Negative

Note that in this case we are assuming that an identification of female is considered positive. The logic works equally well (with the classes reversed) if we assume male is positive.

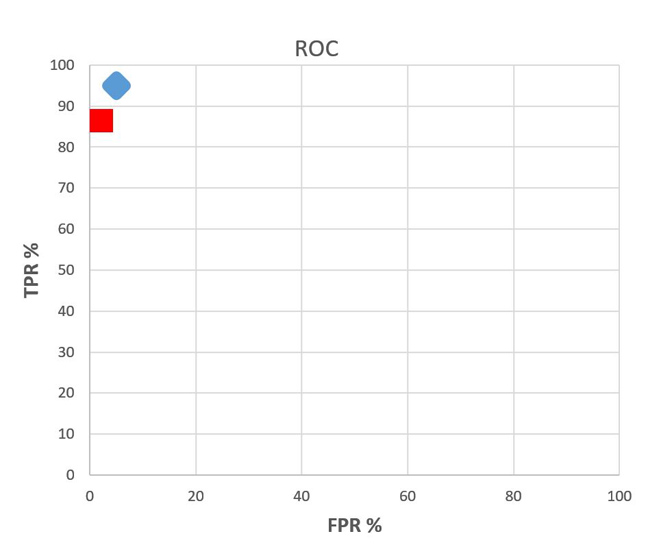

Now, suppose that we have two machine learning models. One gets 95 per cent of both the females and the males correct. The second one gets 97 per cent of the males correct but only 87 per cent of the females. In ROC* space terms the first one (blue) is nearer the top left-hand corner and therefore clearly better.
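For readers who want to check the geometry, here is a minimal Python sketch. It assumes female is the positive class, so the true positive rate (TPR) is the share of women correctly identified and the false positive rate (FPR) is the share of men wrongly labelled female. Measuring "nearness" as straight-line distance to the ideal corner (0, 1) is our assumption, and the function names are purely illustrative.

```python
import math

def roc_point(tpr, tnr):
    """Return the ROC coordinates (FPR, TPR), treating 'female' as positive."""
    return (1.0 - tnr, tpr)

def distance_to_top_left(point):
    """Straight-line distance from an ROC point (FPR, TPR) to the ideal corner (0, 1)."""
    fpr, tpr = point
    return math.hypot(fpr, 1.0 - tpr)

# Figures from the text. TPR = share of women correctly identified,
# TNR = share of men correctly identified.
blue = roc_point(tpr=0.95, tnr=0.95)    # 95% of both sexes correct
second = roc_point(tpr=0.87, tnr=0.97)  # 97% of men, 87% of women correct

for name, point in (("blue", blue), ("second", second)):
    fpr, tpr = point
    print(f"{name}: FPR={fpr:.2f}, TPR={tpr:.2f}, "
          f"distance to corner={distance_to_top_left(point):.3f}")
```

With these figures the first model sits about 0.071 from the corner and the second about 0.133, which matches the picture.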

But suppose – and this is merely supposition – most men will decide not to shop with you anymore if your algorithm incorrectly identifies them as women and recommends women's clothes to them. Let's say when your system makes the same mistake in reverse for women, they mostly stay loyal customers.

So, in this case, the cost of a false positive far outweighs that of a false negative, and we may well favour the second algorithm even though it is less accurate overall.
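To see how costs can flip the choice, the sketch below computes the expected cost of errors per customer. The 50/50 split between women and men and the cost figures (a false positive costing ten times as much as a false negative) are hypothetical assumptions, not numbers from this article, and `expected_cost` is an illustrative helper.

```python
def expected_cost(tpr, tnr, p_female, cost_fp, cost_fn):
    """Expected misclassification cost per customer.

    Female is the positive class, so a false positive is a man predicted
    female and a false negative is a woman predicted male.
    """
    p_male = 1.0 - p_female
    fp_rate = 1.0 - tnr   # share of men wrongly labelled female
    fn_rate = 1.0 - tpr   # share of women wrongly labelled male
    return p_male * fp_rate * cost_fp + p_female * fn_rate * cost_fn

# Hypothetical assumptions: half the customers are women, and losing a man
# (false positive) costs ten times as much as irritating a woman (false negative).
P_FEMALE = 0.5
COST_FP, COST_FN = 10.0, 1.0

blue = expected_cost(0.95, 0.95, P_FEMALE, COST_FP, COST_FN)
second = expected_cost(0.87, 0.97, P_FEMALE, COST_FP, COST_FN)
print(f"blue: {blue:.3f}, second: {second:.3f}")
```

Under these assumptions the second model costs about 0.215 per customer against 0.275 for the first. With a 50/50 split the gap between them works out at 0.01 × COST_FP − 0.04 × COST_FN, so the second model is cheaper whenever a false positive costs more than four times as much as a false negative.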

Now, trying to protect the fragile egos of some males is all very well but, as the stakes are raised, we have more and more reason to take account of the differing costs of false negatives and positives.

Sick algorithms! Well, quite

For example, suppose our algorithm is looking at a vast amount of data and deciding whether a person has a disease. A false positive (the person is actually fine but the algorithm says that they have the disease) may result in a wasted course of treatment that costs £50. A false negative (the person is infected but the algorithm says they are fine) may result not only in that person's potential death but also in, say, 100 more cases as that person spreads the infection.

And even if our algorithm is 98 per cent efficient, two of those 100 new cases will not be spotted, and they will infect another 200, and so on. Looks like we’ve got ourselves an epidemic, and all because the "costs" of false positives and false negatives are wildly unequal.
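The arithmetic of that runaway can be sketched in a few lines of Python. It is a deliberately crude, hypothetical model: every missed case infects exactly 100 people, every new case is screened by the algorithm, "98 per cent efficient" is read as catching 98 per cent of infected people, and caught cases infect nobody else. `missed_case_chain` is an illustrative name, not part of any library.

```python
def missed_case_chain(sensitivity, infections_per_miss, generations, initial_missed=1.0):
    """New cases per generation when only missed (false-negative) cases spread.

    Hypothetical model: each missed case infects a fixed number of people,
    every new case is screened, and caught cases infect nobody else.
    """
    new_cases_per_generation = []
    missed = initial_missed
    for _ in range(generations):
        new_cases = missed * infections_per_miss   # people infected by the missed cases
        new_cases_per_generation.append(new_cases)
        missed = new_cases * (1.0 - sensitivity)  # the share the algorithm fails to spot
    return new_cases_per_generation

chain = missed_case_chain(sensitivity=0.98, infections_per_miss=100, generations=4)
print([round(c) for c in chain])
```

Each generation is multiplied by (1 − 0.98) × 100 = 2, so under these assumptions new cases double every round: 100, 200, 400, 800. Any combination of detection rate and infections per missed case that keeps that factor above 1 keeps the outbreak growing.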

So, one thing to bear in mind is that ROC space (brilliant though it is) takes no account of the cost of the two types of error that we have.

We also have to bear in mind that the efficiency of the algorithm is not the same as the probability that any particular prediction it makes is correct.

Imagine the following scenario. We are trying to catch fraudulent insurance claims. We develop, train and test an algorithm and it turns out to be 99 per cent efficient. That efficiency is symmetrical, by which I mean that it correctly identifies fraudulent claims 99 per cent of the time and also spots honest claims correctly 99 per cent of the time.

So we go live with the algorithm and start feeding the new claims into it as they arrive. We know from other work that only one claim in a thousand is fraudulent, so most of the claims are flagged as honest. We wait patiently and, after three days, a claim is flagged to us as fraudulent. The crucial question is: "What are the chances that the claim is actually fraudulent?"

The obvious answer is clearly 99 per cent, and equally clearly that is going to be the wrong answer, otherwise I wouldn’t have raised the question. The correct answer is that the claim has only about a 9 per cent chance of being fraudulent, not 99 per cent. At this point you may want to stop reading and work this out for yourself.

One easy way is simply to start with, say, 1,000,000 claims and work through the problem. How many of those 1,000,000 are fraudulent (given that one in 1,000 are fraudulent) and how many honest? How many of each group are correctly and incorrectly classified? How many of those that are classified as fraudulent actually are?

OK, we start with 1,000,000 claims. We know that one in 1,000 are fraudulent, so 1,000 are fraudulent and 999,000 are honest.

Let’s focus first on the 1,000 fraudulent ones. The algorithm is 99 per cent accurate so 990 are correctly classified – they are fraud and the algorithm correctly nails them (true positives). Ten are fraudulent but the algorithm says they are honest (false negatives).

Now the 999,000 honest claims. Ninety-nine per cent (which is 989,010) are correctly classified – they are honest and the algorithm agrees (true negatives). One per cent (which is 9,990) are honest but the algorithm says they are fraudulent (false positives).

We are only interested in the ones that are identified by the algorithm as fraudulent. Out of 1,000,000 claims the algorithm identifies 990 + 9,990 = 10,980 as fraudulent. But note that, of these, only 990 (approximately 9 per cent) are actually fraudulent.
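The same counting argument can be written as a short Python sketch. The function `flagged_breakdown` is a hypothetical helper; it treats "99 per cent efficient" as both a 99 per cent true positive rate (sensitivity) and a 99 per cent true negative rate (specificity), which is how the symmetrical algorithm in this example is described.

```python
def flagged_breakdown(n_claims, fraud_rate, sensitivity, specificity):
    """Count-based walk-through of the fraud example.

    sensitivity: share of fraudulent claims flagged as fraud (true positive rate)
    specificity: share of honest claims passed as honest (true negative rate)
    """
    fraudulent = n_claims * fraud_rate
    honest = n_claims - fraudulent
    true_pos = fraudulent * sensitivity   # fraud, and flagged as fraud
    false_neg = fraudulent - true_pos     # fraud, but passed as honest
    true_neg = honest * specificity       # honest, and passed as honest
    false_pos = honest - true_neg         # honest, but flagged as fraud
    flagged = true_pos + false_pos
    return {
        "true_pos": true_pos,
        "false_neg": false_neg,
        "true_neg": true_neg,
        "false_pos": false_pos,
        "flagged": flagged,
        "p_fraud_given_flag": true_pos / flagged,
    }

result = flagged_breakdown(n_claims=1_000_000, fraud_rate=1 / 1000,
                           sensitivity=0.99, specificity=0.99)
print(f"flagged as fraudulent: {result['flagged']:,.0f}")
print(f"actually fraudulent:   {result['true_pos']:,.0f}")
print(f"P(fraud | flagged):    {result['p_fraud_given_flag']:.1%}")
```

It reports 10,980 flagged claims, of which 990 are fraudulent, giving roughly 9 per cent. Changing `fraud_rate` is an easy way to explore the brainteasers at the end of this piece.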

So, back to our three-day wait and the first claim that is flagged as fraudulent. It actually only has about a 9 per cent chance of being dishonest, despite the fact that the algorithm is 99 per cent accurate.

This seems counterintuitive at first, but it is correct. And if you really want to work in machine learning, it is important not just to be able to solve this single problem, but to get a feel for how and why it works.
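For those who prefer algebra, the counting argument above is an application of Bayes' theorem. Writing $p$ for the share of claims that are fraudulent (here 0.001) and using the symmetrical 99 per cent figures for both kinds of correct classification:

$$
P(\text{fraud} \mid \text{flagged})
= \frac{P(\text{flagged} \mid \text{fraud})\, p}{P(\text{flagged} \mid \text{fraud})\, p + P(\text{flagged} \mid \text{honest})\,(1 - p)}
= \frac{0.99 \times 0.001}{0.99 \times 0.001 + 0.01 \times 0.999}
= \frac{0.00099}{0.01098} \approx 0.09
$$

Because $p$ appears in every term, the answer moves as soon as the fraud rate does.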

So, here are a couple more brainteasers to help you towards a zen understanding of the problem. In this case, we intuitively expect 99 per cent because that is the efficiency of the algorithm, but instead we get 9 per cent.

What is the factor that makes the number we get different from the expected?

Answer: it is the probability of any one claim being fraudulent (the base rate), which in this case is one in 1,000. If you vary that number, the probability that a flagged claim is actually fraudulent rises or falls with it. Next question: what probability of any one claim being fraudulent WILL give us the expected answer of 99 per cent? I’ll leave that with you. ®

* Receiver Operating Characteristic (ROC) curve – a plot of true positive rate versus false positive rate (FPR) at various threshold settings.

We'll be examining machine learning, artificial intelligence, and data analytics, and what they mean for you, at Minds Mastering Machines in London, between October 15 and 17. Head to the website for the full agenda and ticket information.