The only way is Ethics: UK Lords fret about AI 'moral panic'

Oh, and look out for data monopolies, peers warn

The House of Lords wants to make sure data used by AI systems is not monopolised and that the technology is developed according to ethical guidelines.

That’s not impossible, Lord Tim Clement-Jones, chair of the Lords’ Select Committee on Artificial Intelligence, told us.

“We looked to see where it had been done well and done badly. GM Foods is where it was done badly, and human embryo research is where it has been done well.”

The report was designed “to avoid a moral panic,” Clement-Jones told us.

The Committee gathered oral evidence from over 200 witnesses - including “Captain Cyborg”, Professor Kevin Warwick, and a witness from The Register - last year. The report avoided the boosterism associated with often overheated media reporting of AI, and sensibly steered clear of making sweeping predictions about effects on the job market caused by automation. In fact, such claims are contested, it noted.

“The representation of artificial intelligence in popular culture is light-years away from the often more complex and mundane reality,” the Report stated. Researchers and academics were a bit fed up with this, they told the Select Committee. The report also noted that, thanks to the media, the public “were concentrating attention on threats which are still remote, such as the possibility of "superintelligent" artificial general intelligence, while distracting attention away from more immediate risks and problems”.

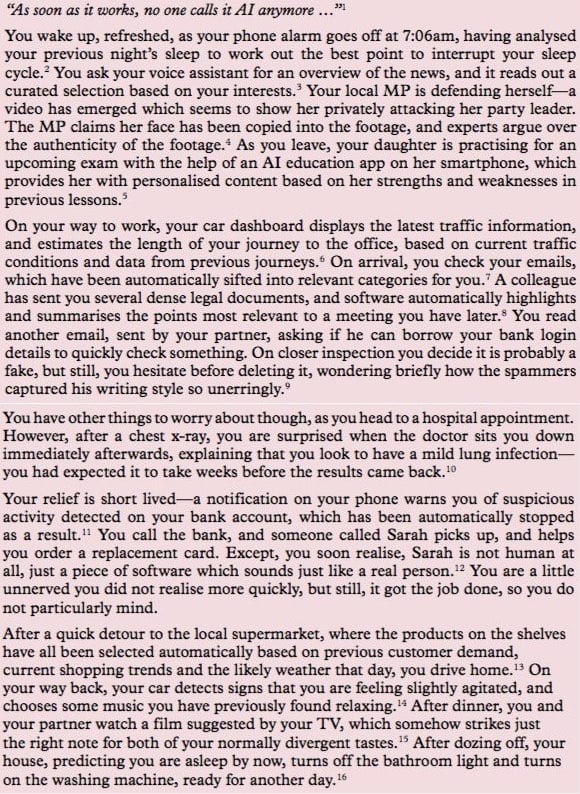

Elon Musk, the late Professor Stephen Hawking and Professor Nick “simulation hypothesis” Bostrom were guilty of regularly chiming in with doomsday scares. The Lords painted a near-future scenario that they want to avoid: where decisions are taken on people’s behalf.

It’s notable how much of this - phishing and recommendations - has already used statistical techniques, without "AI". The report noted that when it comes to liability for autonomous systems, the lawyers can’t agree. Some think AIs should be given legal personhood, others don’t. The Committee recommended the Law Commission get stuck in to “provide clarity”.

The Lords also want a role for the Competition and Markets Authority to ensure data isn’t hogged by five big companies.

“We call on the Government, with the Competition and Markets Authority, to review proactively the use and potential monopolisation of data by big technology companies operating in the UK.”

There’s a risk five companies will simply “maintain their hegemony”. The Lords also envisage an active, post-Brexit role for the Information Commissioner. “We don’t want people to wake up to situations where the use of the data has been unfair or biased,” the Chairman of the Committee told us.

In terms of the “Fourth Industrial Revolution”, a sweeping restructuring of employment predicted by futurologists, the Lords noted that “the economic impact of AI in the UK could be profound”.

The UK has a poor productivity story - largely due to a shift to a more services-based economy - and has seen no productivity growth since 2008. Here they found that important sectors, specifically many SMEs and the NHS, are failing to pocket the benefits of IT, never mind AI. The NHS is the largest purchaser of fax machines in the world. Therefore, the Lords said, it’s just as important to improve broadband.

“The NHS does not have a joined up approach to data, how it’s used, or licensed or shared,” Clement-Jones told us.

The report noted that AI is a “boom and bust field”, and that the last time the Government chucked money at AI in the Expert Systems era, it didn’t end well. The rule-based AIs were characterised by “high costs, requirements for frequent and time-consuming updates, and a tendency to become less useful and accurate as more rules were added”. The equivalent of a billion quid was invested in AI in the 1980s, with the taxpayer chipping in the equivalent of £200m. We haven’t quite reached that stage yet.

The Lords’ enquiry began in the heat of the hype about AI last autumn, since when the ardour has cooled somewhat. Professor Geoffrey Hinton’s qualms about techniques reaching their limit are noted. Early this year neuroscientist, author and entrepreneur Gary Marcus made some pointed criticism in his paper Deep Learning: A Critical Appraisal.

In December, Monsanto had reportedly attempted 50 deep learning experiments and experienced a “95 per cent failure rate”. That means at least 47 failed completely, and one half worked.

You can find the report here (PDF). ®

Bootnote

Last October I gave oral evidence to the Committee in a session on the media and AI. The main message was that hopes of radical improvements in industrial automation may be misplaced, as machine learning didn't do a lot to enhance robotics, and great results in microworlds or games didn't necessarily translate to real-world progress: something acknowledged by the experts as Moravec's Paradox. By conflating driverless cars with AI, the media may have raised expectations far beyond what the technology can achieve. An unscripted digression did make it in:

This was prompted by the thought that if you had twenty minutes in which to place some new knowledge inside Donald Trump's brain (that his brain would somehow miraculously retain), the world would be safer if that knowledge was some history, say, rather than some ML technique.