Facebook opens up Big Basin Volta plans to share the server wealth

AI hardware plans up for grabs

Facebook has released the design of Big Basin v2, its updated GPU-powered server, through the Open Compute Project.

The OCP was set up by a community of engineers trying to build efficient servers, storage and data centers by openly sharing intellectual property. Companies such as Nokia, Intel, Cisco, Lenovo, Apple and Google are also part of the project.

Big Basin v2 keeps the same basic structure as the original Big Basin, but has been upgraded with eight of Nvidia’s latest Tesla V100 graphics cards. It uses the Tioga Pass CPU server as its head node, and the PCIe bandwidth used to shuttle data between the CPUs and GPUs has been doubled.

In a blog post, Facebook said it has seen a 66 per cent increase in single-GPU performance compared with the original Big Basin design. The upgrade also increased the bandwidth available to the OCP network card.

This means Facebook’s researchers and engineers can build larger machine learning models and train and deploy them more efficiently. Facebook monitors a user’s interactions to predict what that user will want to see.

Machine learning is used to rank Facebook’s news feed, personalize adverts, and power search, language translation and speech recognition. It even suggests which friends to tag in uploaded photos.

Most of Facebook’s machine learning pipelines run through FBLearner, the company’s AI software platform.

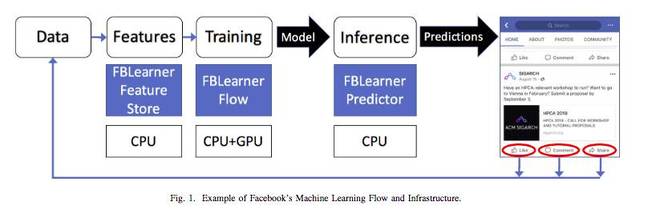

Image of the data pipeline for Facebook's machine learning infrastructure. Image credit: Facebook Inc.

FBLearner is split into three components: Feature Store, Flow and Predictor.

“Feature Store generates features from the data and feeds them into FBLearner Flow. Flow is used to build, train, and evaluate machine learning models based on the generated features,” Facebook explained.

"The final trained models are then deployed to production via FBLearner Predictor. Predictor makes inferences or predictions on live traffic. For example, it predicts which stories, posts, or photos someone might care about most."
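To make that three-stage flow concrete, here is a toy Python sketch. The function names, the smoothed click-through-rate "model" and the sample data are all hypothetical illustrations, not FBLearner's actual API; real Flow workflows train far larger models on GPU servers such as Big Basin.

```python
from dataclasses import dataclass

# Hypothetical toy stand-ins for FBLearner's three stages; NOT Facebook's API.

@dataclass
class Interaction:
    user: str
    topic: str
    clicked: bool

def feature_store(interactions):
    """Feature Store stage: turn raw interactions into (clicks, views) per (user, topic)."""
    features = {}
    for it in interactions:
        clicks, views = features.get((it.user, it.topic), (0, 0))
        features[(it.user, it.topic)] = (clicks + int(it.clicked), views + 1)
    return features

def flow_train(features, prior_clicks=1, prior_views=2):
    """Flow stage: 'train' a model, here just a smoothed click-through rate per (user, topic)."""
    return {key: (c + prior_clicks) / (v + prior_views) for key, (c, v) in features.items()}

def predictor(model, user, candidate_topics, default=0.5):
    """Predictor stage: rank candidate topics for a user by predicted click-through rate."""
    return sorted(candidate_topics, key=lambda t: model.get((user, t), default), reverse=True)

if __name__ == "__main__":
    log = [
        Interaction("alice", "cats", True),
        Interaction("alice", "cats", True),
        Interaction("alice", "politics", False),
        Interaction("alice", "politics", False),
    ]
    model = flow_train(feature_store(log))
    print(predictor(model, "alice", ["politics", "sports", "cats"]))  # ['cats', 'sports', 'politics']
```

The unseen topic ("sports") falls back to a neutral default score, which is one simple way a predictor can handle items it has no features for.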

It’s interesting to see the hardware blueprints used to power a major company’s AI and machine learning efforts. Sharing them makes sense for Facebook, as it isn’t really competing in the AI cloud business. Tech giants that are, like Google or Amazon, probably won’t be as forthcoming about their systems.

“We believe that collaborating in the open helps foster innovation for future designs and will allow us to build more complex AI systems that will ultimately power more immersive Facebook experiences,” it said. ®