
FYI: AI tools can unmask anonymous coders from their binary executables

To ensure privacy, stay offline, don't maintain public repos that trace back to you

Talk about the ultimate Git Blame.

Programmers can potentially be identified by AI-powered tools from the low-level machine-code instructions in their software executables.

That's according to boffins from Princeton University, Shiftleft, Drexel University, Sophos, and Braunschweig University of Technology, who have described how stylometry can be applied to binary files.

That's kinda bad news for people who wish to develop software, such as privacy-protecting apps, anonymously, because the technology could be used to unmask them. It's also kinda good news for crimefighters trying to identify malware authors.

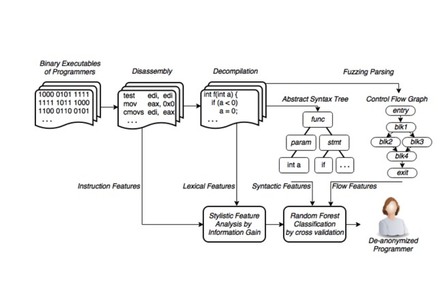

Essentially, the machine code can be decompiled back into a C-like language, and then traced back to known programmers using machine-learning algorithms.

Forensics

Source code stylometry – analyzing the syntax of source code for clues about the author – is an established technique used in digital forensics.

As the US Army Research Laboratory (ARL) puts it, "Stylometry research has proven that anonymous code contributors can be de-anonymized to reveal the original author, provided the author has published code before."

The technique can help identify virus makers as well as unmask the creators of anti-censorship tools and other outlawed programs. It has the potential to pierce the privacy that many programmers assume they have.

Source code is designed to be human-readable, but binaries – typically produced by compiling or assembling source code – have fewer characteristics that may suggest authorship. Toolchains can be instructed to strip out variable names, function names and other symbols and metadata – which may say something about the author – and alter the structure of code through optimization.

Nonetheless, the researchers – Aylin Caliskan, Fabian Yamaguchi, Edwin Dauber, Richard Harang, Konrad Rieck, Rachel Greenstadt and Arvind Narayanan – building on work described in a 2011 paper, demonstrate that binary files can be analyzed using machine-learning and stylometric techniques.

"We show that programmers who would like to remain anonymous need to take extreme countermeasures to protect their privacy," the eggheads explained in their paper, presented last month at the Network and Distributed System Security Symposium (NDSS 2018) in California, USA.

The researchers analyzed solutions submitted to Google's Code Jam challenges and, with eight training samples per programmer, were able to accurately attribute authorship 96 per cent of the time given a pool of 100 candidate programmers and 83 per cent of the time with a pool of 600.

They also found that advanced programmers are more easily identified than novices because the highly skilled develop more distinctive styles.

Specifically, the researchers took C++ Code Jam solutions, compiled them into 32-bit x86 ELF Unix executables using the GCC toolchain, and ran them through their decompile-identify process.

The boffins tested their technique on samples culled from GitHub, which were more difficult to analyze than the Google Code Jam dataset because GitHub repos often contain modules written by someone other than the repo owner. Nonetheless, they were able to achieve an accuracy rate of 65 per cent with a set of 50 programmers.

For programmers with a large number of GitHub samples – seven in one case and eleven in another – accuracy increased to 100 per cent.

The paper described a four-step process: disassembling the program from binary to get its machine code instructions using Radare2 and ndisasm; decompiling the program into C-like pseudo code using the Hex-Rays decompiler; dimensionality reduction, by which the code is reduced to the most salient potential identifiers; and processing the code with a random-forest classifier, a machine-learning algorithm.
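To make the last two steps concrete, here is a toy sketch in Python. It is not the researchers' code, and several things in it are assumptions for illustration only: the instruction-mnemonic strings stand in for real disassembler output, the author names are made up, the feature selection simply keeps the features whose frequency varies most across samples, and a nearest-centroid classifier is swapped in for the random forest so the example needs nothing beyond the standard library.

```python
from collections import Counter
from math import sqrt

def bigrams(mnemonics):
    """Count adjacent instruction pairs, a crude stand-in for stylistic features."""
    toks = mnemonics.split()
    return Counter(zip(toks, toks[1:]))

def normalise(counts):
    """Turn raw counts into relative frequencies."""
    total = sum(counts.values()) or 1
    return {k: v / total for k, v in counts.items()}

def select_features(samples, keep):
    """Dimensionality reduction (assumed scheme): keep the `keep` features
    whose frequency varies most across the training samples."""
    feats = sorted({f for vec, _ in samples for f in vec})
    def spread(f):
        vals = [vec.get(f, 0.0) for vec, _ in samples]
        mean = sum(vals) / len(vals)
        return sum((v - mean) ** 2 for v in vals)
    return sorted(feats, key=spread, reverse=True)[:keep]

def train(samples, keep=8):
    """Build one average feature vector (centroid) per known author."""
    feats = select_features(samples, keep)
    by_author = {}
    for vec, author in samples:
        by_author.setdefault(author, []).append(vec)
    centroids = {
        author: [sum(v.get(f, 0.0) for v in vecs) / len(vecs) for f in feats]
        for author, vecs in by_author.items()
    }
    return feats, centroids

def predict(model, vec):
    """Attribute a sample to the author with the nearest centroid."""
    feats, centroids = model
    point = [vec.get(f, 0.0) for f in feats]
    def dist(c):
        return sqrt(sum((p - q) ** 2 for p, q in zip(point, c)))
    return min(centroids, key=lambda author: dist(centroids[author]))

# Hypothetical training data: two samples each from two made-up authors.
TRAINING = [
    ("push mov sub mov mov call add pop ret", "alice"),
    ("push mov sub mov call mov add pop ret", "alice"),
    ("push mov lea xor test jne lea call ret", "bob"),
    ("push lea xor test jne lea lea call ret", "bob"),
]

model = train([(normalise(bigrams(code)), who) for code, who in TRAINING])
unknown = normalise(bigrams("push mov sub mov mov mov call add pop ret"))
print(predict(model, unknown))  # alice
```

The real pipeline works on far richer features drawn from both the disassembly and the decompiled pseudo code, and on hundreds of candidate authors, but the shape is the same: extract features, throw away the uninformative ones, and ask a classifier which known programmer the remainder most resembles.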

Carry (bit) conceal

Programmers interested in preserving their privacy might believe that they can obfuscate their code to conceal their authorship. However, the boffins report success identifying even deliberately munged code.

They used the GNU strip utility to remove all symbol information from binaries, and while that reduced classification accuracy by 24 per cent, they concluded "stripping symbol information from executable binaries is not effective enough to anonymize an executable binary sample."

Along similar lines, they tried processing binaries with tools like Obfuscator LLVM, which can swap instructions out with a semantic equivalent, alter control flow graphs, and even add unnecessary instructions. They still managed 88 per cent accuracy in identifying authors.
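For a feel of what swapping an instruction for a semantic equivalent means, consider the Python sketch below. It is an illustration of the general idea only, not output from Obfuscator LLVM, and the function names are made up: three ways of adding two integers that look different but always produce the same result.

```python
def add_plain(a, b):
    """What the programmer wrote."""
    return a + b

def add_negated(a, b):
    """Equivalent: subtract the negation instead of adding."""
    return a - (-b)

def add_bitwise(a, b):
    """Equivalent: XOR gives the sum without carries, AND gives the carries."""
    junk = a * 0  # unnecessary instruction that does not affect the result
    return (a ^ b) + 2 * (a & b) + junk

# All three agree on every input in this small range.
for a in range(-20, 21):
    for b in range(-20, 21):
        assert add_plain(a, b) == add_negated(a, b) == add_bitwise(a, b)
```

Rewrites like these change individual instructions while leaving the program's overall logic in place, which may be one reason the researchers could still pick out authors.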

The caveat here is that the researchers focused on programmers who were not trying to obscure their authorship, and they acknowledge that there are more sophisticated anti-forensic techniques they did not evaluate.

In an email to The Register on Friday this week, Princeton postdoctoral researcher Aylin Caliskan said that while she had not analyzed the impact of code formatting tools like linters, which could have some effect, that impact would not be enough to anonymize code.

"Especially if the programmer uses the same formatting methods in a deterministic way, it will have zero effect on de-anonymization," she said. "Even obfuscation or symbol stripping does not anonymize binaries."

"The countermeasures are obfuscation in a nondeterministic way using different set of tools, randomizing all kinds of malware and code properties and packing, hiding time patterns as well as malware specific things such as command and control servers, etc," she explained. "Simple obfuscations such as lexical and layout refactoring (which are the current off the shelf obfuscators) are not effective at all since it does not affect the grammatical structure of the code. This would require using for example function virtualizers."

Recommendations

For developers looking to preserve their privacy, the researchers have some recommendations, though they're difficult to follow.

One is not having any public repositories that could serve as a basis for stylometric comparison.

Another is using a different identity for every bit of code released. Then there's adopting a different coding style for each piece of software written. Finally, they suggest applying different optimizations and obfuscations.

If privacy is the goal, Caliskan suggested as a starting point not maintaining public code repositories online.

"More important is not having any presence over the Internet," she said. "Randomized pseudonyms with randomized code and malware (unless a malware author is planning to share only one code sample with the entire world), as well as the above methods is a starting step." ®