
How Google's black box Knowledge Graph can kill you

Factoid flu

Comment When Knowledge Graph – Google's apparently authoritative box to the side of the search results – sneezes, the world catches a factoid flu.

This was vividly dramatised in a recent NYT article in which writer Rachel Abrams discovered that, according to the Infobox, she had "died" four years earlier. She then tried to convince Google she was actually alive. This wasn't easy.

Nick Carr, a writer who focuses on technology and culture, raises a further problem: Google's algorithms mixed up two well-known John Grays in a single Knowledge Graph entry.

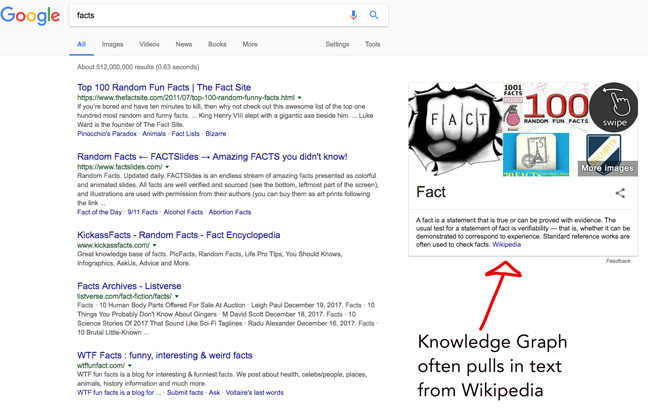

For both, you can thank Google's "Infobox", or Knowledge Graph: a box of Google-selected "facts" about your search terms that appears to the right of the results on your Google results page.

In an article here two years ago, Andreas Kolbe, then co-editor of the Wikipedia Signpost, explored the consequences. Since Kolbe's 2015 piece, however, information from the Infobox has spread dramatically, because it now provides the source for popular new devices such as Amazon's Echo. These gadgets spray out facts on demand, but they don't necessarily tell the listener where the information came from.

"It's a black box. What goes in there, Google doesn't tell us," Kolbe explained today. "Very often it's from Wikipedia, but not always."

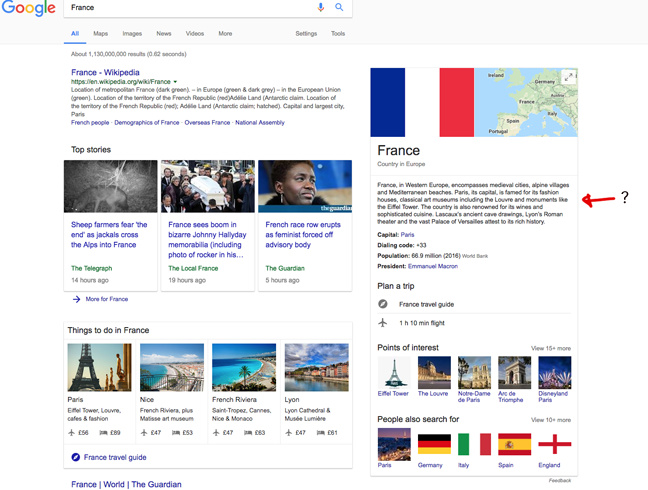

Have a look at the Infobox for France.

For once, the source isn't Wikipedia, but it isn't attributed either. Nor is the source easy to trace. Take the opening phrase, "France, in Western Europe, encompasses medieval cities, alpine villages and Mediterranean beaches...", and search for websites where it appears. You'll find it repeated across hundreds of results, none of which acknowledge the source either.

Wikipedians are concerned too.

"The value of Wikipedia is as a tool to find sources. For that, it's quite good. But now we have devices such as Amazon Echo and Siri reading Wikipedia content without necessarily even telling people it's from Wikipedia; [that] link has been broken. The content becomes detached from its sources, and people just repeat it. That's a huge structural weakness to have in a knowledge management system," Kolbe cautioned.

Carr's essay poses the question: "Is it OK to run an AI when you know that it will spread falsehoods to the public – and on a massive scale? Is it OK to treat truth as collateral damage in the supposed march of progress?"

As Kolbe wrote in 2015, apparently authoritative data with no accountability trail is "a propagandist's dream. Anonymous accounts. Assured identity protection. Plausible deniability. No legal liability. Automated import and dissemination without human oversight. Authoritative presentation without the reader being any the wiser as to who placed the information and which sources it is based on. Massive impact: search engines have the power to sway elections."

A start might be to require the giant platforms that use Wikidata, Wikimedia's structured-data repository, to provide an accountability trail: an "according to..." attribution.

Wikimedia's strategy statement has grand ambitions for the project: "The essential infrastructure of the ecosystem of free knowledge."

But Kolbe is wary. To him, it sounds like a single point of failure.

The arrangement also increases inequality: the generators of the data are unpaid volunteers, but the "customers" of the data are huge for-profit corporations. The paradox at the heart of free culture, he points out, is that it ends up producing greater inequality. Google can afford it: witness how much it forks out to be the default search engine. ®