
What on Earth is Terraform: Life support for explorers of terrifying alien worlds

It can create or destroy. Yes, we're still talking about IT infrastructure...

Terraform is taking over as one of the critical new technologies for managing composable infrastructure both in and out of the cloud. Where does it fit in a world with Docker, Kubernetes, Puppet and other tools that all seem to be important elements in this space?

Terraform is an open source infrastructure automation tool created by HashiCorp. The company makes a bunch of other tools in the infrastructure-as-code space as well, most of them designed to work together. Infrastructure automation tools like Terraform create and destroy basic IT resources such as compute instances, storage, networking, DNS, and so forth.

If you've used AWS, you've probably used CloudFormation. This is infrastructure automation. Terraform can basically be thought of as CloudFormation, but without the single-vendor lock-in. Infrastructure automation is a critical piece of the composable infrastructure puzzle.

Terraform can manage infrastructure for multiple public cloud providers, service providers, and on-premises solutions (such as VMware's vSphere). At the moment, it appears to be the dominant open source infrastructure automation tool. If you plan on using more than one infrastructure provider, you're probably going to end up having to learn Terraform at some point.

Spawning versus managing

Infrastructure automation tools have a complicated relationship with Configuration Management (CM) tools. The big four CM tools, which you may have heard of, are Puppet, Chef, Ansible and SaltStack.

Terraform spawns and destroys infrastructure. This could include, for example, creating a virtual data center inside vSphere, configuring that data center with appropriate resources and networking, and then registering all of these infrastructure components with DNS. Terraform could just as easily bring up a new DigitalOcean droplet, or configure resources in the AWS region of your choice.
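
Terraform configurations are usually written in HCL, but Terraform also reads the same structure as JSON from files ending in `.tf.json`. As a minimal sketch of what "bring up a new DigitalOcean droplet" looks like in code, the Python below builds such a JSON config. The resource label, region, size and image slug are illustrative assumptions, not recommendations.

```python
import json


def droplet_config(label: str, region: str, size: str, image: str) -> dict:
    """Build a Terraform JSON-syntax config declaring one DigitalOcean droplet."""
    return {
        "terraform": {
            # Tell Terraform which provider plugin to download on `terraform init`.
            "required_providers": {
                "digitalocean": {"source": "digitalocean/digitalocean"}
            }
        },
        # Empty provider block: the API token is expected to come from the
        # DIGITALOCEAN_TOKEN environment variable rather than from this file.
        "provider": {"digitalocean": {}},
        "resource": {
            "digitalocean_droplet": {
                label: {
                    "name": label,
                    "region": region,
                    "size": size,
                    "image": image,
                }
            }
        },
    }


if __name__ == "__main__":
    # Example values only; check the provider's current region/size/image slugs.
    config = droplet_config("web-1", "lon1", "s-1vcpu-1gb", "ubuntu-22-04-x64")
    print(json.dumps(config, indent=2))
```

Saving that output as `main.tf.json` and running `terraform init`, `terraform plan` and `terraform apply` would create the droplet, and `terraform destroy` would remove it again. Targeting another vendor means swapping in that vendor's provider and resource types, while the workflow stays the same.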

Anything Terraform can find a provider for, it can create or destroy. This is where infrastructure automation tools and configuration management tools start to step on one another's toes.
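
Deciding what to create or destroy boils down to comparing what the code declares with what Terraform's state says already exists. The sketch below is a simplified model of that comparison, not Terraform's actual implementation: real plans also work out dependency order and whether a changed attribute can be updated in place or forces the resource to be replaced. The resource addresses and attributes are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    create: list[str] = field(default_factory=list)
    update: list[str] = field(default_factory=list)
    destroy: list[str] = field(default_factory=list)


def plan(desired: dict[str, dict], state: dict[str, dict]) -> Plan:
    """Diff the desired resources (from code) against the recorded state.

    Both arguments map a resource address such as "digitalocean_droplet.web"
    to that resource's attributes.
    """
    result = Plan()
    for addr in sorted(desired):
        if addr not in state:
            result.create.append(addr)   # declared in code, does not exist yet
        elif desired[addr] != state[addr]:
            result.update.append(addr)   # exists, but differs from the code
    for addr in sorted(state):
        if addr not in desired:
            result.destroy.append(addr)  # exists, but was removed from the code
    return result


desired = {
    "digitalocean_droplet.web": {"size": "s-2vcpu-2gb"},
    "digitalocean_record.www": {"type": "A"},
}
state = {
    "digitalocean_droplet.web": {"size": "s-1vcpu-1gb"},
    "digitalocean_droplet.old": {"size": "s-1vcpu-1gb"},
}
print(plan(desired, state))
```

Here the plan reports one resource to create (the DNS record), one to update (the resized droplet) and one to destroy (the droplet that no longer appears in the code). Running the same comparison with identical inputs yields an empty plan, which is what makes repeated runs safe.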

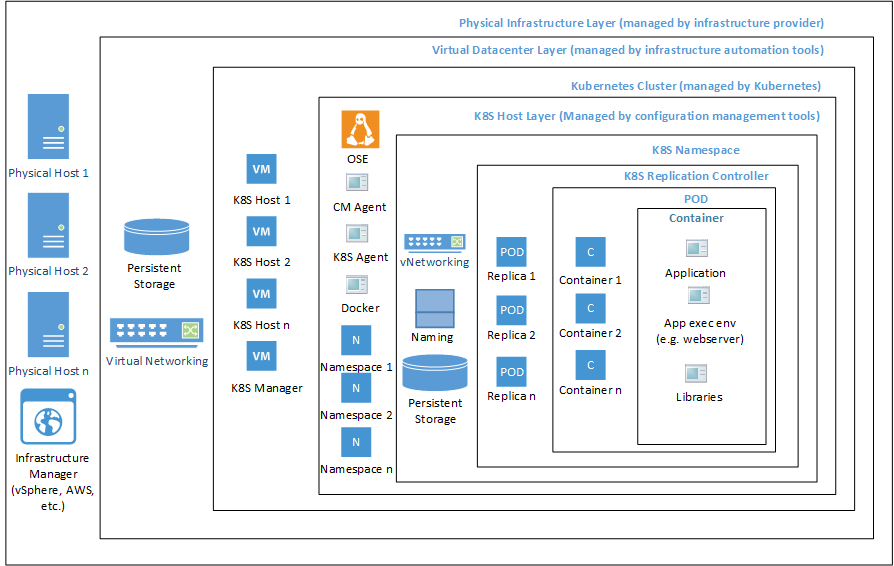

If everything were neatly separated, Terraform would create the infrastructure, including spawning all relevant VMs, and then hand those VMs off to CM tools. The CM tools would then configure the Operating System Environments (OSEs). This includes managing patches and making sure any additional operating system agents are installed.

In reality, all of the CM tools are trying to be infrastructure automation tools, but they're nowhere near as good at infrastructure automation as the purpose-built offerings. Similarly, Terraform providers exist that allow Terraform to manage individual applications – especially databases – and there is even a Terraform provider allowing it to boss Chef around.

Containers

Wherever composable infrastructure is discussed, containers are never far behind. To understand where they fit, we need to understand a little more about the role of CM tools.

While CM tools can do a great many things, in the real world their primary purpose is to keep OSEs in line. You define the state for your OSE in code, and through the CM agent installed in the OSE, the CM management platform ensures that state is enforced. If someone tries to alter the state, then the CM tool throws an error and attempts remediation.
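
Stripped of agents and management servers, the enforcement idea is a loop that compares declared state with observed state and corrects any difference. The sketch below models that in Python; the setting names are hypothetical, and real Puppet, Chef, Ansible or SaltStack runs describe resources in their own languages and report drift through their own channels.

```python
def enforce(desired: dict[str, str], actual: dict[str, str]) -> list[str]:
    """One pass of a CM-style agent: find drift from the declared state and fix it.

    `actual` is modified in place to match `desired`; the return value is a
    log of every correction, which a real tool would surface as an error or event.
    """
    corrections = []
    for key, want in desired.items():
        have = actual.get(key)
        if have != want:
            corrections.append(f"drift on {key}: {have!r} -> {want!r}")
            actual[key] = want  # stand-in for reinstalling a package, rewriting a file, etc.
    return corrections


# Hypothetical declared state for an OSE.
desired = {"package:ntp": "installed", "sshd:PermitRootLogin": "no"}
# Someone has edited the SSH setting by hand.
actual = {"package:ntp": "installed", "sshd:PermitRootLogin": "yes"}

print(enforce(desired, actual))  # first run reports and fixes the drift
print(enforce(desired, actual))  # second run finds nothing to do
```

Because the loop only acts on differences, running it repeatedly is harmless, which is why CM agents can simply run on a schedule.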

If the OSE in question is the final application wrapper, then CM tools would be used to install the VM tools agent, configure the OSE for the application, configure the application and so forth. If the OSE in question is to be part of a container cluster, the CM tools would install the kubelet (the Kubernetes node agent) and hand the system off to Kubernetes. This is where we get into containers.

Think of Kubernetes as "vSphere for containers". Like vSphere, the Kubernetes control plane lashes the nodes assigned to it together into a cluster. It handles networking for those nodes, including reverse proxies and service discovery for containers.

Again, like vSphere, Kubernetes reads a configuration – in this case a YAML text file – and then spawns namespaces on hosts under its control. Namespaces are like virtual data centers for containers. They contain storage, networking and name resources.

A replication controller lives inside a namespace and manages how many copies of a pod exist. Pods contain one or more containers. Docker is used to install and configure applications inside individual containers.
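
A replication controller is another desired-state loop: it counts the pods that exist and creates or deletes pods until the count matches the configured number of replicas. The Python below models only that counting logic; the pod names and the "delete from the end of the list" rule are simplifications of our own. In current Kubernetes the same job is usually done by a ReplicaSet managed by a Deployment, and the real controller ranks pods by its own criteria when choosing which to delete.

```python
import uuid


def reconcile(replicas: int, pods: list[str], prefix: str = "web") -> list[str]:
    """Return a pod list with exactly `replicas` entries.

    Missing pods are "created" with a random suffix; surplus pods are
    "deleted" from the end of the list.
    """
    if replicas < 0:
        raise ValueError("replicas must be non-negative")
    pods = list(pods)  # don't mutate the caller's list
    while len(pods) < replicas:
        pods.append(f"{prefix}-{uuid.uuid4().hex[:5]}")  # create a pod
    del pods[replicas:]  # delete any extras
    return pods


running = ["web-a1b2c", "web-d3e4f"]
running = reconcile(3, running)  # one pod is missing, so one gets created
print(running)
running = reconcile(1, running)  # scaled down, so the extras get deleted
print(running)
```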

This is a lot of layers. So why go to all the bother? What's so special about infrastructure as code?

Immutable infrastructure

Infrastructure as code allows IT teams to treat infrastructure like traditional code libraries: they can write their infrastructure code once and then use it many times. The code that defines the infrastructure can be versioned, forked and rolled back, just like any other code.

This brings us to the concept of "immutable infrastructure". If infrastructure design is codified, then any deviations from that design can be discovered and addressed programmatically. In other words, if infrastructure has been manually modified, you destroy it and respawn a clean copy.
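
Once the design is code, checking for drift is mechanical: hash a canonical form of the design and of each instance's observed configuration, and respawn whatever doesn't match. The Python below is a minimal sketch of that replace-don't-repair policy; the instance names and configuration fields are hypothetical, and "respawning" is modelled as copying the design.

```python
import copy
import hashlib
import json


def fingerprint(config: dict) -> str:
    """Stable SHA-256 of a configuration; dictionary key order does not matter."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def converge(design: dict, instances: dict[str, dict]) -> tuple[dict[str, dict], list[str]]:
    """Replace, rather than repair, every instance that differs from the design."""
    wanted = fingerprint(design)
    result, replaced = {}, []
    for name, observed in instances.items():
        if fingerprint(observed) == wanted:
            result[name] = observed
        else:
            # Immutable approach: discard the drifted instance and respawn a
            # clean copy from the codified design (modelled here as a deep copy).
            result[name] = copy.deepcopy(design)
            replaced.append(name)
    return result, replaced


design = {"image": "base-ose-v2", "open_ports": [22, 443]}
fleet = {
    "vm-1": {"open_ports": [22, 443], "image": "base-ose-v2"},        # matches the design
    "vm-2": {"image": "base-ose-v2", "open_ports": [22, 443, 8080]},  # hand-edited
}
fleet, replaced = converge(design, fleet)
print(replaced)
```

A fingerprint is convenient in practice because it can be stamped onto an instance as a tag or label when it is built, so later checks only need to compare two short strings.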

Because all changes must be defined in code, a good version control system lets anyone tracing the source of a problem simply look through the history of changes to the design files. This allows infrastructure teams to roll back to known good designs quickly. It also allows them to find out who made changes to a given design and, hopefully, why.

In practice

That's great in theory, but there are some practical considerations.

In order to spawn OSEs, Terraform can deploy Packer images. With Packer, one defines characteristics of the OSE. These characteristics include the instance type to use, the operating system image to use, and any provisioners. Provisioners would do things like copy in a shell script and execute it, or install a CM agent.
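
To make those characteristics concrete, the Python below emits a Packer template in its older JSON format with an `amazon-ebs` builder (instance type and source OS image) and two provisioners that copy a script in and execute it. The region, AMI ID, SSH user and `bootstrap.sh` script are placeholders we have assumed for illustration.

```python
import json


def packer_template(region: str, source_ami: str, instance_type: str) -> dict:
    """A Packer template in the legacy JSON format: one AWS builder, two provisioners."""
    return {
        "builders": [
            {
                "type": "amazon-ebs",
                "region": region,
                "source_ami": source_ami,        # the operating system image to start from
                "instance_type": instance_type,  # the instance type to build on
                "ssh_username": "ubuntu",        # assumes an Ubuntu base image
                "ami_name": "base-ose-{{timestamp}}",  # Packer fills in the build time
            }
        ],
        "provisioners": [
            # Copy a (hypothetical) bootstrap script into the instance...
            {"type": "file", "source": "bootstrap.sh", "destination": "/tmp/bootstrap.sh"},
            # ...then run it, e.g. to install a CM agent.
            {"type": "shell", "inline": ["sudo sh /tmp/bootstrap.sh"]},
        ],
    }


if __name__ == "__main__":
    # Placeholder AMI ID: substitute a real one for your region.
    template = packer_template("eu-west-2", "ami-0123456789abcdef0", "t3.micro")
    print(json.dumps(template, indent=2))
```

Saved as `template.json`, this would be built with `packer build template.json`. HashiCorp now recommends HCL2 templates over JSON, and recent Packer releases may need the Amazon builder installed as a separate plugin, so treat this as an outline of the moving parts rather than a drop-in file.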

These images can be a problem. For composability to work, the images used need to be configured to allow Packer to log in after the image is instantiated and upload scripts. Secrets can be specified in the Packer config, or Vault can be used to manage them securely. Vault is yet another HashiCorp project.

For tracking infrastructure under management, Terraform keeps state files for the infrastructure it has created and needs to manage. These files can be backed up in the traditional fashion in case something happens to the Terraform application itself, allowing a newly recreated Terraform instance to resume managing infrastructure abandoned by a crashed instance. HashiCorp also manages a project called Consul, which can serve as a backend to securely store Terraform's state files.
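
Pointing Terraform at Consul takes only a few lines of configuration. As a minimal sketch, the Python below emits a `consul` backend block in Terraform's JSON syntax (files ending in `.tf.json`); the Consul address and key path are hypothetical.

```python
import json


def consul_backend(address: str, path: str) -> dict:
    """Terraform JSON config that stores state in Consul instead of a local file."""
    return {
        "terraform": {
            "backend": {
                "consul": {
                    "address": address,  # Consul HTTP API address (host or host:port)
                    "scheme": "https",   # talk to Consul over TLS
                    "path": path,        # key in Consul's KV store that holds the state
                }
            }
        }
    }


if __name__ == "__main__":
    # Hypothetical Consul host and key path.
    print(json.dumps(consul_backend("consul.example.com", "terraform/prod"), indent=2))
```

After adding the file, `terraform init` configures the backend and offers to copy any existing local state into Consul. The Consul backend also supports state locking, so two runs can't write the same state at once. Credentials such as a Consul ACL token are best kept out of the file, for example in the `CONSUL_HTTP_TOKEN` environment variable.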

If you're wishing HashiCorp would just integrate all of this into a single "vSphere for infrastructure as code", you're not the only one!

Success with composable infrastructure still depends on the infrastructure provider. How do they make images available, and do they offer a method to define initial image context so that something like Packer can perform initial configuration and inject CM agents?

Where the infrastructure is VMware or another on-premises solution, someone still has to physically rack the servers, connect them to the network and install a hypervisor. That hypervisor then has to be connected to an infrastructure management solution.

Alternatively, one could install an OSE and CM agent directly on the metal and make it a bare-metal Kubernetes node. The downside is that if the OSE on bare metal deviates from the design, it's more difficult to destroy and respawn than if it were a big VM living on top of a hypervisor.

Combatting YOLO-Ops

The ability to tear down entire virtual data centres and respawn them elsewhere in seconds is a long way from the YOLO-Ops approach of needing to manually configure, monitor and manage each workload. Infrastructure automation tools like Terraform aren't going to magically make infrastructure composable.

All of the tools mentioned above are just one piece of the puzzle, and each has to be mastered and implemented before the real fun begins. Once one has achieved composability, however, the sky's the limit.

Organisations can move entire virtual data centres between on-premises infrastructure, various service providers and the major public clouds. With a little bit of work, organisations can even tie Terraform into something that checks spot prices for infrastructure from various providers, and move entire virtual data centres around as spot prices change. It's a nice dream, but an open question as to how many organisations will achieve it. ®