
Apple whispers how its face-fingering AI works

Secretive biz opens up a little

Apple has let us all in on a little secret: how its deep-learning-based face detection software works in iOS 10 and later.

In a blog post published this week, the Cupertino giant described in a fair amount of detail how its algorithms operate. The biz revealed its code is based on OverFeat, a model developed by researchers at New York University that trains a deep convolutional neural network (DCN) to classify, locate and detect objects in images.

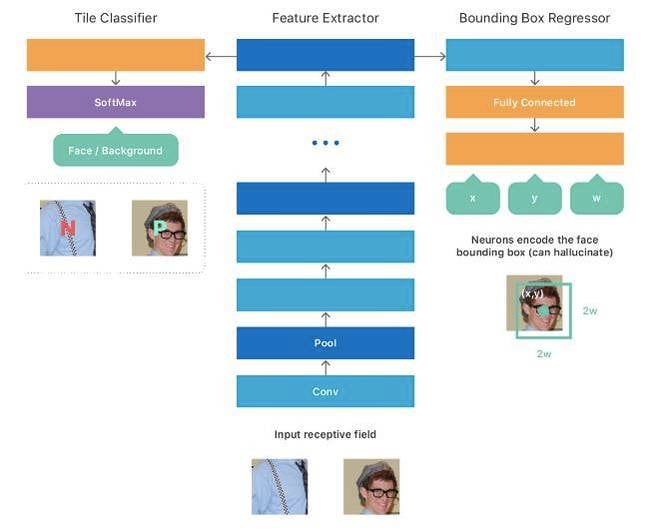

It works by using a binary classifier to detect whether or not a face is present somewhere in an image, and a bounding-box regression network to do the actual job of locating the faces in the snap so they can be, for example, tagged by apps.

Each picture is first run through a feature extractor that breaks the image down into shapes and regions, and the resulting features are passed to the binary classifier and the regressor to process.
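To make that structure concrete, here is a toy sketch in plain Python of a shared feature extractor feeding two heads: a binary classifier that outputs a face probability, and a regressor that outputs box coordinates. It is not Apple's network. The two edge filters are hypothetical hand-picked stand-ins for learned weights, a real DCN stacks many convolutional layers, and all function names here are our own.

```python
import math
from typing import List, Sequence, Tuple

Image = List[List[float]]  # grayscale image: rows of pixel intensities


def conv2d(image: Image, kernel: Image) -> Image:
    """'Valid' 2D convolution (cross-correlation, as in most CNN libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(fmap: Image) -> Image:
    """Zero out negative responses."""
    return [[max(0.0, v) for v in row] for row in fmap]


# Hypothetical hand-picked filters standing in for learned weights.
VERTICAL_EDGE = [[1.0, -1.0], [1.0, -1.0]]
HORIZONTAL_EDGE = [[1.0, 1.0], [-1.0, -1.0]]


def extract_features(image: Image) -> List[float]:
    """Shared feature extractor: convolve with each filter, rectify, flatten."""
    features: List[float] = []
    for kernel in (VERTICAL_EDGE, HORIZONTAL_EDGE):
        features.extend(v for row in relu(conv2d(image, kernel)) for v in row)
    return features


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def face_probability(features: Sequence[float], weights: Sequence[float],
                     bias: float) -> float:
    """Binary-classifier head: probability that a face is present."""
    return sigmoid(sum(w * f for w, f in zip(weights, features)) + bias)


def face_box(features: Sequence[float], weight_rows: Sequence[Sequence[float]],
             biases: Sequence[float]) -> Tuple[float, ...]:
    """Regression head: one linear output per box coordinate (e.g. x, y, w, h)."""
    return tuple(sum(w * f for w, f in zip(row, features)) + b
                 for row, b in zip(weight_rows, biases))
```

Feeding `extract_features` a 4x4 image that is bright on the left and dark on the right lights up only the vertical-edge features, and with all-zero weights the classifier head sits on the fence at 0.5. Training is what turns those weights into something useful.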

To train the classifier, the software was shown a big bunch of photos of stuff you'd normally see in the background of snaps, with no sign of any faces, described as the negative class, and an assortment of human faces, described as the positive class. The aim was to teach the software to confidently decide whether or not a photo contains at least one person's face.
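Training a binary classifier on positive and negative examples can be sketched with logistic regression, fitted by stochastic gradient descent on binary cross-entropy. This is a deliberately tiny stand-in for a deep network: the two-number feature vectors are hypothetical summaries of image crops, and the learning rate, epoch count and function names are arbitrary choices of ours.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_face_classifier(positives, negatives, epochs=200, lr=0.5, seed=0):
    """Logistic regression trained with SGD on binary cross-entropy.

    positives: feature vectors from face crops (label 1, the positive class)
    negatives: feature vectors from face-free background crops (label 0)
    """
    data = [(x, 1.0) for x in positives] + [(x, 0.0) for x in negatives]
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            grad = p - y  # d(cross-entropy)/d(logit) for a sigmoid output
            weights = [w - lr * grad * xi for w, xi in zip(weights, x)]
            bias -= lr * grad
    return weights, bias


def predict(weights, bias, x) -> float:
    """Probability that feature vector x contains a face."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
```

With separable toy data, say positives `[[0.9, 0.8], [0.8, 0.95], [0.85, 0.7]]` and negatives `[[0.1, 0.2], [0.2, 0.05], [0.15, 0.3]]`, the trained model scores every positive above 0.5 and every negative below it.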

Meanwhile, the regressor was taught how to mark out faces on the photo with bounding boxes. The software is capable of picking out multiple faces in a given shot.
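The article doesn't say how overlapping detections of the same face are merged when several faces are in shot. A common technique in object detectors, shown here purely as an assumed illustration rather than Apple's method, is non-maximum suppression (NMS): keep the highest-scoring box, throw away any box that overlaps an already-kept box too much as measured by intersection-over-union (IoU), and repeat. The `(x1, y1, x2, y2)` box format and the 0.5 threshold are our choices.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2): top-left, bottom-right


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        iou_threshold: float = 0.5) -> List[int]:
    """Return indices of kept boxes, highest-scoring first.

    A box is dropped if it overlaps an already-kept, higher-scoring box by
    more than iou_threshold, so each face ends up with a single box.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in kept):
            kept.append(i)
    return kept
```

Given two near-identical boxes around one face and a third box around a second face, NMS keeps the better of the first two plus the third, leaving one box per face.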

Deep-learning algorithms can be computationally intensive even for today's relatively powerful mobile processors and coprocessors. The DCNs in Apple's system have several components, some with more than 20 neural-network layers, and it’s not easy shoving all that number-crunching into a battery-powered iThing.

Thus, Apple turned to a teacher-student training technique to reduce the resources needed to run face detection on handsets and fondleslabs. Essentially, a teacher model is developed and trained on beefier computers, and then smaller student models are trained to produce the same outputs as the teacher for a given set of inputs.
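Teacher-student training is usually called knowledge distillation. The sketch below is a generic, minimal illustration of the idea and not Apple's training code: a hypothetical "teacher" (an ensemble of three small logistic models with made-up weights, standing in for a big network) labels a grid of inputs with soft probabilities, and a single logistic unit, the "student", is fitted by full-batch gradient descent to reproduce those outputs without ever seeing ground-truth labels.

```python
import math
from itertools import product


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


# Hypothetical teacher: three logistic models with made-up weights whose
# averaged output stands in for a large network trained on beefier hardware.
TEACHER_MEMBERS = [((3.0, 2.0), -2.5), ((2.5, 2.5), -2.0), ((4.0, 1.0), -3.0)]


def teacher(x):
    return sum(sigmoid(w[0] * x[0] + w[1] * x[1] + b)
               for w, b in TEACHER_MEMBERS) / len(TEACHER_MEMBERS)


def student(params, x):
    """Much smaller model: a single logistic unit with three parameters."""
    w0, w1, b = params
    return sigmoid(w0 * x[0] + w1 * x[1] + b)


def distill(inputs, steps=2000, lr=1.0):
    """Fit the student to the teacher's soft outputs (cross-entropy loss)."""
    targets = [teacher(x) for x in inputs]
    params = [0.0, 0.0, 0.0]
    n = len(inputs)
    for _ in range(steps):
        g = [0.0, 0.0, 0.0]
        for x, t in zip(inputs, targets):
            err = student(params, x) - t  # d(cross-entropy)/d(logit)
            g[0] += err * x[0]
            g[1] += err * x[1]
            g[2] += err
        params = [p - lr * gi / n for p, gi in zip(params, g)]
    return params


def mismatch(params, inputs):
    """Mean squared gap between student and teacher outputs."""
    return sum((student(params, x) - teacher(x)) ** 2 for x in inputs) / len(inputs)


# Unlabelled inputs: a 5x5 grid over [0, 1] x [0, 1].
grid = [(a / 4, b / 4) for a, b in product(range(5), repeat=2)]
```

Calling `distill(grid)` and comparing `mismatch` before and after training shows the student's outputs moving much closer to the teacher's. The point of the design is that only the cheap student needs to ship on the device.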

The smaller student models can be optimized for speed and memory, and crammed into iOS handhelds. As a result, it takes less than a millisecond to run through each layer, we're told, allowing faces to be detected in the blink of an eye.

“Combined, all these strategies ensure that our users can enjoy local, low-latency, private deep learning inference without being aware that their phone is running neural networks at several hundreds of gigaflops per second,” Apple boasted.

The iGiant's weapons-grade secretiveness hampers its efforts to recruit machine-learning experts, who usually prefer the open nature of academia. This week's blog post is an attempt to open up a bit more and lure fresh talent.

Overall, the document offers a gentle explanation of how Apple’s face detection software works. It is light on the nitty-gritty details of the DCNs compared to the academic papers the blog post references. Application programmers can access the face-detection system on iThings via the Vision API. ®

Editor's note: This story was corrected after publication to make clear the documented technology is Apple's face detection API, and not the Face ID security mechanism as first reported. Face ID is a separate technology that uses infrared sensors in the iPhone X to identify and authenticate device owners. We are happy to clarify this distinction.