
Facebook tried teaching bots art of negotiation – so the AI learned to lie

Given training data was real human chatter, this says more about us than anything else

Let's be honest, despite last year's burst of hype, chatbots haven’t progressed beyond asking and answering simple questions. Still, researchers aren't letting go of their dream of a perfect digital assistant.

If bots are to be really helpful, they’ll need to be more than a dumb user interface. They will have to engage in more complex chatter, which is hard to do. One area of complex conversation that has received little attention is negotiation. Developers are, right now, crafting bots that do specific jobs, such as helping people book flights or make restaurant reservations, but in those jobs both parties share the same goal – getting someone on a plane, or finding them a table.

What happens if they don’t want the same thing? Well, it turns out chatbots, after being taught to negotiate, will sometimes lie to get what they want. Who said artificial intelligence had to be nice?

In an experiment, the Facebook AI Research (FAIR) team forced two bots to learn how to negotiate with one another by presenting them with a bargaining task.

Both agents are shown the same set of objects – two books, one hat and three balls – and they have to split the items between them. They have been programmed to want different things: each class of object is worth a different number of points to each bot. The goal is for the bots to compromise so that both walk away with decent scores.
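To make the bargaining task concrete, here is a minimal Python sketch of the scoring. The item pool comes from the article; the per-item point values (`VALUES_A`, `VALUES_B`) are hypothetical numbers chosen for illustration, and the "best compromise" rule (maximise the worse-off bot's score) is our own illustrative criterion, not the method FAIR's bots use. It simply shows why a split that suits both players exists when they value items differently.

```python
from itertools import product

# Item pool from the article: two books, one hat, three balls.
POOL = {"book": 2, "hat": 1, "ball": 3}

# Hypothetical private values (points per item); not taken from the paper.
VALUES_A = {"book": 1, "hat": 2, "ball": 2}
VALUES_B = {"book": 2, "hat": 6, "ball": 0}


def score(share, values):
    """Points a bot earns for the items in its share."""
    return sum(values[item] * count for item, count in share.items())


def all_splits(pool):
    """Yield every way to divide the pool as (share for A, share for B)."""
    items = list(pool)
    for counts in product(*(range(pool[i] + 1) for i in items)):
        share_a = dict(zip(items, counts))
        share_b = {i: pool[i] - share_a[i] for i in items}
        yield share_a, share_b


def best_compromise(pool, values_a, values_b):
    """Illustrative rule: pick the split that maximises the lower of the two scores."""
    return max(
        all_splits(pool),
        key=lambda split: min(score(split[0], values_a), score(split[1], values_b)),
    )


a, b = best_compromise(POOL, VALUES_A, VALUES_B)
print(a, score(a, VALUES_A))  # → {'book': 1, 'hat': 0, 'ball': 3} 7
print(b, score(b, VALUES_B))  # → {'book': 1, 'hat': 1, 'ball': 0} 8
```

With these made-up values, bot A cares about balls and bot B about the hat, so each can get most of what it wants: neither score is the maximum of 10, but both are decent.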

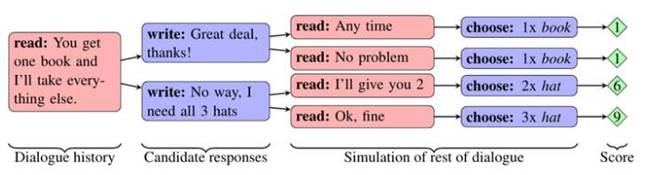

The technique behind this negotiation, dubbed dialogue rollouts, is described in a paper published online on Wednesday. It allows bots to plan and reason ahead, Mike Lewis, the paper's lead author and a researcher at FAIR, and Dhruv Batra, a coauthor and assistant professor at Georgia Tech, explained to The Register.

“In games like chess or Go, agents have to consider what the counter move will be, as there are many different possibilities. [Dialogue rollout] applies a similar idea. If I say this, what will you say back?”

For each candidate response, the bot simulates how the rest of the conversation might play out before deciding which one to use.
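The look-ahead idea can be sketched in a few lines of Python. This is a toy under stated assumptions: in the real system a learned model would simulate the partner's replies, whereas here a hypothetical `toy_simulate` function and a made-up `TOY_OUTCOMES` table (chance the partner agrees, points if they do) stand in for it. The bot scores each candidate by the average final reward over many simulated continuations and picks the best.

```python
import random
from statistics import mean

# Hypothetical outcome model: (probability the partner agrees, points if they do).
TOY_OUTCOMES = {
    "I want everything.": (0.1, 10),
    "I'll take the hat and the books.": (0.5, 8),
    "You take the balls, I'll take the rest.": (0.9, 6),
}


def toy_simulate(utterance, rng):
    """Stand-in for simulating the rest of the dialogue; returns final points."""
    p_agree, points = TOY_OUTCOMES[utterance]
    return points if rng.random() < p_agree else 0


def choose_by_rollouts(candidates, simulate, n_rollouts=2000, seed=0):
    """Pick the candidate whose simulated continuations score best on average."""
    rng = random.Random(seed)

    def expected_reward(utterance):
        return mean(simulate(utterance, rng) for _ in range(n_rollouts))

    return max(candidates, key=expected_reward)


best = choose_by_rollouts(list(TOY_OUTCOMES), toy_simulate)
print(best)
```

The greedy opening ("I want everything.") promises the most points but rarely lands a deal, so its expected reward (about 1 point) loses to the modest offer that the partner usually accepts (about 5.4 points). Looking ahead is what lets the bot see that trade-off.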

The bots can only spar with words they were taught. The training data was compiled from 5,808 human dialogues, containing about 1,000 distinct words, all generated by real people grafting away for the Amazon Mechanical Turk service. The bots learn to imitate the ways people compromise so that they can try to predict what the other person will say in a given situation.
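The prediction step can be illustrated with a deliberately crude stand-in: a count-based model that learns, from human dialogues, which reply most often follows a given message. FAIR trained neural networks for this; the frequency-count approach, the function names `train_reply_model` and `predict_reply`, and the toy dialogues below are all hypothetical, meant only to show what "imitating people to predict their replies" means.

```python
from collections import Counter, defaultdict


def train_reply_model(dialogues):
    """Count which reply follows each message in a set of human dialogues."""
    counts = defaultdict(Counter)
    for turns in dialogues:
        for message, reply in zip(turns, turns[1:]):
            counts[message][reply] += 1
    return counts


def predict_reply(model, message):
    """Return the reply humans gave most often, or None for unseen messages."""
    if message not in model:
        return None
    return model[message].most_common(1)[0][0]


# Toy dialogues standing in for the Mechanical Turk transcripts.
dialogues = [
    ["i want the hat", "you can have it if i get the balls", "deal"],
    ["i want the hat", "you can have it if i get the balls", "no deal"],
    ["i want the hat", "no, the hat is mine"],
]
model = train_reply_model(dialogues)
print(predict_reply(model, "i want the hat"))
```

Like the bots in the article, this model can only produce replies it has seen: anything outside its training data gets `None`.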

The team used a mixture of supervised learning for the prediction phase and reinforcement learning to help the bots choose which response to give. If the software agents walk away from the negotiation or do not reach an agreement within 10 rounds of dialogue, both receive zero points, so it is to their benefit to broker a deal.
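The end-of-game payoff described above acts as the reinforcement-learning reward. A minimal sketch in Python follows; the function name `final_reward`, its parameters, and the item values are hypothetical, and only the zero-points rule and the 10-round limit come from the article.

```python
MAX_ROUNDS = 10  # the 10-round limit described in the article


def final_reward(deal, values, rounds_used, walked_away=False):
    """Points one bot receives when a negotiation ends.

    deal: dict of item -> count this bot receives, or None if no agreement.
    values: this bot's private points per item (hypothetical here).
    """
    # Walking away, failing to agree, or running past the limit pays nothing.
    if walked_away or deal is None or rounds_used > MAX_ROUNDS:
        return 0
    return sum(values[item] * count for item, count in deal.items())


values = {"book": 1, "hat": 2, "ball": 2}  # hypothetical values
print(final_reward({"book": 1, "ball": 3}, values, rounds_used=6))   # → 7
print(final_reward(None, values, rounds_used=10))                    # → 0
print(final_reward({"book": 1, "ball": 3}, values, rounds_used=11))  # → 0
```

Because every failed negotiation scores zero, even a mediocre deal beats holding out, which is the pressure that pushes a reward-maximising bot toward compromise.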

The most interesting tactic to emerge was deception. Sometimes bots feigned interest in objects they didn’t really want, then pretended to concede them later in the bargaining.

“They learned to lie because they discovered a strategy that works, given the game reward. Maybe it occurred a few times in the training dataset. Humans don’t tend to be deceptive in Amazon Mechanical Turk, so it’s a rare strategy,” Batra said.

The hope is that the negotiation skills learned here can be extended to other settings, such as using bots to book meetings or to buy and sell products – all useful features for personal assistants.

Facebook’s assistant M, built for its Messenger platform, is an ongoing project. The hype around its release fell flat when it was revealed that it could only perform a limited number of tasks, including recommending stickers, sharing locations, and getting a ride on Uber or Lyft.

The researchers said there were no immediate plans to apply dialogue rollouts to M. But they have opened it up to developers by releasing their negotiation code on GitHub. ®