
AI is all trendy and fun – but it's still a long way from true intelligence, Facebook boffins admit

To be fair, the same goes for many humans, too

Researchers at Facebook have attempted to build a machine capable of reasoning from text – but their latest paper shows true machine intelligence still has a long way to go.

The idea that one day AI will dominate Earth and bring humans to their knees as it becomes super-intelligent is a genuine concern right now. Not only is it a popular topic in sci-fi TV shows such as HBO’s Westworld and UK Channel 4’s Humans – it features heavily in academic research too.

Research centers such as the University of Oxford’s Future of Humanity Institute and the recently opened Leverhulme Centre for the Future of Intelligence in Cambridge are dedicated to studying the long-term risks of developing AI.

The potential risks of AI mostly stem from its intelligence. The paper, which is currently under review for the 2017 International Conference on Learning Representations, defines intelligence as the ability to predict.

“An intelligent agent must be able to predict unobserved facts about their environment from limited percepts (visual, auditory, textual, or otherwise), combined with their knowledge of the past.

“In order to reason and plan, they must be able to predict how an observed event or action will affect the state of the world. Arguably, the ability to maintain an estimate of the current state of the world, combined with a forward model of how the world evolves, is a key feature of intelligent agents.”

Before a machine can learn to reason about or predict events, it has to be able to keep track of its environment. It’s something Facebook has been interested in for a while: its bAbI project is “organized towards the goal of automatic text understanding and reasoning.”

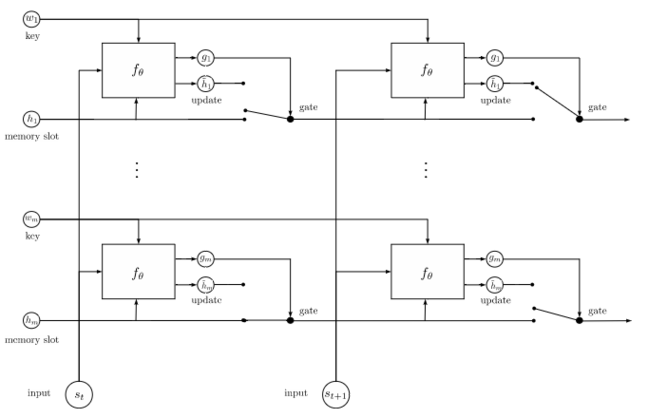

The latest attempt uses a new model called the Recurrent Entity Network (EntNet), a recurrent neural network built so that it behaves like a set of gated memory cells working in parallel. The gates are key: they control when new information is stored, so that it can be recalled later during reasoning.

The agent is tested on a series of tasks. First, it’s given a sequence of statements and events in text, then a second series of statements about the final state of the world. Here, that second series takes the form of questions that test its ability to keep track of the world state.

For example, a well-trained agent should be able to understand and answer questions from simple sentences such as: “Mary picked up the ball. Mary went to the garden. Where is the ball?” It should reply, “garden.”
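To make "keeping track of the world state" concrete, here is a hand-written, rule-based tracker for stories of this kind. It is not EntNet and learns nothing; it is a sketch that assumes every statement fits one of a few fixed templates (the regular expressions and the `where_is`/`answer` names are illustrative, not taken from bAbI's generator), and it shows the state a trained model has to infer for itself.

```python
import re
from typing import Dict, Optional

# Illustrative sentence templates; bAbI's real generator has its own vocabulary.
MOVE = re.compile(r"^(\w+) (?:went|moved|travelled|journeyed) to the (\w+)$")
PICK = re.compile(r"^(\w+) (?:picked up|got|grabbed) the (\w+)$")
DROP = re.compile(r"^(\w+) (?:dropped|put down|discarded) the (\w+)$")
QUESTION = re.compile(r"^Where is (?:the )?(\w+)\?$")


def where_is(story: str, entity: str) -> Optional[str]:
    """Replay the story one statement at a time and report where `entity` ends up."""
    location: Dict[str, str] = {}  # person or object -> place
    holder: Dict[str, str] = {}    # object -> person currently carrying it
    for sentence in (s.strip() for s in story.split(".")):
        move, pick, drop = MOVE.match(sentence), PICK.match(sentence), DROP.match(sentence)
        if move:
            person, place = move.groups()
            location[person] = place
        elif pick:
            person, obj = pick.groups()
            holder[obj] = person
        elif drop:
            person, obj = drop.groups()
            holder.pop(obj, None)
            if person in location:
                location[obj] = location[person]  # the object stays where it was dropped
    # A carried object is wherever its carrier currently is.
    if entity in holder:
        return location.get(holder[entity])
    return location.get(entity)


def answer(story: str, question: str) -> Optional[str]:
    match = QUESTION.match(question.strip())
    if match is None:
        raise ValueError(f"unsupported question: {question!r}")
    return where_is(story, match.group(1))


print(answer("Mary picked up the ball. Mary went to the garden.", "Where is the ball?"))
# → garden
```

Hard-coding the rules works only because the sentences are so regular; the point of EntNet is to learn an equivalent state from examples instead.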

It seems impressive, but there’s a catch that can be seen only when examining how EntNet really works.

Machines are still pretty dumb

The gated network is referred to as a dynamic memory, and each memory cell has its own gate. There is no direct interaction between the memory cells, so the network can be seen as multiple identical processors working in parallel.

Each cell stores information about one entity in the world; in the example scenario, that could be Mary or the ball. When a new sentence carries information about an entity whose state has changed, the gate for that entity's cell opens and the cell is updated, so old information about that entity is overwritten with new information.
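As we read the paper, each memory slot $j$ has a key $w_j$ and a content vector $h_j$. When the model reads a sentence encoded as a vector $s_t$, every slot is updated in parallel using the same shared parameter matrices $U$, $V$ and $W$:

$$
\begin{aligned}
g_j &\leftarrow \sigma\left(s_t^\top h_j + s_t^\top w_j\right) \\
\tilde{h}_j &\leftarrow \phi\left(U h_j + V w_j + W s_t\right) \\
h_j &\leftarrow h_j + g_j\,\tilde{h}_j \\
h_j &\leftarrow \frac{h_j}{\lVert h_j \rVert}
\end{aligned}
$$

Here $\sigma$ is the logistic sigmoid, so the gate $g_j$ is a single number between 0 and 1 that grows when the sentence matches either the slot's current contents or its key. $\phi$ is a pointwise nonlinearity (a parametric ReLU in the paper), and the final normalization keeps each slot at unit length, which lets new information gradually crowd out the old.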

This way, the agent can keep track of several entities at once, updating only the relevant ones without wiping information about the others. By keeping tabs on each entity over time, it tracks the state of the world. When it’s asked a question about a certain entity, it goes back to the corresponding memory cell to recall the information.

Diagram of how EntNet works. Each memory slot is a gate holding information about a word embedding; it can be unlocked using a key, and then updated.
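The gated memory described above can be sketched in plain Python. This is a minimal, untrained illustration under stated assumptions, not Facebook's code: `DynamicMemory`, `write` and `read` are hypothetical names, the shared matrices U, V and W are random rather than learned, a fixed-slope leaky ReLU stands in for the paper's learned nonlinearity, and each slot's content starts out equal to its key. `write` applies a gated update to every slot in parallel; `read` answers a query with softmax attention over the slots. The paper's final output layer is left out.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def matvec(m: Matrix, v: Vector) -> Vector:
    return [dot(row, v) for row in m]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def leaky_relu(v: Vector, slope: float = 0.01) -> Vector:
    # Assumption: fixed-slope stand-in for the learned (parametric) ReLU.
    return [x if x > 0 else slope * x for x in v]


class DynamicMemory:
    """Hypothetical, untrained sketch of an EntNet-style gated memory."""

    def __init__(self, keys: List[Vector], seed: int = 0) -> None:
        dim = len(keys[0])
        rng = random.Random(seed)
        self.keys = [list(k) for k in keys]
        # Assumption: each slot's content starts out equal to its key.
        self.h = [list(k) for k in keys]
        # U, V, W are shared by every slot; random here, learned in practice.
        self.U, self.V, self.W = (
            [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(dim)]
            for _ in range(3)
        )

    def write(self, s: Vector) -> List[float]:
        """Apply the gated update for sentence vector s; return each slot's gate."""
        gates, new_h = [], []
        for w, h in zip(self.keys, self.h):
            # The gate opens when the sentence matches the slot's content or its key.
            g = sigmoid(dot(s, h) + dot(s, w))
            # Candidate content mixes the old content, the key and the sentence.
            pre = [
                a + b + c
                for a, b, c in zip(matvec(self.U, h), matvec(self.V, w), matvec(self.W, s))
            ]
            cand = leaky_relu(pre)
            updated = [hi + g * ci for hi, ci in zip(h, cand)]
            # Rescale to unit length so new content gradually crowds out old content.
            norm = math.sqrt(dot(updated, updated)) or 1.0
            new_h.append([x / norm for x in updated])
            gates.append(g)
        self.h = new_h
        return gates

    def read(self, q: Vector) -> Tuple[List[float], Vector]:
        """Softmax attention over slots; return the weights and the mixed memory."""
        scores = [dot(q, h) for h in self.h]
        top = max(scores)
        exps = [math.exp(x - top) for x in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        mixed = [sum(p * h[i] for p, h in zip(weights, self.h)) for i in range(len(q))]
        return weights, mixed


# Usage: one-hot keys for "Mary" (slot 0) and "the ball" (slot 1).
memory = DynamicMemory(keys=[[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]])
gates = memory.write([0.0, 1.0, 0.0, 0.0])      # a sentence vector mentioning only the ball
weights, _ = memory.read([0.0, 1.0, 0.0, 0.0])  # roughly "where is the ball?"
```

On the first write, the ball's slot gets a gate of σ(2) ≈ 0.88 while Mary's slot gets exactly 0.5: an untrained memory is only loosely selective, and training the shared matrices is what would sharpen it.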

Identifying important entities

So far, so good. EntNet generally performs better when it is free to work out which information is important and can tag entities by itself. However, when it has to deal with trickier datasets, like the Children's Book Test, encoding the entities directly into the model as a form of "prior knowledge" is crucial. In other words, you have to point out to the AI what's important in the story while it learns.

After training EntNet on a book, the Facebook researchers fed the AI blocks of 21 sentences taken from the story. The first 20 sentences in each block provide context and build-up, and a word is removed from the final sentence to form a question: the AI is expected to work out the missing word.
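Building those fill-in-the-blank blocks can be sketched in a few lines of Python. This is a simplified illustration, not the dataset's actual generation code: it assumes sentences are already tokenized with spaces around punctuation, it blanks the first word of the 21st sentence that appears in a caller-supplied `targets` set, and it leaves out the list of candidate answers the real dataset supplies. `make_cloze_block` and `make_cloze_dataset` are hypothetical names.

```python
from typing import List, Optional, Sequence, Set, Tuple

BLANK = "XXXXX"
Example = Tuple[List[str], str, str]  # (20 context sentences, query, answer)


def make_cloze_block(sentences: Sequence[str], targets: Set[str]) -> Optional[Example]:
    """Turn 21 consecutive sentences into one fill-in-the-blank example.

    Simplification: the first token of the 21st sentence that appears in
    `targets` is blanked out and becomes the answer.
    """
    if len(sentences) != 21:
        raise ValueError("expected exactly 21 sentences")
    context, last = list(sentences[:20]), sentences[20]
    tokens = last.split()  # assumes punctuation is already space-separated
    for i, token in enumerate(tokens):
        if token in targets:
            query = " ".join(tokens[:i] + [BLANK] + tokens[i + 1:])
            return context, query, token
    return None  # no suitable word to remove


def make_cloze_dataset(story: Sequence[str], targets: Set[str]) -> List[Example]:
    """Cut a tokenized story into non-overlapping 21-sentence blocks."""
    examples = []
    for start in range(0, len(story) - 20, 21):
        example = make_cloze_block(story[start:start + 21], targets)
        if example is not None:
            examples.append(example)
    return examples


context = [f"context sentence {n}" for n in range(20)]
ctx, query, ans = make_cloze_block(context + ["the Gryphon went on in a deep voice"], {"Alice", "Gryphon"})
print(query)
# → the XXXXX went on in a deep voice
```

Supplying the `targets` set by hand is a crude analogue of the "prior knowledge" hint discussed above: someone has to tell the system which words matter.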

For example, after training the software on Alice in Wonderland by Lewis Carroll, the following 20 lines of context were given to the AI:

1 So they had to fall a long way.

2 So they got their tails fast in their mouths.

3 So they could n't get them out again.

4 That 's all.

5 "Thank you," said Alice, "it's very interesting.

6 I never knew so much about a whiting before."

7 "I can tell you more than that , if you like," said the Gryphon.

8 "Do you know why it 's called a whiting?"

9 "I never thought about it," said Alice.

10 "Why?"

11 "IT DOES THE BOOTS AND SHOES,"

12 the Gryphon replied very solemnly.

13 Alice was thoroughly puzzled.

14 "Does the boots and shoes!"

15 she repeated in a wondering tone.

16 "Why, what are YOUR shoes done with?"

17 said the Gryphon.

18 "I mean, what makes them so shiny?"

19 Alice looked down at them, and considered a little before she gave her answer.

20 "They're done with blacking, I believe."

And then the final line that's the question:

"Boots and shoes under the sea," the XXXXX went on in a deep voice, "are done with a whiting."

Hopefully, the AI replies with the identity of XXXXX: Gryphon. As noted above, for complex tasks, such as the Children's Book Test, the researchers gave the AI a hint by listing potentially important characters and objects in the narrative. For really simple datasets, such as the bAbI set, the AI can pretty much figure out what's crucial and what's not all by itself.

“If the second series of statements is given in the form of questions about the final state of the world together with their correct answers, the agent should be able to learn from them and its performance can be measured by the accuracy of its answers,” the paper said.

The bAbI tasks, a collection of 20 question-answering datasets that test reasoning abilities, were the only exercise in which the researchers didn’t encode the entities in advance, although the correct answers were still provided during training.

Although the agent correctly stored information about the objects and characters as entities in its memory slots, the bAbI tasks are simple. Statements are about four or five words long, and they all share the same structure: the character's name comes first, followed by the action and the object.

EntNet shows that machines are still far from automated reasoning and can’t take over the world yet, but it is a pretty nifty way of introducing memory into a neural network.

Because information is stored in gated memory cells that can be accessed and updated inside the network, researchers don’t have to attach an external memory, as they do with DeepMind’s differentiable neural computer. ®