
NVMe SSDs tormented for months in some kind of sick review game

Cards on the table: These are the issues you'll face

Review NVM Express (NVMe) is the next generation specification for accessing non-volatile memory such as flash. Traditional technologies such as SAS and SATA are just too slow. In order to demonstrate how much of a difference NVMe makes, Micron has provided 12 9100 NVMe flash drives, 800GB each in the HHHL (standard PCIe card) format.

I have spent the past TWO months testing these cards, the past month of which has involved truly tormenting them. I've learned a lot of things. There's the basic "NVMe is faster" that you can get from reading about the theory behind the drives, but there have also been a lot of little practical tidbits that you only get to find out when you run face first into problems.

Performance in parts

When you read a review about some storage something or other you usually expect a lot of neat little charts. This SSD goes this fast when compared to that SSD, etc. I could make you little charts, but they would be mostly pointless.

The truth is that I haven’t tested any cards with similar enough performance profiles to make charts that are relevant. What good is a graph comparing the speed of various sedans with a scramjet?

The only cards I've seen rival the Micron 9100s are other ultra-high-end (and crazy expensive) cards such as Fusion-IO. Even then, the comparison isn't quite right; the performance profiles don't quite match. Of course, bear in mind the last time I played with a Fusion-IO card was 2013, before the advent of NVMe.

Micron 9100 NVMe flash drives, 800GB each in the HHHL (standard PCIe card) format

This NVMe/non-NVMe dichotomy is important. The differences are visible even within the same vendor. Consider that for a while, we had a Micron P420m here in the lab, and it was also pretty fast. Under some circumstances I could get straight-line speeds approaching the 9100s, but in most workload profiles the P420m was nowhere near as fast as the 9100s are.

The reason for the performance difference has less to do with Micron's black magic flash controller algorithms or super-awesome flash chips and more to do with why NVMe exists in the first place. Without writing yet another article on the "why" of NVMe, suffice it to say that NVMe allows for many more queues with far greater queue depths than either SAS or SATA.

A single process performing one set of I/O operations against the drive will produce performance very much the same as you'd see from any other flash drive. Throw 10,000 processes' worth of I/O at the drive simultaneously and the 9100s will crush other SSDs like bugs.
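A rough way to see why queue depth matters is Little's Law, which ties sustained IOPS to the number of commands in flight and the time each one takes. The sketch below is illustrative only: the 100 microsecond service time is an assumed round number, not a measured figure for the 9100, and the function names are made up for this example.

```python
# Little's Law applied to storage: IOPS = commands in flight / time per command.
# ASSUMED_LATENCY_S is a hypothetical round number, not a 9100 measurement.
ASSUMED_LATENCY_S = 100e-6  # 100 microseconds per I/O (assumption)


def iops_at_queue_depth(outstanding: int, latency_s: float) -> float:
    """Upper bound on IOPS when `outstanding` commands are kept in flight."""
    return outstanding / latency_s


def outstanding_needed(target_iops: float, latency_s: float) -> float:
    """Commands that must be in flight to sustain `target_iops`."""
    return target_iops * latency_s


if __name__ == "__main__":
    for depth in (1, 32, 256):
        bound = iops_at_queue_depth(depth, ASSUMED_LATENCY_S)
        print(f"queue depth {depth:>3}: at most {bound:,.0f} IOPS")

    # 540k is the rated random read figure for the 800GB model.
    needed = outstanding_needed(540_000, ASSUMED_LATENCY_S)
    print(f"in flight to sustain 540k IOPS: about {needed:.0f} commands")
```

Under that assumption a lone process issuing one I/O at a time cannot get anywhere near the rated figure, whatever the drive is capable of. The bound is only a ceiling: a real drive stops scaling once its controller or flash is saturated.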

Big versus little: speeds and feeds

Depending on the model of the 9100 chosen, putting a Micron 9100 into your workstation is like strapping on a Saturn V to commute to work. As a general rule, to actually realize the full potential of the 9100s they need to be in servers and you need to be throwing network-breaking amounts of traffic at them.

Headline numbers

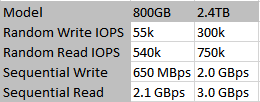

The official write speeds on the 800GB 9100s are mediocre for a modern SSD. They deliver a shrug-worthy 55k IOPS of 4K random I/O or about 650MB/sec of sequential. The reads are more impressive at 540k IOPS of random I/O or about 2.1GB/sec of sequential.

The 2.4TB version of the 9100 is rated to an impressive 300k IOPS or 2GB/sec write and a mind-numbing 750k IOPS or 3GB/sec read. Dear Santa: I want 12 of those in the 2.5" form factor. I have no idea whatsoever what I would do with them, but by Jibbers I want them.

The numbers I obtained differ slightly from the numbers published by Micron. This could be due to the fact that the 12 drives I have are "engineering samples". It could also be that Micron's official numbers use different I/O profiles.

The top line numbers I was able to pull off the 800GB 9100 drives were closer to 65k write and 600k read for random IOPS, and 700MB/sec write and 2.3GB/sec read for sequential. Of course, that's using 64K blocks with multiple workers each set to 256 queue depth: basically the ideal conditions for an NVMe SSD.

It's safe to say that Micron isn't lying about the headline numbers of these SSDs. Indeed, they're probably a little conservative. Prolonged testing also showed that queue depth really makes a difference; these drives actually take advantage of what NVMe brings to the table.
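To put a number on "a little conservative", here is a small sketch that compares the rated figures for the 800GB model with the measured ones quoted above. The dictionary keys and the function name are just labels chosen for this example, and 2.1GB/sec is treated as 2,100MB/sec.

```python
# Rated vs measured figures for the 800GB 9100, as quoted in the text.
RATED = {
    "random write IOPS": 55_000,
    "random read IOPS": 540_000,
    "sequential write MB/s": 650,
    "sequential read MB/s": 2_100,
}
MEASURED = {
    "random write IOPS": 65_000,
    "random read IOPS": 600_000,
    "sequential write MB/s": 700,
    "sequential read MB/s": 2_300,
}


def percent_over(rated: float, measured: float) -> float:
    """How far the measured value sits above the rated one, in percent."""
    return (measured - rated) / rated * 100


if __name__ == "__main__":
    for metric, rated in RATED.items():
        delta = percent_over(rated, MEASURED[metric])
        print(f"{metric:<22} rated {rated:>7,}  measured {MEASURED[metric]:>7,}  ({delta:+.1f}%)")
```

Every measured figure lands above its rated counterpart, by somewhere between roughly 8 and 18 per cent.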

Real(ish) world testing

Most of the testing occurred on my Argon server. Argon is a Supermicro SYS-1028U-TNRTP+ server that has done yeoman's work in the lab for many months. Argon's loadout for testing consisted of 2x Intel Xeon E5 2685 v3 CPUs, 384GB RAM, 2x Micron 800GB 9100s, 4x Intel 520 480GB SSDs (RAID 0) and an onboard quad port Intel 10GbE NIC.

As an aside, did you know that the 2685 v3s don't have hardware virtualization capability? Ability to turn off individual cores, but no virtualization extensions. This "metal only" CPU design is why Argon is my go-to for testing storage.

Before testing, I burned the drives in for 3 weeks. There was no noticeable change in performance at any point during the burn in process. Linux performance was better than Windows. The drives perform better the larger the block size. All perfectly normal.

Once the basic profiling was done, I focused on 4K workloads under Windows, because that is pretty much the worst scenario SSDs are likely to encounter today, and also still a fairly common one. Argon is capable of pinning a single 9100 using Iometer. (Though admittedly just barely.) Things got a lot screwier when I put two 9100s in RAID 0 and tested against that. Things were especially screwy in Windows, so I tested this scenario rather a lot.

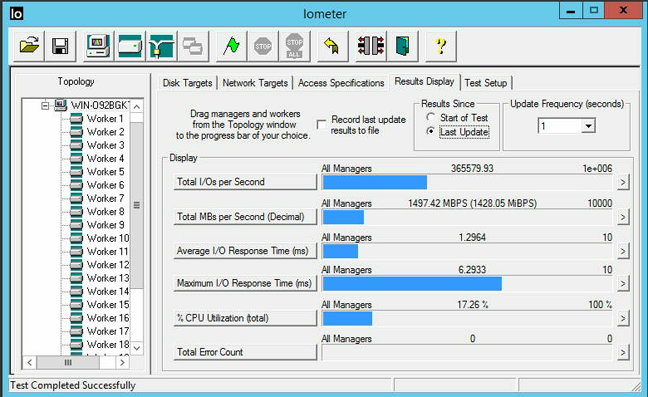

256 queue, 4K aligned, 50% Read, 100% Random, RAID 0 of 2 SSDs, 4 workers

Here are some of the results delivered by Iometer in Windows 2012 R2 against a 2 SSD RAID 0 that I think are worth discussion:

256 queue, 4K aligned, 50% Read, 100% Random, 4 workers: 1500MB/sec at 365k IOPS

256 queue, 4K aligned, 70% Read, 100% Random, 4 workers: 1575MB/sec at 385k IOPS

256 queue, 4K aligned, 100% Read, 100% Random, 4 workers: 1580MB/sec at 385k IOPS

256 queue, 4K aligned, 100% Read, 100% Random, 1 worker: 715MB/sec at 175k IOPS

256 queue, 4K aligned, 100% Read, 0% Random, 1 worker: 4575MB/sec at 175k IOPS
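The MB/sec and IOPS columns in the random results above are two views of the same measurement: throughput is IOPS multiplied by block size. The sketch below checks that, assuming "4K" means 4,096 bytes and that a megabyte is 1,000,000 bytes (both assumptions about how the tool reports, not something stated above). The function names are made up for this example.

```python
BLOCK_BYTES = 4096  # assumption: "4K" means 4,096 bytes


def throughput_mb_per_s(iops: float, block_bytes: int = BLOCK_BYTES) -> float:
    """Throughput in decimal MB/sec implied by an IOPS figure and a block size."""
    return iops * block_bytes / 1_000_000


def scaling(iops_many_workers: float, iops_one_worker: float) -> float:
    """How many times faster the multi-worker run is than the single-worker run."""
    return iops_many_workers / iops_one_worker


# (label, reported IOPS, reported MB/sec) for the 100% random rows above.
RANDOM_RESULTS = [
    ("50% read, 4 workers", 365_000, 1500),
    ("70% read, 4 workers", 385_000, 1575),
    ("100% read, 4 workers", 385_000, 1580),
    ("100% read, 1 worker", 175_000, 715),
]

if __name__ == "__main__":
    for label, iops, reported in RANDOM_RESULTS:
        implied = throughput_mb_per_s(iops)
        print(f"{label:<22} implied {implied:7.1f} MB/sec, reported {reported} MB/sec")
    print(f"4 workers vs 1 worker: {scaling(385_000, 175_000):.1f}x the IOPS")
```

The implied figures agree with the reported ones to within rounding, and four workers deliver about 2.2 times the IOPS of one rather than four times. The sequential row is left out: its 4575MB/sec does not follow from 175k IOPS at 4K, so this simple relation does not describe it.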

You can see the difference between one worker and four workers clearly here. The idea that a single user isn't going to challenge these cards is borne out in testing.

For the 100% random, 4-worker set, the 50%, 70% and 100% read results are so close as to be almost identical. On the surface, the results are reasonably impressive, but a little bit of digging shows issues. Long story short, the drives are too fast for the testing configuration used.

Every time I ran the test, 5 CPU cores would pin. Iometer is multithreaded, so I expected each of the workers to consume most of a core. That's fine – Argon certainly has cores to spare! The problem lay in the consumer of the fifth core: Windows itself.

It turns out that Windows' RAID implementation doesn't want to go much past 385K IOPS. It flattens a core and then refuses to go faster. Linux wasn't much better: I tried Devuan as well as CentOS 6 and 7, all fully patched, and ran into similar problems around the 400k IOPS mark.

Being HHHL format cards, there is no RAID controller I can hook them up to in an attempt to go faster. Not that I particularly expect any hardware RAID card to beat a Xeon core at crunching I/O, but I would like something to compare against.

Broken Windows is no fallacy

It's fun to talk about theoretical limits, but the limits of software RAID implementations have real world implications. These were demonstrated most dramatically in Windows.

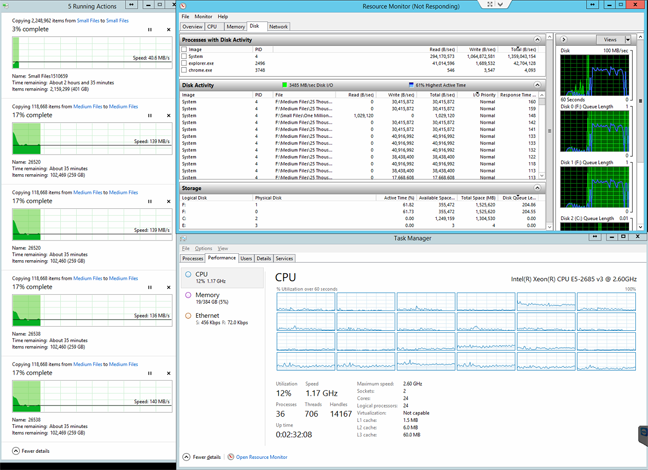

Attempting to simulate actual things I do with my servers, I copied a few million files from the 9100 RAID 0 to itself. The files were of various sizes and are a standard testbed fileset that I use for all sorts of storage testing.

I was easily able to get over 4GB/sec using Windows Explorer. Task Manager reported numerous cores under various amounts of strain, but interestingly nothing seemed to be pinning a whole core, as had happened under the Iometer test. That said, Windows was behaving very badly.

Copying from self to self - on the edge of what Windows can sustain

When I opened Resource Monitor the whole system turned into soup, Resource Monitor froze, then crashed. I repeated the test several times. At one point Resource Monitor crashed so hard it cratered the operating system itself and I was forced to reinstall. I still don't know how that happened.

It is worth pointing out that the OS was not installed onto the 9100s, but onto a hardware RAID 0 of 4x Intel 520 480GB SSDs. The behaviour persisted after reinstall. I tried different 9100 cards. I replicated the behaviour using other servers. Windows Explorer just does not like copying millions of files from a drive to itself when the drive is capable of those speeds.

Server 2016 Technical Preview 5 did not crash like Server 2012 R2 did. It also showed moderate but consistent performance increases over Server 2012 R2. Despite this, it still seemed to hit a software RAID wall before maxing out the hardware.

Breaking the network

In trying to get the most from these cards I decided to "separate the data plane from the control plane". Put one card per system, present that storage to a central unit via iSCSI and let the central unit "just be a RAID box". Run the workloads on other units entirely.

The server with the fastest single-threaded performance I have is Neon. Neon is a Supermicro 6028TP-DNCR with 2x E5-2697 v3 CPUs. While the base clock of 2.6GHz is the same as Argon's, Neon's max turbo frequency is 3.6GHz versus Argon's 3.3GHz. Neon has 256GB of RAM compared to Argon's 384GB.

With Neon as my RAID controller I begged, borrowed and stole 10GbE NICs from everywhere I could. Somehow I found 40GbE cards to put into Neon. I managed to cobble together 10 systems to host cards and even came up with enough cables to give it all a go.

For fun, I tried creating a Windows RAID across the 10 iSCSI devices presented to Neon. The RAID created, but I have no idea how fast it would have gone because as soon as I tried to stress it Server 2012 R2 simply packed it in. Sadly, I don't have any Datacore licenses, or I would have used them instead.

Linux was less of a disappointment. After a week of fussing I was regularly pulling 4M random read IOPS and pushing 500k random write IOPS. My ghetto NVMe SAN was delivering! Or so I thought. Of course, when I looked deeper I found problems.

The network was the bottleneck. After that, the CPU was also a bottleneck. I never managed to build an array that could use all 10 cards to their maximum potential.

Two 10GbE NICs are enough to service one 9100 SSD. Unfortunately, my switch only has 4x 40GbE ports, and this isn't enough to service 10x 9100 SSDs as well as network overhead. I should have been able to pull 5M random read IOPS, not 4M.

Unfortunately, there was nothing I could do to make it go faster. I was out of PCI-E slots on Neon. As it was, I was using slots designed for GPUs in the chassis and holding cards in with tape. I should have been able to get 21GB/sec sequential read, but only ever managed to get 16.5GB/sec. I tinkered with different configurations for a week, but that was the best I managed to achieve.
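The arithmetic behind those expectations is simple enough to sketch. The snippet below uses the rated figures for the 800GB model and raw Ethernet line rates; it ignores Ethernet, TCP and iSCSI overhead entirely, so the usable network numbers would be lower in practice. The constant and function names are made up for this example.

```python
CARDS = 10
RATED_SEQ_READ_GB_S = 2.1      # per card, rated
RATED_RANDOM_READ_IOPS = 540_000  # per card, rated


def line_rate_gb_per_s(links: int, gbit_per_link: float) -> float:
    """Raw line rate in GB/sec for a bundle of links (8 bits per byte, no overhead)."""
    return links * gbit_per_link / 8


if __name__ == "__main__":
    per_host = line_rate_gb_per_s(2, 10)   # two 10GbE NICs per card host
    central = line_rate_gb_per_s(4, 40)    # four 40GbE switch ports
    demand = CARDS * RATED_SEQ_READ_GB_S

    print(f"one card needs {RATED_SEQ_READ_GB_S} GB/sec; 2x 10GbE carries {per_host} GB/sec raw")
    print(f"ten cards need {demand:.1f} GB/sec; 4x 40GbE carries {central} GB/sec raw")
    print(f"ten cards are rated for {CARDS * RATED_RANDOM_READ_IOPS:,} random read IOPS in total")
    print(f"16.5 GB/sec achieved is {16.5 / central:.1%} of the raw 40GbE line rate")
```

Two 10GbE links comfortably cover a single card, but ten cards' worth of sequential reads already exceeds the raw capacity of four 40GbE ports before any protocol overhead is counted.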

Storage is no longer the bottleneck

If not running workloads locally, a modern x86 node could expect to service 2x of Micron's 9100 NVMe SSDs at full potential. Throw in some lesser storage and these would make great nodes in a scale out storage solution, assuming your software was decent and you spent a lot of money on your network.

Given that NVMe drives respond best to heavy workloads from multiple users, this is all good. The deeper the I/O queue, the better the performance you get from NVMe drives, making them ideal for servers.

Of course, most aren't going to run their storage at 100% capacity the whole time. This means that it is perfectly rational to use Micron's 9100s as the local storage on bare metal servers running high I/O workloads or in hyperconverged solutions, but with one important caveat.

NVMe SSDs are fast enough that they reveal design flaws in storage subsystems and/or can occupy a significant percentage of a system's resources just with servicing the storage. Installing NVMe SSDs will make your workload go faster, but only to a point; depending on where your workload bottlenecks, the cost of servicing ultra high speed local storage could be too high.

Test your configurations and your workloads. Thanks to NVMe, the old assumptions about bottlenecks are out the window and everything's fair game once again. ®