
Cambridge’s HPC-as-a-service for boffins, big and small

However, a ‘step change in data storage’ needed

Hawking's baby

Cambridge is also running COSMOS, the supercomputing facility led by a consortium of UK cosmologists brought together by Professor Stephen Hawking in 1997 to study cosmology, astrophysics and particle physics.

Housed at the Department of Applied Mathematics and Theoretical Physics at the uni, COSMOS is an Altix UV2000 system from SGI and is the largest shared-memory computer in Europe. It has 1,856 Intel Xeon E5 processor cores with 14.5TB of globally shared memory and – since late 2012 – 31 Intel Many Integrated Core (MIC) co-processors, providing a hybrid hierarchical SMP/MIC computing platform.

Being loaded down with Intel gear, the facility is also one of 14 Intel Parallel Computing Centres (IPCC) in Europe.

“What COSMOS does in astronomy is very advanced, it’s one of the most advanced centres in the field and they need a lot of performance to do their discoveries,” Intel's director of HPC and workstation in EMEA, Stephen Gillich, said.

“That is obviously an interesting area where we want to be of help, to have people use the latest hardware we have, which has parallelisation features, and also help them within the centre to really make sure they can make use of these resources via the software, and this is what the IPCCs are all about.”

Obviously, none of these big-data science projects would be possible without the advances in parallel computing offered by multi-core processors. But not every science project lends itself handily to parallelisation.

“You have the Xeon Phi family, which has up to 61 cores, 244 threads and even wider vector units for data parallelisation. We have that because the software that does the useful work on those processors is quite different,” Gillich said.

“There’s software that’s already kind of parallel; it’s fairly easy to do things in parallel when they don’t depend on each other, you don’t have to wait for one iteration to finish to do the next one. For instance, ray tracing is one of those applications that is fairly parallel by nature, you can do many parts of a picture at the same time,” Gillich added.

“But, there are other applications where you have lots of steps in the algorithm that are depending on each other, every step depends on the other,” Gillich said. “These obviously cannot be done in parallel, or they can only be done in parallel if you’re an expert and you can do some things without waiting for others to finish. That’s the motivation behind the new processors.”
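The contrast Gillich draws can be sketched in a few lines of Python. This is an illustration only, not code from COSMOS or Intel: the names `shade_pixel`, `render_parallel` and `iterate_chain` are hypothetical, and the pixel formula is a made-up stand-in for tracing a real ray. The first half shows work where no iteration waits on another; the second shows a chain where each step needs the previous result.

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 8, 4  # hypothetical tiny "image", chosen only for illustration


def shade_pixel(index):
    """Stand-in for tracing one ray: uses only its own pixel index."""
    x, y = index % WIDTH, index // WIDTH
    return (x * x + y * y) % 256


def render_serial():
    # One pixel after another, in order.
    return [shade_pixel(i) for i in range(WIDTH * HEIGHT)]


def render_parallel(workers=4):
    # Independent iterations: pixels can be handed to workers in any order,
    # and pool.map still returns the results in input order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(shade_pixel, range(WIDTH * HEIGHT)))


def iterate_chain(seed, steps):
    """Loop-carried dependency: step n cannot start until step n-1 is done."""
    value = seed
    for _ in range(steps):
        value = (value * 1103515245 + 12345) % 2**31
    return value


if __name__ == "__main__":
    # Same picture either way, because no pixel depends on another.
    print(render_parallel() == render_serial())
    # The chain has to be walked one step at a time.
    print(iterate_chain(1, 10))
```

Threads in standard Python will not actually speed up arithmetic like this; the point is the structure. The pixel loop can be split across as many cores as are available, while the chain cannot be split without rethinking the algorithm.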

It’s these advances that are letting Cambridge University, with Intel, effectively offer HPC-as-a-service to smaller projects or firms.

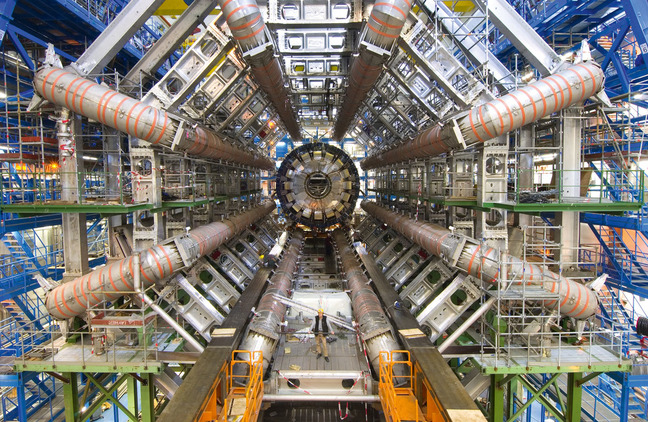

A portion of LHC's data is smashed on the Cambridge machines