This article is more than 1 year old

Elasticsearch tells us all about its weighty Big Data tool

Customers include EMC and Cisco, says firm

Idiot's guide to OpenSource Big Data entities

Let’s get some basic component technologies and entities listed; think the short version of the Dummy’s Guide to Big Data open source software.

- Apache Software Foundation - US non-profit set up to support development of free, open source software and license it

- Compass - search engine framework written in 2004 using Java by Shay Banon and built on top of Lucene. While thinking about v3 of this he realised it a new scalable search engine needed writing. Elasticsearch was the result

- Hadoop - open source software to store and process big data spread across commodity hardware clusters using the Hadoop Distributed File System (HDFS)

- JSON - JavaScript Object Notation. Attribute-value pair data objects in human-readable text form. XML alternative. JSON over HTTP is the Elasticsearch interface

- Lucene - open source library of code for information retrieval. Supported by Apache Software Foundation. Good for indexing full text and searching

- Map/Reduce - programming model and code for generating and processing large data sets. It maps data into categories, so to speak, by filtering and sorting, and then can count numbers in each category

- Solr (pronounced Solar) - open source enterprise search platform coming from the Lucene project and regarded as a joint project with Lucene

What Elasticsearch built

Banon saw banks using Compass to index their transactions and make them searchable. The realisation came that search needed to be used as a real business intelligence tool, but it has to run in real-time to be usable, and not be batch-oriented. That was what Elastic search was built to do. It's built to operate on large volumes of data - more than Autonomy for example - meaning 100s of PB of data, and across 100s of server nodes.

Apache Spark can deliver results in 10 minutes or so, whereas Elasticsearch can provide results in the 10-100m-secs timeframe. IT uses in-memory technology and has files stores on each server node.

Banon comes from a distributed systems background and says you want to run the compute next to the data. All the compute too; not search in one place, BI in another, machine learning in a third. You should do it in a single system.

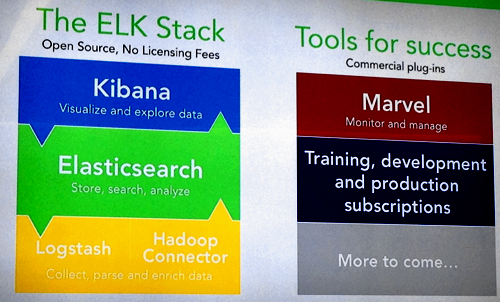

Layered above and below Elasticsearch we have Kibana and Logstash, which make up the so-called ELK stack. Logstash is a collection point (so to speak), while Kibana is a data visualisation tool

ELK stack

Logstash processes data and sends it out to disk. (ETL tool) product management veep Gaurav Gupta said: "Logstash can index log data on servers via agents. It can hook into Twitter [and] contact data sources ... like a Salesforce.com server/account."