Kill queues for fast data centres: MIT boffins

Arbitration for in-DC network traffic

MIT researchers hope to speed up networking inside the data centre with concepts that will look familiar to old networking hacks: they propose a central arbiter for network traffic that picks out a predetermined path before a packet is transmitted.

The boffins call the scheme Fastpass. Its other defining characteristic is that the central arbiter also decides when each packet can best be transmitted.

With the help of Facebook, which provided a chunk of its data centre network for the tests, the Fastpass group says it was able to achieve a reduction in queue lengths from 4.35 MB to 18 KB, a 5,200 times reduction in “the standard deviation of per-flow throughput with five concurrent connections”, and a 2.5 times reduction in TCP retransmissions in Facebook's test network.

In their paper, to be delivered at SIGCOMM 2014 in Chicago in August, the researchers explain their reasoning: “Current data center networks inherit the principles that went into the design of the Internet, where packet transmission and path selection decisions are distributed among the endpoints and routers. Instead, we propose that each sender should delegate control—to a centralised arbiter—of when each packet should be transmitted and what path it should follow.”

This, they argue, “allows endpoints to burst at wire-speed while eliminating congestion at switches.”

The three components to Fastpass are:

- The timeslot allocation algorithm, which decides when packets are sent;

- The path assignment algorithm, which assigns packets to paths between switches; and

- A control protocol for the arbiter, handling messages between the endpoints and the arbiter, and providing a replication strategy in case of arbiter or network failures.
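To give a feel for the first of those components, here is a minimal sketch of per-timeslot allocation, assuming the simplest possible rule: in any one timeslot an endpoint may send at most one packet and receive at most one packet. The function names and the demand format are made up for illustration and are not taken from the Fastpass code; path assignment and the control protocol are left out entirely.

```python
from collections import deque


def allocate_timeslot(pending):
    """Greedily admit (src, dst) pairs for one timeslot.

    pending: iterable of [src, dst, packets_remaining] lists, oldest first.
    An endpoint is used at most once as a sender and once as a receiver,
    so no two admitted packets contend for the same endpoint link.
    """
    busy_src, busy_dst = set(), set()
    admitted = []
    for entry in pending:
        src, dst, remaining = entry
        if remaining > 0 and src not in busy_src and dst not in busy_dst:
            busy_src.add(src)
            busy_dst.add(dst)
            entry[2] -= 1  # one packet of this demand is now scheduled
            admitted.append((src, dst))
    return admitted


def schedule(demands, max_slots=100):
    """Run the allocator slot by slot until all demands are served."""
    pending = deque(list(d) for d in demands)
    timeline = []
    while any(e[2] > 0 for e in pending) and len(timeline) < max_slots:
        timeline.append(allocate_timeslot(pending))
    return timeline


# Hypothetical demands: (source, destination, packets to send)
demands = [("A", "B", 1), ("A", "C", 1), ("D", "C", 1), ("D", "B", 1)]
for slot, pairs in enumerate(schedule(demands)):
    print(slot, pairs)
```

With these four demands the sketch needs two timeslots, because A and D each have two packets to send and can only send one per slot.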

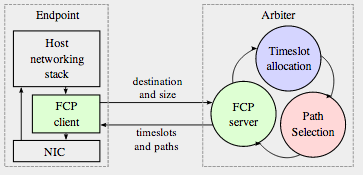

Fastpass relies on code running both at the arbiter and at the endpoints, communicating over a dedicated Fastpass Control Protocol (FCP). When an application on an endpoint calls send() or sendto(), the endpoint first passes its demand to the arbiter, which tells the endpoint when it can send and by which path.

In other words, Fastpass is an attempt to introduce determinism into the non-deterministic world of Ethernet at layer 2 and IP-based routing above.

As the image below shows, at the endpoint the FCP sits between the networking stack and the NIC. Before the endpoint sends data, the arbiter gives instructions on scheduling and path.

Fastpass captures networking data before it reaches the NIC. Image: MIT

“Because the arbiter has knowledge of all endpoint demands, it can allocate traffic according to global policies that would be harder to enforce in a distributed setting. For instance, the arbiter can allocate time-slots to achieve max-min fairness, to minimise flow completion time, or to limit the aggregate throughput of certain classes of traffic. When conditions change, the network does not need to converge to a good allocation – the arbiter can change the allocation from one timeslot to the next”, the researchers write.
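For readers who have not met max-min fairness, the idea is easy to show on a single shared link. The sketch below is the textbook "progressive filling" procedure, not the arbiter's own algorithm: small demands are met in full, and whatever capacity is left is split equally among the flows that still want more. The function name, flow labels and numbers are all invented for the example.

```python
def max_min_fair(capacity, demands):
    """Split `capacity` across flows by progressive filling.

    demands: dict mapping flow name -> requested rate.
    No flow receives more than it asked for, and flows that cannot be
    fully satisfied all end up with the same share.
    """
    allocation = {flow: 0.0 for flow in demands}
    unsatisfied = {f for f, d in demands.items() if d > 0}
    remaining = float(capacity)
    while unsatisfied and remaining > 1e-12:
        share = remaining / len(unsatisfied)
        # Flows whose outstanding demand fits inside an equal share.
        done = {f for f in unsatisfied if demands[f] - allocation[f] <= share}
        if not done:
            # Nobody can be fully satisfied: hand out equal shares and stop.
            for f in unsatisfied:
                allocation[f] += share
            remaining = 0.0
            break
        for f in done:
            remaining -= demands[f] - allocation[f]
            allocation[f] = float(demands[f])
        unsatisfied -= done
    return allocation


# Hypothetical example: a 10-unit link shared by three flows.
print(max_min_fair(10, {"a": 2, "b": 4, "c": 10}))
```

Here flows a and b get everything they asked for (2 and 4 units), and the greedy flow c is held to the 4 units that remain.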

They also claim that the system is highly scalable. The arbiter implementation can currently run on up to eight cores, and they say an eight-core arbiter can schedule 2.21 Tbps of traffic.

The Fastpass code is available on GitHub. ®