
Microsoft 'Catapults' geriatric Moore's Law from CERTAIN DEATH

FPGAs DOUBLE data center throughput despite puny power pump-up, we're told

FPGAs, Godzilla, and property values in Tokyo

So, how did Redmond come to design this technology, what did it learn along the way, and what does this tell us about the most fundamental components of our modern, digital world?

In the last decade, x86 chips from Intel and AMD have grown more powerful largely by packing more and more transistors into more and more cores, both CPU and GPU. Meanwhile CPU clock rates have maxed out below 4GHz for sustained workloads. There's an encyclopedia of different reasons for this ceiling, but we'll focus on just one: as transistors shrink, the current that powers them tends to leak – despite the best efforts of FinFET, fully depleted silicon-on-insulator, or other chip-baking technologies. Bottom line: it's impractical to scale up clock rates.

This is a problem for companies such as Microsoft, Amazon, and Google, as well as other large data-center operators. Just as their search, ranking, and other data-analysis algorithms have grown more complex, the days of easy, reliable 500MHz clock-speed boosts from Intel and AMD have ended.

Some people have labored mightily to solve this problem by creating parallel software systems to lash cores together to boost linear performance, but it's becoming increasingly clear that CPU parallelization is not the Holy Grail that it once appeared to be.

One reason for this is simple: parallel programming is devilishly difficult, so there isn't a large enough supply of developers familiar with the Lovecraftian horrors that emerge when you try to efficiently and effectively munge cores together.

Another approach to speeding up data center performance has been to try to lash more hardware together and correct the ensuing problems in software. As Microsoft researcher James Mickens put it in his seminal article, The Slow Winter [PDF], which detailed this issue from the perspective of a poor semiconductor engineer: "IT WAS THE WORST IDEA EVER. Modern software barely works when the hardware is correct, so relying on software to correct hardware errors is like asking Godzilla to prevent mega-Godzilla from terrorizing Japan. THIS DOES NOT LEAD TO RISING PROPERTY VALUES IN TOKYO."

In a nutshell, the last ten years of processor development have delivered more processing capacity for less power, but single cores are not getting much faster.

One way to get around this problem is by using distributed file systems and distributed computation systems, which is why Google invented MapReduce and the Google File System in the early 2000s. These systems make it trivial to partition complex storage and data-querying operations across seas of computer cores, but they do nothing for single-threaded performance scaling.
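To make the trade-off concrete, here is a toy, single-machine sketch of the MapReduce programming model (a word count), not Google's implementation. Threads stand in for the machines of a real cluster, and the function names are hypothetical. The input is split into independent chunks so map tasks can fan out across workers, but each individual map task still runs at whatever speed one core delivers, which is exactly the limitation described above.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_phase(chunk: str) -> list[tuple[str, int]]:
    # Emit a (word, 1) pair for every word in one partition of the input.
    return [(word, 1) for word in chunk.split()]


def shuffle(mapped: list[list[tuple[str, int]]]) -> dict[str, list[int]]:
    # Group intermediate values by key, as the framework does between phases.
    groups: dict[str, list[int]] = defaultdict(list)
    for pairs in mapped:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    # Combine all values that share a key.
    return key, sum(values)


def word_count(chunks: list[str], workers: int = 4) -> dict[str, int]:
    # Map tasks are independent, so they can be spread across many workers.
    # Adding workers adds throughput; it does not make any single task faster.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_phase, chunks))
    groups = shuffle(mapped)
    return dict(reduce_phase(k, v) for k, v in groups.items())


if __name__ == "__main__":
    print(word_count(["fpga cpu fpga", "cpu gpu"]))
```

Calling `word_count(["fpga cpu fpga", "cpu gpu"])` returns counts of 2 for `fpga`, 2 for `cpu` and 1 for `gpu`.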

Microsoft has had enough of this. A team of its top researchers (some of whom have since gone on to jobs at other megaclouds such as Amazon Web Services and Google, which are presumably carrying out similar, albeit secret, research) has rolled up its sleeves to attack the problem.

Microsoft Catapults out of Moore's Law

With its Catapult system, Microsoft believes it has come up with an approach that will allow it to scale this computational cliff – at least for a subset of its data center workloads.

"Rather than banking on scaling to many, many more cores, let's take a different path," Microsoft researcher Doug Burger told El Reg. "We think specialization is going to be the next big thing – specializing hardware for different workloads."

This approach may have a patchy history, but Microsoft feels it has worked out how to implement it in such a way that makes it both reliable and easy for a sophisticated organization to deploy.

The secret sauce is in how Microsoft's researchers have grouped and tied the FPGAs together. Previous attempts would sit a bunch of FPGAs on a server, then plug the system into a rack and have it communicate with other servers over Ethernet. Yes, this worked – but it was far too slow.

Microsoft, in fact, tried this with a prototype server that had six FPGAs in it. "It turned out that was a bad idea," Burger said. "For every server to use it, it would have to communicate across Ethernet. [Also], what happened if you needed more than six FPGAs? You'd be toast. What happened if you needed fewer? You'd have stranded capacity."

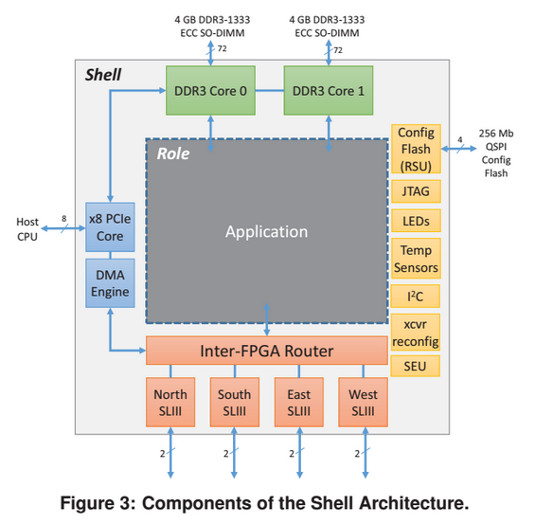

Instead, Microsoft decided to put a single FPGA in each server, then lash them together. Each FPGA is attached to its server via PCIe and sits downwind of the CPUs, where the heated air could reach 68°C. As a result of this heat bath, Microsoft had to use an "industrial-grade" FPGA rated for temperatures of up to 100°C. It also had to add EMI shielding "to protect other server components from interference from the large number of high-speed signals on the board," as explained in the ISCA paper.

A logic diagram of Microsoft's custom FPGA

The FPGAs Microsoft used are built by Altera. On them, the team implemented custom soft processor cores designed for "massive multithreading and long-latency," the paper explains: each FPGA crams 60 of these cores onto the chip, with each core supporting four simultaneous threads.

Within each group of 48 servers, Microsoft linked the FPGAs together in a six-by-eight torus, letting each FPGA talk to the others across 20Gbps bidirectional connections.

And this is where things get interesting. No single FPGA is powerful enough to hold all the logic needed for some of the Bing search engine's demanding tasks, so Microsoft programmed the system to offload processing to groups of eight FPGAs: seven for the work and one for redundancy. Each of the seven has a distinct job: the first performs feature extraction, two handle free-form expressions, one takes care of a compression stage, and the last three hold the scoring models and pass the resulting scores back to the CPU.
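The division of labour above can be sketched as a simple role assignment. The stage counts follow the article (1 + 2 + 1 + 3 = 7 working FPGAs plus one spare); the stage identifiers and the `fail_over` policy are hypothetical illustrations, since the article does not describe how Microsoft actually remaps work when a board dies.

```python
# Stage names are illustrative; the counts follow the article (1 + 2 + 1 + 3 = 7).
PIPELINE = [
    ("feature_extraction", 1),
    ("free_form_expressions", 2),
    ("compression", 1),
    ("scoring", 3),
]
GROUP_SIZE = 8  # seven working FPGAs plus one spare


def assign_stages(fpga_ids: list[int]) -> dict[int, str]:
    """Map each FPGA in a group of eight to a pipeline role; the last one is the spare."""
    if len(fpga_ids) != GROUP_SIZE:
        raise ValueError(f"expected {GROUP_SIZE} FPGAs, got {len(fpga_ids)}")
    # Expand each stage by its FPGA count, in pipeline order.
    roles = [name for name, count in PIPELINE for _ in range(count)]
    roles.append("spare")
    return dict(zip(fpga_ids, roles))


def fail_over(assignment: dict[int, str], failed: int) -> dict[int, str]:
    """Hypothetical recovery policy: the spare takes over the failed FPGA's role."""
    spare = next((fid for fid, role in assignment.items() if role == "spare"), None)
    if spare is None:
        raise RuntimeError("no spare FPGA left in this group")
    updated = dict(assignment)
    # Remove the failed FPGA from the group and hand its role to the spare.
    updated[spare] = updated.pop(failed)
    return updated


if __name__ == "__main__":
    group = assign_stages(list(range(8)))
    print(group)
    print(fail_over(group, failed=4))
```

In this sketch, losing FPGA 4 (a scoring board) moves its scoring role onto FPGA 7, the spare, so the pipeline keeps all seven stages populated.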

Each FPGA is linked to its neighbors via four high-speed serial links, which effectively gives the FPGAs their own logical network.
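A minimal sketch of that six-by-eight torus, assuming the four links run north, south, east and west with wrap-around at the edges (the usual wiring for a 2D torus; the coordinate scheme here is our own, not Microsoft's). The wrap-around is what keeps every FPGA within a few hops of every other: in a 6×8 torus the farthest board is at most 3 + 4 = 7 links away along a shortest path. Real routing in Catapult may differ.

```python
ROWS, COLS = 6, 8  # six-by-eight torus: 48 FPGAs, one per server


def neighbors(r: int, c: int) -> list[tuple[int, int]]:
    # Wrap-around at the edges is what makes this a torus rather than a mesh.
    return [
        ((r - 1) % ROWS, c),  # north
        ((r + 1) % ROWS, c),  # south
        (r, (c - 1) % COLS),  # west
        (r, (c + 1) % COLS),  # east
    ]


def hops(a: tuple[int, int], b: tuple[int, int]) -> int:
    # Shortest path: along each axis, go whichever way around the ring is shorter.
    dr = abs(a[0] - b[0])
    dc = abs(a[1] - b[1])
    return min(dr, ROWS - dr) + min(dc, COLS - dc)


if __name__ == "__main__":
    print(neighbors(0, 0))
    print(max(hops((0, 0), (r, c)) for r in range(ROWS) for c in range(COLS)))
```

For the corner board at (0, 0), the neighbors are (5, 0), (1, 0), (0, 7) and (0, 1); the two wrapped links are what a plain mesh would lack.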

"If you're on an FPGA, the neighboring FPGAs in the fabric are closer to you than your own CPU," Burger explained. "It really is like two systems, each of which has their own network, and then they're linked by a number of parallel PCIe channels." In other words, each set of FPGAs essentially runs on its own high-speed network, with limited bandwidth back to the CPUs it talks to.

By implementing this tech, Microsoft appears to have proven that FPGAs have a place in the cloud, where they can help servers perform more intensive calculations for only a modest increase in power.

"We have demonstrated that a significant portion of a complex datacenter service can be efficiently mapped to FPGAs, by using a low-latency interconnect to support computations that must span multiple FPGAs. A medium-scale deployment of FPGAs can increase ranking throughput in a production search infrastructure by 95 per cent at comparable latency to a software-only solution," Microsoft's researchers write.
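The researchers' 95 per cent figure is where the "DOUBLE" in the headline comes from. As rough arithmetic, write T for ranking throughput per server and N for the number of servers needed to carry a fixed ranking load (both symbols are ours, not the paper's). Assuming, purely for illustration, that load spreads evenly and throughput scales linearly with server count, the same load would need roughly half as many servers:

$$
\frac{T_{\text{FPGA}}}{T_{\text{software}}} = 1 + 0.95 = 1.95,
\qquad
\frac{N_{\text{FPGA}}}{N_{\text{software}}} = \frac{1}{1.95} \approx 0.51
$$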

"Distributed reconfigurable fabrics are a viable path forward as increases in server performance level off, and will be crucial at the end of Moore's Law for continued cost and capability improvements," they contend. "Reconfigurability is a critical means by which hardware acceleration can keep pace with the rapid rate of change in data center services." ®