ARM targets enterprise with 32-core, 1.6TB/sec bandwidth beastie

Think the UK boys are just mobe-chip makers? Been out of town lately?

Level-three cache sneakitudinousness

Like most elements in the CoreLink CCN-5xx microarchitecture, the L3 caches are distributed, decentralized, and scalable – in the CoreLink CCN-508, for example, there are eight L3 partitions scalable from 128KB to 4MB per partition, depending upon the partner's needs. But these cache partitions, taken together, have more on their plate than normal L3s.
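For a sense of scale, the partition figures quoted above can be multiplied out. This is only back-of-the-envelope arithmetic on the numbers in the paragraph; the function name is made up for illustration.

```python
KB = 1024
MB = 1024 * KB

def total_l3_bytes(partitions: int, bytes_per_partition: int) -> int:
    """Total L3 capacity when every partition is configured to the same size."""
    return partitions * bytes_per_partition

# CCN-508 figures from the article: eight partitions, 128KB to 4MB each.
smallest = total_l3_bytes(8, 128 * KB)
largest = total_l3_bytes(8, 4 * MB)

print(f"{smallest // MB}MB to {largest // MB}MB of total L3")
```

In other words, a CCN-508 design could carry anywhere from 1MB to 32MB of L3 in total, depending on what the partner picks.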

"We call them L3s," Filippo said, "but that's actually a misnomer. The reality is that these are a very powerful piece of the microarchitecture." Yes, the L3s do function as L3 caches for the compute cores – which have their own L1 and L2 caches, of course – but they also function as a high-bandwidth, flexible I/O cache for the entire system, enabling data movement not only between the CPUs and the I/O devices or accelerators hanging on the bus, but also among those other devices themselves.

Speaking of those CPU caches, the CCN-5xx system architecture has another nifty feature: what Filippo described as an adaptive exclusive/inclusive policy. As he explained, "Basically what that means is that this L3, generally speaking, is not inclusive of the caches that exist within the compute cluster." Basically what that means is that there's generally no redundancy between the compute-core L2 caches and the system's L3 cache partitions, thus allowing more room for cached data, improving efficiency and performance.
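Why a non-inclusive L3 leaves more room can be shown with a toy capacity model. This is a simplified sketch, not ARM's adaptive policy: it treats the cache as either strictly inclusive or strictly exclusive, and the L2 and L3 sizes are hypothetical numbers chosen only for illustration.

```python
def unique_capacity_mb(l2_total_mb: float, l3_total_mb: float, inclusive: bool) -> float:
    """Distinct data the L2s and L3 can hold between them, in MB.

    Strictly inclusive: every L2 line is duplicated in the L3, so the L2s
    add no unique capacity. Strictly exclusive: no line lives in both levels.
    """
    if inclusive:
        if l3_total_mb < l2_total_mb:
            raise ValueError("an inclusive L3 must be at least as large as the L2s it covers")
        return l3_total_mb
    return l2_total_mb + l3_total_mb

# Hypothetical system: 8MB of L2 across all clusters, 16MB of L3.
inclusive_mb = unique_capacity_mb(8, 16, inclusive=True)
exclusive_mb = unique_capacity_mb(8, 16, inclusive=False)
print(inclusive_mb, exclusive_mb)
```

With those made-up sizes, the exclusive arrangement holds 24MB of distinct data against 16MB for the inclusive one, which is the "more room" the paragraph above refers to.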

In addition, the L3 cache complex does more than act as a way station for data; it also functions as the coherency manager for the whole system. "It is the point of coherency and the point of serialization," Filippo explained. "Anybody familiar with system architecture knows that that's the fundamental piece to a coherent system," meaning that it's the one specific place in which all requests to a specific cache line – a specific address – are managed.
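The "one specific place" idea can be sketched as a function that maps every address to exactly one L3 partition. The article does not say how the CCN-5xx picks a partition, so the 64-byte line size and the simple modulo interleaving below are assumptions made purely for illustration.

```python
LINE_BYTES = 64      # assumed cache-line size, not stated in the article
NUM_PARTITIONS = 8   # CCN-508 partition count from the article

def home_partition(address: int) -> int:
    """Return the single partition responsible for this address.

    Every byte in the same cache line maps to the same partition, so all
    requests for that line meet, and can be ordered, in one place.
    """
    line_index = address // LINE_BYTES
    return line_index % NUM_PARTITIONS

# Two addresses in the same 64-byte line share a home; the next line moves on.
print(home_partition(0x1000), home_partition(0x103F), home_partition(0x1040))
```

Because the mapping is a pure function of the address, two requesters touching the same line can never be serialized by different partitions.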

Finally, the L3 also contains its own snoop filter, but your humble Reg reporter hastens to admit that his graduate degree in dramatic art did not prepare him well for a cogent analysis of the CoreLink CCN-5xx microarchitecture's address-line monitoring of memory locations to ensure cache coherency. Sorry.
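For the curious, the general textbook idea of a snoop filter is simple enough to sketch: keep a record of which compute clusters might hold each cache line, and send coherency probes only to those. The class below is a generic illustration under that assumption; its names are invented, and it says nothing about how the CCN-5xx filter is actually built.

```python
from collections import defaultdict

class SnoopFilter:
    """Toy directory tracking which clusters may hold each cache line."""

    def __init__(self) -> None:
        self._sharers: dict[int, set[int]] = defaultdict(set)

    def record_fill(self, line: int, cluster: int) -> None:
        """Note that a cluster has pulled this line into its caches."""
        self._sharers[line].add(cluster)

    def record_evict(self, line: int, cluster: int) -> None:
        """Note that a cluster has dropped this line."""
        holders = self._sharers.get(line)
        if holders is not None:
            holders.discard(cluster)
            if not holders:
                del self._sharers[line]

    def snoop_targets(self, line: int, requester: int) -> set[int]:
        """Clusters that must be probed; everyone else is left in peace."""
        return set(self._sharers.get(line, set())) - {requester}

sf = SnoopFilter()
sf.record_fill(0x40, cluster=0)
sf.record_fill(0x40, cluster=2)
print(sf.snoop_targets(0x40, requester=0))  # only cluster 2 needs a probe
```

The payoff is that a request for a line nobody else holds generates no snoop traffic at all, rather than a broadcast to every cluster.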

As mentioned above, the L3 system functions as an I/O cache, so we should also touch on the coherent I/O system itself. Bottom line: it's fast – each modular I/O bridge can provide usable bandwidth of over 40GB/sec, Filippo said.

Speaking of speeds and feeds, ARM claims that when running at 2GHz, the CoreLink CCN-508 can deliver up to 1.6TB/sec of usable system bandwidth – and that's "T" as in "tera". When equipped with DDR4 memory, its four-channel memory system can nudge up to around 75GB/sec.
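The "around 75GB/sec" memory figure is consistent with a quick peak-bandwidth calculation, if one assumes DDR4-2400 parts on standard 64-bit channels. The article does not name a speed grade, so the 2,400 megatransfers per second below is an assumption, and real-world usable bandwidth would come in under the theoretical peak.

```python
def peak_dram_gb_per_sec(channels: int, megatransfers_per_sec: int, bus_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/sec (decimal gigabytes).

    bus_bytes=8 corresponds to a 64-bit data bus per channel.
    """
    return channels * megatransfers_per_sec * 1e6 * bus_bytes / 1e9

# Four channels (from the article) at an assumed DDR4-2400 speed grade.
ddr4_peak = peak_dram_gb_per_sec(channels=4, megatransfers_per_sec=2400)
print(f"{ddr4_peak:.1f} GB/sec")
```

That works out to a shade under 77GB/sec at peak, in the same neighbourhood as the figure ARM quoted.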

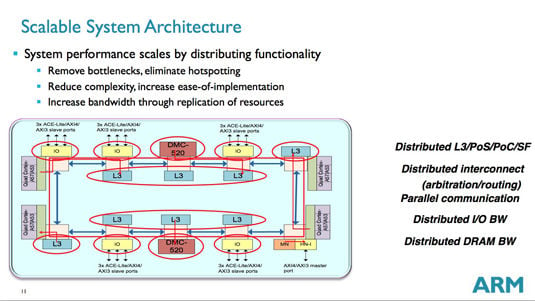

That 1.6TB/sec sounds like a boatload of bandwidth – and it is – but remember that the CCN-5xx system architecture is based on one hell of a lot of activity going on at any one time, coursing round the ring bus from modular element to modular element – check out all the lines and arrows in the presentation slide above to get an idea of how busy the bus can get.

This modularity is key to the microarchitecture's scalability. "As we build new designs," Filippo said, "staging larger and larger systems ... we basically just change some words in our description-config files, and the system builds itself."

When you get right down to it, that's the core of ARM's business philosophy: provide a broad range of IP to the world, and let chip designers mix and match them in a squillion different ways for a gazillion different use cases – such as the enterprise storage, servers, and networking markets towards which the CCN-5xx family is targeted.

Basically just change some words in the licensing-contract files, and the bottom line builds itself. ®