This article is more than 1 year old

Everything you always wanted to know about VDI but were afraid to ask (no, it's not an STD)

All you need to make virtual desktops go

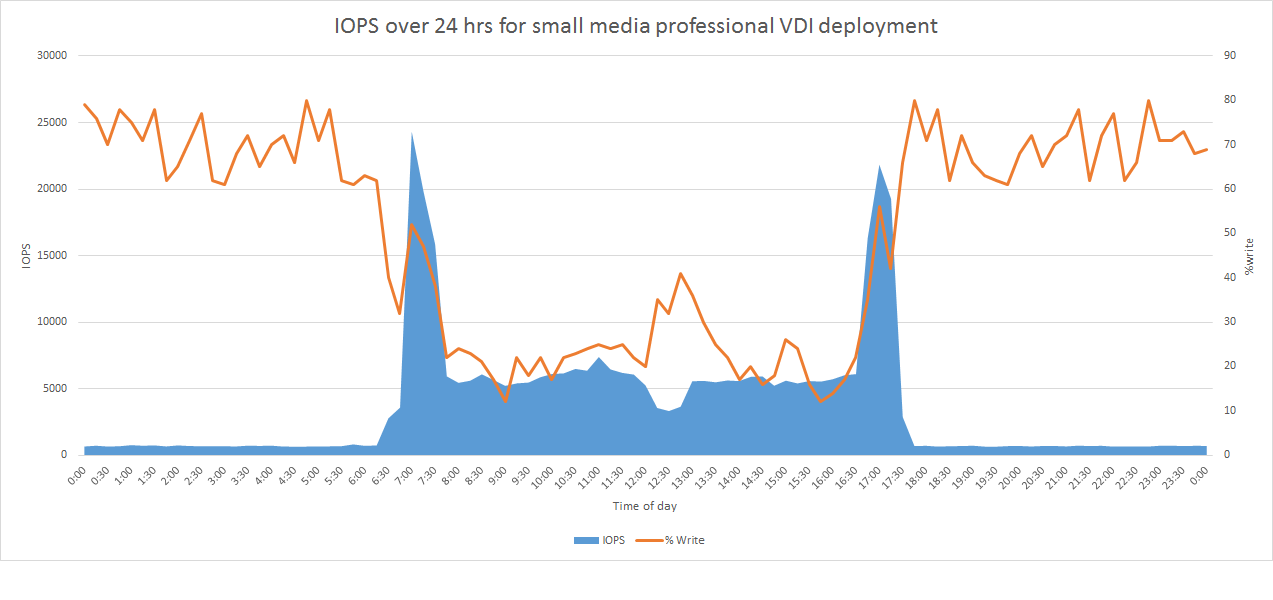

Scenario 2: The media professional

A "media professional" is a different beast altogether. Here you have someone doing professional image editing, video editing, simulation, AutoCAD or other "heavy" workloads. GPU virtualisation is going to make the biggest difference to them; however, their storage profile is so different from that of the knowledge worker that different products will suit them better.

These folks spend a lot of time reading great big files and doing lots of work with them. They do far more reading than writing, and when they do render out their files, they almost never write them to storage managed by the hypervisor. Those files are probably going out to some battered CIFS/SMB server halfway across the network, so upgrading that server is likely to make the biggest difference to the write portion of their workload.

That said, the applications they use do tend to read rather a lot, both from their own binaries and from temp files. While fast storage to render output to will make a big difference to user satisfaction, making sure you can handle a lot of read I/O will make these VMs feel a lot smoother.

This means that while popping GPUs into your VDI servers and beefing up the server their renders get written to will make the biggest difference for this group, host-based read caches are worth considering to provide a decent user experience. Hybrid arrays and certain server SAN configurations should also do the trick, though they may be quite a bit more expensive.

Another note for this workload category is that profiling network bandwidth – not just storage bandwidth – is important. Lots of sysadmins put a nice, fat 10GbE storage NIC into their VDI systems, but then leave the "user" network at 1GbE. If your users are writing their rendered files out to a CIFS/SMB server then there's a good chance that traffic will head out over your "user" network, and you need to make certain it is adequately provisioned.
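To see why the "user" NIC matters, a quick back-of-envelope calculation helps. The sketch below is illustrative only: the function name, the 20-users-by-10-GB scenario and the 90 per cent link efficiency allowance are all assumptions, not measurements. It estimates how long it takes for every user on one host to push a rendered file out over a shared link.

```python
def transfer_seconds(users: int, file_gb: float, link_gbit: float,
                     efficiency: float = 0.9) -> float:
    """Seconds to push every user's rendered file over one shared link.

    Assumes all users write at once and share the link evenly.
    `efficiency` is a rough, assumed allowance for protocol overhead.
    """
    total_gbit = users * file_gb * 8  # gigabytes -> gigabits
    return total_gbit / (link_gbit * efficiency)

# Hypothetical scenario: 20 users on one host, each writing a 10 GB render.
for link in (1, 10):
    print(f"{link} GbE: {transfer_seconds(20, 10, link):.0f} s")
```

Under those assumptions the 1GbE link needs roughly half an hour to clear the renders, while 10GbE does it in about three minutes.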

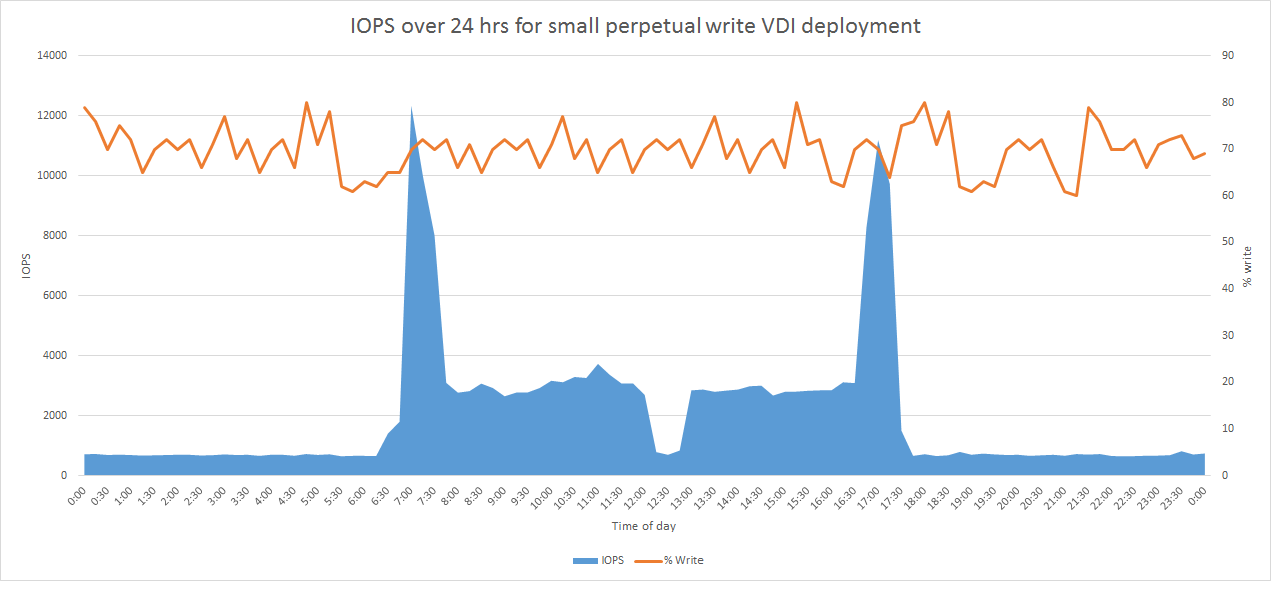

Scenario 3: The perpetual-write workloads

Another group of users I frequently deal with is the "perpetual write" folks. In general, they don't do much graphically intensive work, but they sit there doing lots of fiddly writes to their local file system all day long.

One example is developers, who are constantly compiling something or other, hammering a local database copy and so forth. Analysts are another group; they're usually turning some giant pile of numbers into another giant pile of numbers, and when you let them run around in groups, what you see is entire servers full of users emitting write IOPS all day long.

A host-based write cache might help with this, assuming you sized the cache large enough to absorb an entire day's worth of work and it has enough time to drain to centralised storage during off hours. That also assumes there are off hours in which to drain the cache.
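The two conditions above (the day's writes fit in the cache, and the cache can drain before the next working day) can be checked with simple arithmetic. This is a minimal sketch; the `WriteCachePlan` class, its fields and the example figures are hypothetical, and it conservatively ignores any draining that happens during working hours.

```python
from dataclasses import dataclass

@dataclass
class WriteCachePlan:
    cache_gb: float          # usable write cache capacity on the host
    daily_writes_gb: float   # writes the host's VMs generate in a working day
    backend_mb_per_s: float  # rate central storage can absorb the drain
    off_hours: float         # length of the quiet window available to drain

    def fits_in_cache(self) -> bool:
        # The whole day's writes must land in cache without overflowing it.
        return self.daily_writes_gb <= self.cache_gb

    def drain_hours(self) -> float:
        # Time to flush a full day's writes (GB -> MB is x1000, s -> h is /3600).
        return self.daily_writes_gb * 1000 / self.backend_mb_per_s / 3600

    def viable(self) -> bool:
        # Both conditions have to hold for the cache to keep up day after day.
        return self.fits_in_cache() and self.drain_hours() <= self.off_hours

# Hypothetical host: 800 GB cache, 600 GB of writes a day, 50 MB/s back end.
plan = WriteCachePlan(cache_gb=800, daily_writes_gb=600,
                      backend_mb_per_s=50, off_hours=10)
print(plan.fits_in_cache(), round(plan.drain_hours(), 1), plan.viable())
```

Shrink `off_hours` to two and the same plan stops being viable, which is exactly the "are there off hours?" caveat.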

If there isn't much in the way of downtime, then attempting to use a host-based write cache can get fantastically expensive very, very quickly. Yes, host-based write caches drain even while accepting new writes, but if your centralised storage is fast enough to absorb writes from hosts hammering it nonstop in real time, why are you using a write cache?
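The argument can be put in numbers: with nonstop writes, the cache fills at the difference between the ingest rate and the drain rate. The sketch below is illustrative; the function name and the example rates are assumptions.

```python
import math

def hours_until_full(cache_gb: float, ingest_mb_s: float,
                     drain_mb_s: float) -> float:
    """Hours before a write cache fills when writes arrive nonstop.

    The net fill rate is ingest minus drain. If the drain keeps up, the
    cache never fills (returns math.inf); in that case central storage
    is absorbing the load by itself and the cache adds little.
    """
    net_mb_s = ingest_mb_s - drain_mb_s
    if net_mb_s <= 0:
        return math.inf
    return cache_gb * 1000 / net_mb_s / 3600

# Hypothetical host: 800 GB cache, 120 MB/s of writes, 50 MB/s drain.
print(f"{hours_until_full(800, 120, 50):.1f} h")
```

A cache that buys only a few hours of headroom has to grow in step with the workload, which is where the expense comes from.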

Server SANs and hybrids can have the same issues. If their design is ultimately a write cache on top of spinning rust, their usefulness depends on whether or not they have time to drain writes to said rust. LSI's CacheCade would be an example.

Some hybrid arrays write everything to flash, then "destage" cold data to the spinning rust. These work just fine, so long as there is enough flash in the system. This is completely different from how host-based write caches work.

Host-based write caches must drain to central storage; that's part of the design. Among both hybrid and server SAN vendors, however, there are companies whose products don't routinely drain data from the SSD to rust. This is known as "flash first". In "flash first" setups, all data is written to the SSD, so as far as most workloads are concerned it behaves like an all-flash array. Only "cold" data is ever destaged to rust; "hot" data never gets moved or copied to rust at all.
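The difference from a write cache is easiest to see in a toy model. The `FlashFirstTier` class below is a hypothetical illustration, not any vendor's implementation: every write lands on flash, and a periodic pass moves only blocks untouched for `cold_after_s` seconds to disk, so a block that stays hot is never copied to rust.

```python
from typing import Dict, Optional

class FlashFirstTier:
    """Toy 'flash first' tier: writes go to flash; only cold blocks move to disk."""

    def __init__(self, cold_after_s: float):
        self.cold_after_s = cold_after_s
        self.flash: Dict[int, bytes] = {}
        self.last_touch: Dict[int, float] = {}
        self.disk: Dict[int, bytes] = {}

    def write(self, block: int, data: bytes, now: float) -> None:
        # Every write lands on flash; any older copy on disk is now stale.
        self.flash[block] = data
        self.last_touch[block] = now
        self.disk.pop(block, None)

    def read(self, block: int, now: float) -> Optional[bytes]:
        # Reading a flash-resident block keeps it hot. This toy model does
        # not promote disk blocks back to flash on read.
        if block in self.flash:
            self.last_touch[block] = now
            return self.flash[block]
        return self.disk.get(block)

    def destage_cold(self, now: float) -> int:
        # Move blocks idle for at least cold_after_s seconds to disk.
        cold = [b for b, t in self.last_touch.items()
                if now - t >= self.cold_after_s]
        for b in cold:
            self.disk[b] = self.flash.pop(b)
            del self.last_touch[b]
        return len(cold)

tier = FlashFirstTier(cold_after_s=60)
tier.write(1, b"hot", now=0)
tier.write(2, b"cold", now=0)
tier.read(1, now=100)                 # block 1 stays busy
moved = tier.destage_cold(now=120)    # only block 2 has gone cold
```

A host-based write cache, by contrast, would eventually copy both blocks to central storage regardless of how busy they are.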

Host-based read caches are going to be (at best) a marginal help. By caching reads they will take some pressure off the centralised storage (leaving more IOPS for writes); however, if the cumulative workload is predominantly write-based, you aren't going to see much improvement.
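A little arithmetic shows why. In the sketch below (the function name, the 10,000 IOPS total and the 90 per cent hit rate are assumptions for illustration), writes pass straight through a pure read cache and only read misses reach central storage.

```python
def backend_iops(total_iops: float, write_fraction: float,
                 read_hit_rate: float) -> float:
    """IOPS still reaching central storage behind a host-based read cache.

    Assumes a pure read cache: every write passes through, and only read
    misses reach the back end. Cache-fill I/O is ignored.
    """
    writes = total_iops * write_fraction
    reads = total_iops * (1 - write_fraction)
    return writes + reads * (1 - read_hit_rate)

# Hypothetical 10,000 IOPS workload with a 90% read hit rate.
for label, wf in (("write-heavy (80% writes)", 0.8),
                  ("read-heavy (20% writes)", 0.2)):
    print(f"{label}: {backend_iops(10_000, wf, 0.9):.0f} IOPS to the back end")
```

For the write-heavy mix the back end still sees roughly 8,200 of the 10,000 IOPS, an 18 per cent saving, whereas the read-heavy mix drops to about 2,800.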

If you only have a few of these write-intensive workloads, then any of the write caches discussed above will make the problem simply go away. If instead they make up the majority of your deployment, and your deployment runs to over a thousand instances, you should seriously consider all-flash storage, because you will likely need much more flash than can be housed in a local server.