
Meet the man building an AI that mimics our neocortex – and could kill off neural networks

Palm founder Jeff Hawkins on his life's work

Google and Microsoft's code for deep learning is the new shallow learning

But for all the apparent rigorousness of Hawkins' approach, during the years he has worked on the technology there has been a fundamental change in the landscape of AI development: the rise of the consumer internet giants, and with them the appearance of various cavernous stores of user data on which to train learning algorithms.

Google, for instance, was said in January of 2014 to be assembling the team required for the "Manhattan Project for AI", according to a source who spoke anonymously to online publication Re/code. But Hawkins thinks that for all its grand aims, Google's approach may be based on a flawed presumption.

The collective term for the approach pioneered by companies like Google, Microsoft, and Facebook is the neural-network-inspired Deep Learning, but Hawkins fears it may be another blind path.

"Deep learning could be the greatest thing in the world, but it's not a brain theory," he says.

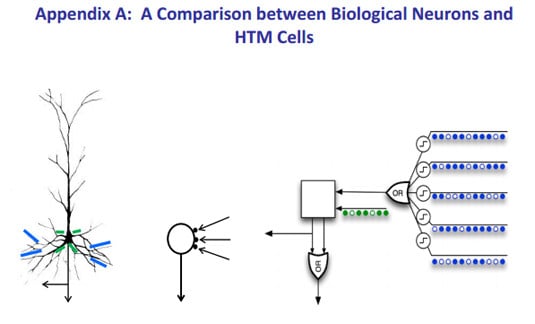

From the Hierarchical Temporal Memory white paper ... A comparison between the brain's own neuron (left), a traditional neural network neuron (center), and Hawkins' Hierarchical Temporal Memory cells (right)

Deep learning approaches, Hawkins says, encourage the industry to go about refining methods based on old technology, itself based on an oversimplified model of the neurons in a brain.

Because of the vast stores of user data available, the web companies are all trying to create artificial intelligence by building machines that compute over particular – some would say limited – types of data.

In many cases, much of the development at places like Google, Microsoft, and Facebook has revolved around computer vision – a dead end, according to Hawkins.

"Where the whole community got tripped up – and I'm talking fifty years tripped up – is vision," Hawkins told us. "They said, 'your eyes are moving all the time, your head is moving, the world is moving – let us focus on a simpler problem: spatial inference in vision'. This turns out to be a very small subset of what vision is. Vision turns out to be an inference problem. What that did is they threw out the most important part of vision – you must learn first how to do time-based vision."

The acquisitions these tech giants have made speak to this apparent flaw.

Google, for instance, acquired DNNresearch, the startup of AI luminary and University of Toronto professor Geoff Hinton, last year to have him apply his "Deep Belief Networks" approach to Google's AI efforts.

In a talk given at the University of Toronto last year, Prof Hinton said he believed more advanced AI should be based on existing approaches, rather than a rethought understanding of the brain.

"The kind of neural inspiration I like is when making it more like the brain works better," the professor said. "There's lots of people who say you ought to make it more like the brain – like Henry Markram [of the European Union's brain simulation project], for example. He says, 'give me a billion dollars and I'll make something like the brain,' but he doesn't actually know how to make it work – he just knows how to make something more and more like the brain.

"That seems to me not the right approach. What we should do is stick with things that actually work and make them more like the brain, and notice when making them more like the brain is actually helpful. There's not much point in making things work worse."

Hawkins vehemently disagrees with this point, and believes that basing approaches on existing methods means Hinton and other AI researchers are not going to be able to imbue their systems with the generality needed for true machine intelligence.

Another influential Googler agrees with Prof Hinton.

"We have neuroscientists in our team so we can be biologically inspired but are not slavish to it," Google Fellow Jeff Dean (creator of MapReduce, the Google File System, and now a figure in Google's own "Brain Project" team, also known as its AI division) told us this year.

"I'm surprised by how few people believe they need to understand how the brain works to build intelligent machines," Hawkins said. "I'm disappointed by this."

Nuts and Boltzmann

Prof Hinton's foundational technologies, for example, are Boltzmann machines – advanced "stochastic recurrent neural network" tools that try to mimic some of the characteristics of the brain. They sit at the heart of his deep belief networks.

"The neurons in a restricted Boltzmann machine are not even close [to the brain] – it's not even an approximation," Hawkins told us.
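To give a sense of how simple the unit Hawkins is criticizing is, here is a minimal sketch of the hidden layer of a restricted Boltzmann machine in its common textbook form: each unit takes a weighted sum of its inputs, squashes it into a probability, and flips a biased coin. The weights, biases, and function names are made up for illustration and are not taken from Prof Hinton's code or from any particular library.

```python
import math
import random


def sigmoid(x):
    """Logistic function mapping a real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def hidden_probabilities(visible, weights, hidden_bias):
    """Return P(h_j = 1 | v) for each hidden unit.

    visible:     list of 0/1 values, one per visible unit
    weights:     weights[i][j] links visible unit i to hidden unit j
    hidden_bias: one bias per hidden unit
    """
    probs = []
    for j, bias in enumerate(hidden_bias):
        total = bias + sum(v * weights[i][j] for i, v in enumerate(visible))
        probs.append(sigmoid(total))
    return probs


def sample_hidden(visible, weights, hidden_bias, rng):
    """Draw a binary state for each hidden unit: the 'stochastic' part."""
    probs = hidden_probabilities(visible, weights, hidden_bias)
    return [1 if rng.random() < p else 0 for p in probs]


# Toy, made-up parameters: 3 visible units, 2 hidden units.
weights = [[0.5, -1.0], [0.0, 2.0], [-0.5, 1.0]]
hidden_bias = [0.0, -1.0]
visible = [1, 0, 1]

rng = random.Random(0)  # fixed seed so repeated runs give the same draw
probs = hidden_probabilities(visible, weights, hidden_bias)
sample = sample_hidden(visible, weights, hidden_bias, rng)
print(probs)
print(sample)
```

The whole "neuron" here is one sum, one squashing function, and one random draw, which is the kind of simplification Hawkins argues is not even an approximation of a biological cell.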

Even Google is not sure which way to bet on how to build an artificial mind, as illustrated by its purchase of UK company DeepMind Technologies earlier this year.

That company's founder, Demis Hassabis, has done detailed work on fundamental neuroscience, and has built technology out of this understanding. In 2010, it was reported that he mentioned both Hawkins' Hierarchical Temporal Memory and Prof Hinton's Deep Belief Networks when giving a talk on viable general artificial intelligence approaches.

Facebook has gone down similar paths by hiring the influential artificial intelligence academic Yann LeCun to help it "predict what a user is going to do next," among other things.

Microsoft has developed significant capabilities as well, with systems like the wannabe Siri-beater Cortana, and various endeavors by the company's research division, MSR.

Though the techniques these various researchers employ differ, they all depend on training a model on a large amount of information, and then selectively retraining it as that information changes.

These AI efforts are built around dealing with problems backed up by large and relatively predictable datasets. This has yielded some incredible inventions, such as reasonable natural language processing, the identification of objects in images, and the tagging of stuff in videos.

It has not yielded, and cannot yield, a framework for a general intelligence, Hawkins claimed, because it lacks the architecture for data apprehension, analysis, retention, and recognition that our own brains have.

His focus on time is why he believes his approach will win – something that the consumer internet giants are slowly waking up to.

It's all about time

"I would say that Hawkins is focusing more on how things unfold over time, which I think is very important," Google's research director Peter Norvig told El Reg via email, "while most of the current deep learning work assumes a static representation, unchanging over time.

"I suspect that as we scale up the applications (ie, from still images to video sequences, and from extracting noun-phrase entities in text to dealing with whole sentences denoting actions), that there will be more emphasis on the unfolding of dynamic processes over time."

A former Googler concurs, with Prof Andrew Ng telling us via email: "Hawkins' work places a huge emphasis on learning from sequences. While most deep learning researchers also think that learning from sequences is important, we just haven't figured out ways to do so that we're happy with yet."

Prof Geoff Hinton echoes this praise. "He has great insights about the types of computation the brain must be doing," he told us – but argued that Hawkins' actual algorithmic contributions have been "disappointing" so far.
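The difference Norvig and Ng describe between a static representation and learning from sequences can be shown with a deliberately tiny toy. The sketch below is not Hierarchical Temporal Memory and not any deep learning system; it simply contrasts a model that only counts how often each symbol appears with one that counts which symbol follows which. All names and the sample stream are invented for illustration.

```python
from collections import Counter, defaultdict


def train_static(sequence):
    """Ignore order: remember only how often each symbol appears."""
    return Counter(sequence)


def train_transitions(sequence):
    """Keep order: count which symbol follows each symbol."""
    transitions = defaultdict(Counter)
    for current, following in zip(sequence, sequence[1:]):
        transitions[current][following] += 1
    return transitions


def predict_static(counts):
    """Always guess the most frequent symbol, whatever came before."""
    return counts.most_common(1)[0][0]


def predict_next(transitions, current):
    """Guess the symbol most often seen after `current`, or None if unseen."""
    followers = transitions.get(current)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# A made-up stream with an obvious temporal pattern: A, then B, then C.
stream = list("ABCABCABCA")
static_model = train_static(stream)
sequence_model = train_transitions(stream)

print(predict_static(static_model))       # same guess regardless of context
print(predict_next(sequence_model, "B"))  # guess depends on what came last
```

On this stream the order-blind model can only ever offer its single most common symbol, while the order-aware one recovers the repeating pattern. Real sequence learning is far harder than counting pairs, which is the gap Ng says researchers have not yet closed to their satisfaction.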