
Nvidia reveals CUDA 6, joins CPU-GPU shared memory party

Tesla headman: 'Biggest pain point' for developers – memory management – is now history

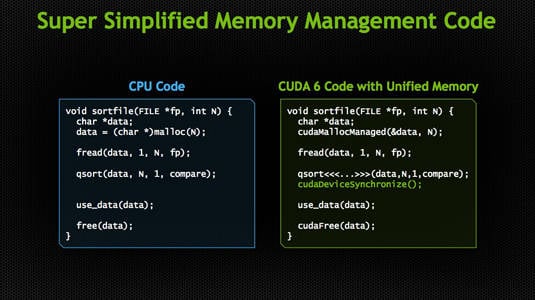

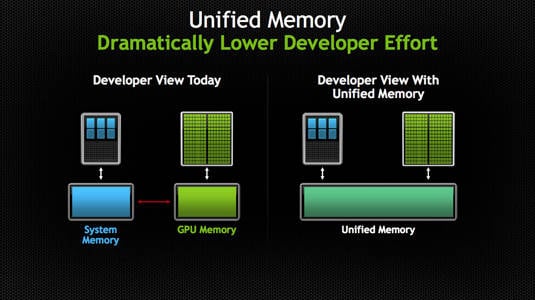

Nvidia has announced the latest version of its GPU programming platform, CUDA 6, which adds a "Unified Memory" capability that, as its name implies, relieves programmers of the trials and tribulations of manually copying data back and forth between separate CPU and GPU memory spaces.

CUDA 6 fools a system's CPU and GPU into thinking they're dipping into the same shared memory bank

"Programmers have always found it hard to program GPUs," Sumit Gupta, the general manager of Nvidia's HPC-focused Tesla biz told The Reg, "and one of the biggest reasons for that – in fact, this is the reason – has been that there were always two memory spaces: the CPU and its memory, and the GPU and its own memory."

Being software, CUDA of course does nothing to physically unite those two memory spaces – the CPU still has its own memory and the GPU has its own chunk. To a programmer using CUDA 6, however, that distinction disappears: all the memory access, delivery, and management goes "underneath the covers," to borrow the phrase Oracle's Nandini Ramani used to describe Java 8's approach to parallel programming at this week's AMD developer conference, APU13.

From the point of view of the developer using CUDA 6, the memory spaces of the CPU and GPU might as well be physically one and the same. "The developer now can just operate on the data," Gupta says.

In other words, if a dev wants to add A to B, and A is in the CPU memory while B is in the GPU memory, the newly lucky dev can now just say "add A to B," and not give a fig about where either bit of data resides – the underlying CUDA 6 plumbing will take care of accessing A and B and munging them together.
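As a rough illustration of that "add A to B" scenario, here is a minimal sketch using `cudaMallocManaged`, the CUDA 6 runtime call that hands back a pointer usable from both CPU and GPU code. The kernel name `add_a_to_b`, the array size, and the fill values are hypothetical and chosen only for the example; this is not code from Nvidia or from Gupta.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical kernel for illustration: b[i] += a[i] for every element.
__global__ void add_a_to_b(const float *a, float *b, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        b[i] += a[i];
    }
}

int main() {
    const int n = 1 << 20;  // assumed size, picked arbitrarily
    float *a = nullptr;
    float *b = nullptr;

    // Managed allocations: the same pointers are valid on the CPU and the GPU.
    if (cudaMallocManaged(&a, n * sizeof(float)) != cudaSuccess ||
        cudaMallocManaged(&b, n * sizeof(float)) != cudaSuccess) {
        fprintf(stderr, "managed allocation failed\n");
        return 1;
    }

    // The CPU fills the arrays directly through the managed pointers.
    for (int i = 0; i < n; ++i) {
        a[i] = 1.0f;
        b[i] = 2.0f;
    }

    // The GPU works on the same pointers; no cudaMemcpy appears anywhere.
    const int threads = 256;
    const int blocks = (n + threads - 1) / threads;
    add_a_to_b<<<blocks, threads>>>(a, b, n);

    // The CPU must wait for the kernel before touching managed data again.
    cudaError_t err = cudaDeviceSynchronize();
    if (err != cudaSuccess) {
        fprintf(stderr, "kernel failed: %s\n", cudaGetErrorString(err));
        return 1;
    }

    printf("b[0] = %.1f\n", b[0]);

    cudaFree(a);
    cudaFree(b);
    return 0;
}
```

The one obligation left to the programmer in this sketch is the `cudaDeviceSynchronize()` call before the CPU reads the result; where the data physically lives at any moment is the runtime's business.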

According to Gupta, this new capability reduces programming effort by almost 50 per cent. Not being a CUDA programmer himself, your Reg reporter will have to wait for reports from the field – or in the article comments – to judge the veracity of the Tesla honcho's assertion.

To support his point, Gupta said, "We have several programmers who have told us that their biggest pain point on day one was always managing the data movement and the memory and the memory management. And by taking care of that, automatically doing that, we've significantly improved programmer productivity."

There is, of course, still some latency involved in moving the data from where, for example, the CPU can work with it to where the GPU can get its hands – or cores – on it, but the developer doesn't have to worry about writing the code to transfer it, nor does the compiler have to deal with the extra lines of code that were previously necessary to accomplish that move.
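For a sense of the "extra lines of code" in question, here is a minimal sketch of the explicit-copy style that CUDA programs have used up to now, with separate host and device buffers and `cudaMemcpy` calls in each direction. The kernel name `add_a_to_b`, the `h_`/`d_` variable names, and the array size are hypothetical, picked only for the example.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Hypothetical kernel for illustration: b[i] += a[i] for every element.
__global__ void add_a_to_b(const float *a, float *b, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        b[i] += a[i];
    }
}

int main() {
    const int n = 1 << 20;  // assumed size, picked arbitrarily
    const size_t bytes = n * sizeof(float);

    // Host-side buffers in CPU memory.
    float *h_a = static_cast<float *>(malloc(bytes));
    float *h_b = static_cast<float *>(malloc(bytes));
    if (h_a == nullptr || h_b == nullptr) {
        fprintf(stderr, "host allocation failed\n");
        return 1;
    }
    for (int i = 0; i < n; ++i) {
        h_a[i] = 1.0f;
        h_b[i] = 2.0f;
    }

    // Separate device-side buffers in GPU memory.
    float *d_a = nullptr;
    float *d_b = nullptr;
    if (cudaMalloc(&d_a, bytes) != cudaSuccess ||
        cudaMalloc(&d_b, bytes) != cudaSuccess) {
        fprintf(stderr, "device allocation failed\n");
        return 1;
    }

    // Manual transfer, CPU memory to GPU memory.
    cudaMemcpy(d_a, h_a, bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(d_b, h_b, bytes, cudaMemcpyHostToDevice);

    const int threads = 256;
    const int blocks = (n + threads - 1) / threads;
    add_a_to_b<<<blocks, threads>>>(d_a, d_b, n);

    // Manual transfer back; this copy waits for the kernel to finish first.
    cudaError_t err = cudaMemcpy(h_b, d_b, bytes, cudaMemcpyDeviceToHost);
    if (err != cudaSuccess) {
        fprintf(stderr, "copy back failed: %s\n", cudaGetErrorString(err));
        return 1;
    }

    printf("b[0] = %.1f\n", h_b[0]);

    cudaFree(d_a);
    cudaFree(d_b);
    free(h_a);
    free(h_b);
    return 0;
}
```

Every array exists twice here, and the programmer has to keep the two copies in step by hand. That bookkeeping, not the transfer latency itself, is what Unified Memory is said to remove.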

CUDA 6 adds a few other niceties, such as new drop-in libraries that replace some CPU libraries with GPU libraries, and some redesigned GPU libraries that automatically scale across up to eight GPUs in a single node.

But Gupta told us that what devs have been clamoring for most avidly is to be freed from memory-management chores, and that freedom is what Unified Memory provides.

With CUDA 6, he said, "The programmer just blissfully programs." ®

Bootnote

In a related development, Mentor Graphics has announced that it is adding support for OpenACC 2.0 to the GCC compiler, thus giving that industry-standard tool the ability to generate assembly-level instructions for Nvidia GPUs.