
EMC's XtremIO array: Everything we know about new all-flash box

Get your pre-launch skinny here

Mega-vendor EMC is going to launch its XtremIO all-flash array with general availability on 14 November. It has been on limited or directed availability for a few months and there's a fair amount of information coming out about it.

Here’s what we know so far.

The array is based on acquired XtremIO technology and features:

- 6U X-Brick nodes with N-way active controllers, 250,000 random 4K read IOPS and sub-millisecond response time;

- X-Brick has 25 x 400GB eMLC SSDs, 10TB raw capacity, 7TB usable;

- Controllers are 1U dual-socket servers;

- High-availability with non-disruptive XIOS software and firmware upgrades, hot-swap upgrades, and no single point of failure;

- 4 x 8Gbit/s Fibre Channel and 4 x 10GbitE iSCSI block access interfaces;

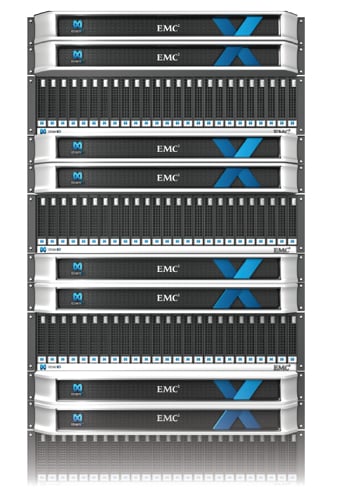

Four X-Bricks in XtremIO array

- Scalability to 4 clustered X-Bricks, linked by dual InfiniBand, delivers 1 million IOPS;

- Scalability to 8 X-Bricks has been spoken of, with an implied 2 million IOPS;

- Future scalability to 16 X-Bricks and beyond;

- X-Bricks of different capacities can be mixed in a cluster;

- Integrated workload and data balancing across SSDs and controllers;

- Always-on integrated inline cross-cluster deduplication using 4KB blocks;

- Thin-provisioning using 4KB allocation and with no fragmentation or reclamation penalty;

- VMware VAAI integration and VMware multipath I/O support, with EMC claiming that the array is “the only all-flash array to fully integrate” with VAAI;

- Data volumes are thin and wide-striped across whole system;

- In-memory metadata management with metadata lookups not hitting SSDs;

- Deduplication-aware snapshot and cloning features – but no replication until 2014, and then it will be asynchronous; and

- Management through a GUI, vCenter plugin, CLI or REST API.
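The headline figures in the list above can be cross-checked with some simple arithmetic. This is only a rough sketch: the constant and function names are ours, and the linear IOPS scaling is the assumption implied by EMC's numbers rather than a measured result.

```python
# Figures quoted in the article; names are illustrative only.
SSD_COUNT = 25            # SSDs per X-Brick
SSD_GB = 400              # capacity of each eMLC SSD, in GB
USABLE_TB = 7             # usable capacity per X-Brick
IOPS_PER_BRICK = 250_000  # random 4K read IOPS per X-Brick
BRICK_RACK_UNITS = 6      # rack units per X-Brick

# Raw capacity per X-Brick: 25 x 400GB = 10TB.
raw_tb = SSD_COUNT * SSD_GB / 1000

# Fraction of raw capacity that is usable.
usable_fraction = USABLE_TB / raw_tb


def cluster_iops(bricks: int) -> int:
    """Aggregate IOPS, ASSUMING perfectly linear scaling across X-Bricks."""
    return bricks * IOPS_PER_BRICK


def naive_rack_units(bricks: int) -> int:
    """Rack space if every X-Brick simply took its full 6U."""
    return bricks * BRICK_RACK_UNITS


print(raw_tb, usable_fraction)
print(cluster_iops(4), cluster_iops(8))
print(naive_rack_units(2), naive_rack_units(4))
```

On these numbers, four X-Bricks give the quoted 1 million IOPS and eight give the implied 2 million. The naive rack-space sum for four X-Bricks comes to 24U, which is 2U more than the figure quoted below.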

Oddly, two X-Bricks take up 12U of rackspace but four take up only 22U.

Flash-specific XtremIO Data Protection (XDP) is included and, with it, SSDs can fail in-place with no data loss. XDP is “self-healing,” with double-parity data protection and needs “just an eight per cent capacity overhead.”

If an SSD fails, the rebuild is distributed across the other SSDs and is content-aware. For example, only user data is copied, not free space. XDP doesn’t require any configuration, nor does it need hot spare drives. Instead, we understand, it uses "hot spaces" – free space in the array.

Data is stored in 4KB chunks using hashes. Hash comparisons are used for deduplication and hash values are used for distributing writes. We understand each controller runs its own copy of XIOS with the hash range distributed across XIOS copies – so a block's hash value affects where it is placed. Data is only written when a full stripe exists. The idea is to reduce write levels and prevent hot spots developing.
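To make the idea concrete, here is a minimal sketch of content-addressed storage with hash-range placement. It is not XIOS code: the `Controller` and `Cluster` classes are hypothetical, SHA-1 is our assumption (EMC has not said which hash is used), and full-stripe write batching, overwrites and space release are left out.

```python
import hashlib

BLOCK_SIZE = 4096  # 4KB chunks, as described in the article


class Controller:
    """Hypothetical controller owning one slice of the hash range."""

    def __init__(self, name: str):
        self.name = name
        self.blocks = {}  # fingerprint -> data, each unique block stored once

    def store(self, fingerprint: bytes, data: bytes) -> bool:
        """Store a block; return False if it was a duplicate."""
        if fingerprint in self.blocks:
            return False  # hash already known: deduplicated, nothing written
        self.blocks[fingerprint] = data
        return True


class Cluster:
    def __init__(self, controller_count: int):
        self.controllers = [Controller(f"ctrl-{i}") for i in range(controller_count)]
        self.volume_map = {}  # (volume, block address) -> fingerprint

    def owner(self, fingerprint: bytes) -> Controller:
        # Split the hash range evenly using the first byte (0..255), so
        # placement depends on content, not on the logical address.
        index = fingerprint[0] * len(self.controllers) // 256
        return self.controllers[index]

    def write(self, volume: str, lba: int, data: bytes) -> bool:
        if len(data) != BLOCK_SIZE:
            raise ValueError("this sketch only handles whole 4KB blocks")
        fingerprint = hashlib.sha1(data).digest()  # ASSUMED hash function
        newly_stored = self.owner(fingerprint).store(fingerprint, data)
        self.volume_map[(volume, lba)] = fingerprint
        return newly_stored

    def read(self, volume: str, lba: int) -> bytes:
        fingerprint = self.volume_map[(volume, lba)]
        return self.owner(fingerprint).blocks[fingerprint]


cluster = Cluster(controller_count=4)
zeros = bytes(BLOCK_SIZE)
ones = b"\x01" * BLOCK_SIZE
first = cluster.write("vol1", 0, zeros)   # new content, stored
second = cluster.write("vol1", 1, zeros)  # same content, deduplicated
third = cluster.write("vol2", 0, ones)    # different content, stored
unique_blocks = sum(len(c.blocks) for c in cluster.controllers)
```

Because a reasonable hash spreads content evenly across its range, writes should spread across controllers regardless of which volume or address they target, which is the hot-spot avoidance the paragraph above describes.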

A front-end VPLEX box can be used to provide replication now, along with RecoverPoint. XtremIO may not get synchronous replication as that would affect performance.

We’re told one X-Brick can support 2,000 or more (Citrix) virtual desktops. A reference architecture for XtremIO-supported virtual desktops can be found here (31-page PDF).

A VMware View VDI reference architecture can be found here (30-page PDF) and it supports 7,000 virtual desktops. The document says 1,000 linked clones were deployed in 75 minutes. Two X-Bricks were included in a Vblock Specialized System for Extreme Applications, along with an 8-node Isilon S-Series NAS storage system for user data and Horizon View persona data, and 48 Cisco UCS server blades.

There has been no mention of tiering the XtremIO arrays with back-end VNX/VMAX/Isilon arrays with automated data movement using EMC’s FAST technology. That is most probably a roadmap feature. ®