The 'third era' of app development will be fast, simple, and compact

Will Intel and Nvidia join the HSA party, or insist on going it alone?

Hot Chips At the annual Hot Chips symposium on high-performance chippery on Sunday, the assembled chipheads were led through a four-hour deep dive into the latest developments on marrying the power of CPUs, GPUs, DSPs, DMA engines, codecs, and other accelerators through the development of an open source programming model.

The tutorial was conducted by members of the HSA – heterogeneous system architecture – Foundation, a consortium of SoC vendors and IP designers, software companies, academics, and others, including such heavyweights as ARM, AMD, and Samsung. The mission of the Foundation, founded last June, is "to make it dramatically easier to program heterogeneous parallel devices."

As the HSA Foundation explains on its website, "We are looking to bring about applications that blend scalar processing on the CPU, parallel processing on the GPU, and optimized processing of DSP via high bandwidth shared memory access with greater application performance at low power consumption."

Last Thursday, HSA Foundation president and AMD corporate fellow Phil Rogers provided reporters with a pre-briefing on the Hot Chips tutorial, and said the holy grail of transparent "write once, use everywhere" programming for shared-memory heterogeneous systems appears to be on the horizon.

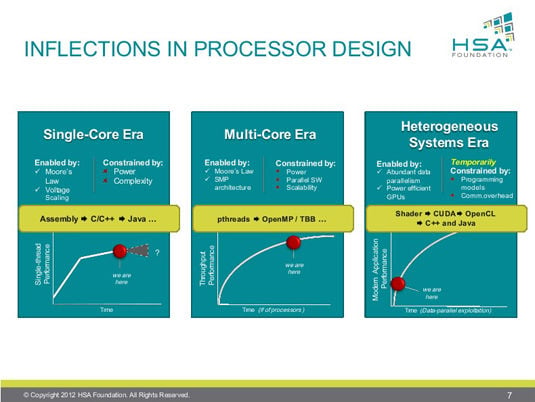

According to Rogers, heterogeneous computing is nothing less than the third era of computing, the first two being the single-core era and the multi-core era. In each era of computing, he said, the first programming models were hard to use but were able to harness the full performance of the chips.

"In the case of single core," Rogers said, "we started with assembly code, then we went to much better abstractions: structured languages, object-oriented languages, managed languages. At each stage you give up a little bit of performance for massive improvements in productivity, and the platform volumes grow extremely fast as programmers can use the platforms much more efficiently."

The same thing happened in the multi-core era, he said, moving from direct-thread programming to directive programming to task-parallel runtimes. In heterogeneous programming, however, that progression is just beginning. "We've gone from people writing shaders directly," he said, to proprietary languages such as CUDA, to open-standard languages such as OpenCL and C++ AMP.

"But ultimately," he said, "where the platform is going with HSA is to full programming languages like C++ and Java and many others."

Exactly how HSA will get there is not yet fully defined, but a number of high-level features are accepted. Unified memory addressing across all processor types, for example, is a key feature of HSA. "It's fundamental that we can allocate memory on one processor," Rogers said, "pass a pointer to another processor, and execute on that data – we move the compute rather than the data."
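To make "we move the compute rather than the data" concrete, here is a minimal C++ sketch that contrasts a copy-based offload with a shared-pointer one. It is an analogy only and not HSA code: the function names (`double_in_place`, `offload_with_copies`, `offload_with_shared_pointer`) are hypothetical, and the "device" is simulated by an ordinary function running on the CPU.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// The work both paths perform: double every element in place.
// Stands in for a kernel that could run on any processor type.
void double_in_place(float* data, std::size_t n) {
    assert(data != nullptr || n == 0);
    for (std::size_t i = 0; i < n; ++i) {
        data[i] *= 2.0f;
    }
}

// Separate address spaces (assumed model): stage the data into a
// device-side buffer, compute there, then copy the results back.
// Returns the number of bytes moved between the two buffers.
std::size_t offload_with_copies(std::vector<float>& host) {
    std::vector<float> device(host.size());  // hypothetical device memory
    std::copy(host.begin(), host.end(), device.begin());
    double_in_place(device.data(), device.size());
    std::copy(device.begin(), device.end(), host.begin());
    return 2 * host.size() * sizeof(float);
}

// Unified addressing (assumed model): hand over the pointer and let
// the other processor work on the data where it already lives.
std::size_t offload_with_shared_pointer(std::vector<float>& host) {
    double_in_place(host.data(), host.size());
    return 0;  // nothing was copied
}
```

Both paths leave the same values in the buffer; the difference is that the second one moves no bytes, which is the saving Rogers describes.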

Full memory coherency, for another example, eliminates the need for software to manage caches. An architected queuing language (AQL), Rogers said, will allow an application or a library to dispatch packets to a GPU in what he called a "vendor-agnostic" manner. To enable preemption and context switching for a variety of applications and application types, HSA will support time-slicing throughout the entire collection of processor types.
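The packet-dispatch idea can be sketched in a few lines of C++. This is a toy model under stated assumptions, not the real HSA packet format or runtime: `DispatchPacket`, `DispatchQueue`, `drain`, and the two kernels are hypothetical names, and the "packet processor" is just a loop on the CPU.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

// Hypothetical packet: which kernel to run, and on which data.
// The layout is illustrative and does not match any real specification.
struct DispatchPacket {
    void (*kernel)(std::int32_t* data, std::size_t n);
    std::int32_t* data;
    std::size_t n;
};

// Hypothetical queue that an application or library writes packets into.
class DispatchQueue {
public:
    void enqueue(const DispatchPacket& packet) {
        assert(packet.kernel != nullptr);
        packets_.push_back(packet);
    }

    // Stand-in for the device's packet processor: runs the queued
    // packets in FIFO order and returns how many were executed.
    std::size_t drain() {
        std::size_t executed = 0;
        while (!packets_.empty()) {
            DispatchPacket packet = packets_.front();
            packets_.pop_front();
            packet.kernel(packet.data, packet.n);
            ++executed;
        }
        return executed;
    }

private:
    std::deque<DispatchPacket> packets_;
};

void add_one(std::int32_t* data, std::size_t n) {
    for (std::size_t i = 0; i < n; ++i) data[i] += 1;
}

void times_three(std::int32_t* data, std::size_t n) {
    for (std::size_t i = 0; i < n; ++i) data[i] *= 3;
}

// Usage: queue two kernels over the same buffer, then let the
// "device" drain the queue. Starting from {1, 2, 3}, add_one runs
// first and times_three second.
std::vector<std::int32_t> run_dispatch_demo() {
    std::vector<std::int32_t> values{1, 2, 3};
    DispatchQueue queue;
    queue.enqueue({add_one, values.data(), values.size()});
    queue.enqueue({times_three, values.data(), values.size()});
    queue.drain();
    return values;
}
```

The application only fills in packets and never calls a vendor-specific driver entry point, which is the sense in which such a queue could be "vendor-agnostic"; how a real implementation signals the hardware is outside the scope of this sketch.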

Rogers took pains to emphasize that HSA is "defined from the outset" to be an open platform, with its specifications owned by the Foundation and delivered by means of a royalty-free standard. "It's designed from the ground up to be ISA-agnostic for both the CPU and the GPU – obviously that's very important," he said, a shared goal that's reflected in the range of hardware, operating system, tools, and middleware companies that have signed on as Foundation members.