Top500: Supercomputing sea change incomplete, unpredictable

A mix of CPU and hybrid systems jockey for HPC hegemony – and lucre

Dicing and slicing the trends

As El Reg goes to press, the online database for the Top500 list, which can automagically generate sublists and pretty pictures to describe interesting subsets of the data, has not yet been updated with info from the June list. But there are some general data points that the creators of the Top500 list – Hans Meuer of the University of Mannheim, Erich Strohmaier and Horst Simon of Lawrence Berkeley National Laboratory, and Jack Dongarra of the University of Tennessee – have put together ahead of the Monday release of the list at the International Supercomputing Conference in Leipzig, Germany.

First of all, the amount of aggregate floppage on the Top500 list continues to swell like government debt in Western economies. A year ago, the Top500 machines comprised a total of 123 petaflops of sustained Linpack performance; six months ago that jumped to 162 petaflops. With the June 2013 list, it's now 223 petaflops. At this pace, it will be an aggregate of an exaflops in about two years.
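For those who want to check the back-of-the-envelope math, here is a minimal sketch of that projection. It assumes the aggregate keeps compounding at a constant rate, which is an assumption and not something the Top500 list makers promise, and the function name `years_to_target` is made up for illustration. The petaflops figures are the ones quoted above.

```python
import math

def years_to_target(start_pf, end_pf, span_years, target_pf):
    """Years beyond the end point needed to reach target_pf,
    assuming constant compound growth (an assumption, not a forecast)."""
    # Annualized growth factor implied by the two data points
    annual_growth = (end_pf / start_pf) ** (1.0 / span_years)
    # Solve end_pf * annual_growth ** t = target_pf for t
    return math.log(target_pf / end_pf) / math.log(annual_growth)

EXAFLOPS_IN_PF = 1000.0

# June 2012 to June 2013: 123 to 223 petaflops over one year
yearly_pace = years_to_target(123.0, 223.0, 1.0, EXAFLOPS_IN_PF)

# November 2012 to June 2013: 162 to 223 petaflops over half a year
half_year_pace = years_to_target(162.0, 223.0, 0.5, EXAFLOPS_IN_PF)

print(f"{yearly_pace:.1f} years at the 12-month pace")
print(f"{half_year_pace:.1f} years at the 6-month pace")
```

Either way the answer lands between two and three years, so "about two years" is a slightly optimistic rounding rather than a wild guess.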

This is an astounding amount of performance, especially if it could all be brought to bear at once – and that's the dream every HPC center is chasing. And they want to get there by 2020 or so in a 25 megawatt power budget, probably for something that costs a hell of a lot less than billions of dollars.

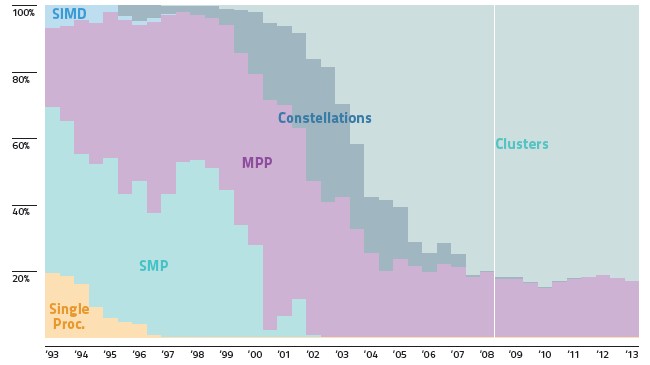

HPC systems are pretty much either clusters or massively parallel machines

This server hack wants a sailboat, a little red wagon, and a pony for Christmas, too. What the hell, throw in world peace while you are at it. (It's far easier to make the exascale design goals, let's face that fact right now. Particularly with supercomputers being a kind of weapon in their own right, as well as a means to make other kinds of weapons. But I digress. Frequently. And with enthusiasm.)

A petaflops has become kinda ho-hum, with 26 machines breaking through that barrier, and it is going to take 100 petaflops of sustained performance to make people jump.

One of the things that's driving up performance is the ever-increasing number of cores in modern processors. Across the Top500 list, 88 per cent of the machines have six or more compute cores per socket, and 67 per cent have eight or more.

A year ago, the average system on the list had 26,327 cores (including both CPUs and coprocessors of any kind) and that has now grown to 38,700. Expect that number to grow even more as heavily-cored coprocessors migrate down the list, with third-party software increasingly tuned to use accelerators and shops with custom code gaining experience with offloading work to coprocessors.

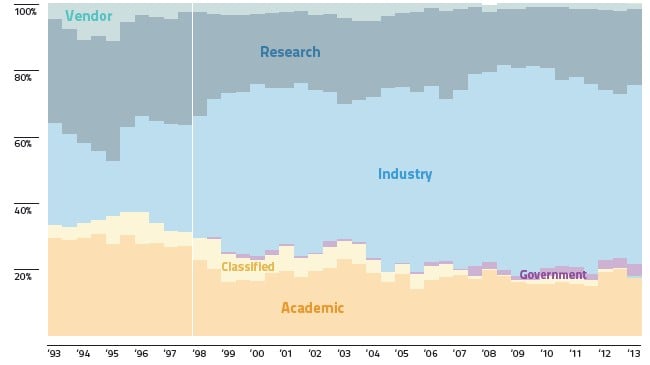

Corporations dominate the system count on the Top500, but governments dominate the flops

If you look at it by system count, Intel processors utterly dominate the Top500 with 403 systems on the list (80.6 per cent of machines). AMD comes in second with its Opteron CPUs being used in 50 machines (10 per cent); IBM is third with 42 systems using one or another variant of its Power chips (8.4 per cent).

Oddly enough, the number of machines using a coprocessor of any kind dropped from 62 on the November 2012 list to 53 machines on the current list. Of these, 11 are using Intel's Xeon Phi coprocessors, three use AMD Radeon graphics cards, and 39 use Nvidia Tesla cards.
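The percentages here are simple shares of system counts, and a quick tally makes it easy to see what is left over. The sketch below uses only the counts quoted above; the variable and function names are illustrative, and the split of the accelerated machines by vendor share is derived arithmetic, not a figure from the list makers.

```python
TOTAL_SYSTEMS = 500

# System counts for the June 2013 list, as quoted in the text
cpu_counts = {"Intel": 403, "AMD Opteron": 50, "IBM Power": 42}
accel_counts = {"Nvidia Tesla": 39, "Intel Xeon Phi": 11, "AMD Radeon": 3}

def shares(counts, total):
    """Percentage share of each entry, rounded to one decimal place."""
    return {name: round(100.0 * n / total, 1) for name, n in counts.items()}

# Share of all 500 machines by processor family
cpu_shares = shares(cpu_counts, TOTAL_SYSTEMS)

# Machines using some other processor family
other_cpus = TOTAL_SYSTEMS - sum(cpu_counts.values())

# Accelerated machines, and each vendor's slice of that subset
accel_total = sum(accel_counts.values())
accel_shares = shares(accel_counts, accel_total)

print(cpu_shares, "other:", other_cpus)
print(accel_total, accel_shares)
```

The three accelerator counts add up to the 53 machines cited, and the three processor families account for all but five systems on the list.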

We can't do a full drilldown on the interconnects used in the Top500 yet because the list generator has not yet been updated with the data from the June 2013 list. But the summary data for the list gives us some inkling of what is going on in terms of the networks that are used to lash nodes together so they can share work and results.

In the November 2012 list, InfiniBand switches of one kind or another were used in 223 systems (44.6 per cent). Ethernet is coming on strong – as it does from time to time – with a total of 216 machines on the June 2013 list (43.2 per cent), up from 189 machines on the list six months ago. The big jump for Ethernet, the Top500 list makers said, came from the adoption of 10Gb/sec Ethernet on 75 of those systems.

In terms of countries, the United States still leads, with 253 of the 500 systems. China now has 65 systems on the list (down a bit) and Japan has 30 (also down a bit). The United Kingdom has 29 supercomputers that made the cut this time around, which means at least 96.6 teraflops of sustained performance on the Linpack test. France has 23 machines and Germany has 19. Across all countries, Europe has 112 systems, up from 105 on the November list, but that is still lower than the number of machines in Asia, which fell by five systems to 118 this time around.

People complain sometimes about the Linpack test – as happens with all benchmark tests. Dongarra teamed up with Jim Bunch, Cleve Moler, and Gilbert Stewart to create the Linpack suite back in the 1970s to measure the performance of machines running vector and matrix calculations.

Dongarra kept a running tally of machines used on the test that he would put out once a year (he graciously used to fax it to me every year – and it was a long document), with everything from the original IBM and Apple PCs running Linpack all the way up to the hottest SMPs and MPPs stacked up from first to last in the order of their performance. This list was even more fun because it showed all kinds of machines – hundreds of them – running Linpack, rather than different scales of a handful of different systems running the test.

Linpack is not being used as a sole means of making a purchasing decision on an HPC system, and it never claimed to be the best test to measure all relative performance differences between supercomputers of different scales and architectures. But it's fun – and we sure do need that in the dry supercomputing arena. What's more, the Linpack test gets people thinking about different ways of glomming computing capacity together.

Linpack is also good for spotting trends in system design and projecting how they might be more widely adopted in the broader HPC market. That certainly was the case with federated SMP systems taking on and beating vector machines, and Linux-based clusters taking on everything a decade and a half ago.

And we might be seeing the next wave coming with accelerators. No matter what anyone tells you today, we really won't know until a couple of years from now if accelerators are the answer. ®