
Is it time for the great Jihad against networked storage?

Big boys look wide open with eyes wide shut

Blocks and Files: Dheeraj Pandy is running Nutanix as if the company is on a crusade against networked storage. Data delivery latency from networked storage is plain unacceptable, it seems, and clustered virtualised servers should run and present their local storage as part of a pool.

There's more, of course: big-iron converged systems are wrong too. The way to go is to grow IT by embracing scale-out peer-to-peer modules rather than keeping separate silos for compute and for storage. Bundling and mildly integrating separate server, storage and networking products under a single SKU is not the way to go either, in Pandy land; that amounts to no more than three silos in a SKU wrapper. This approach is just skewed.

Nutanix reckons it is the fastest growing IT startup ever at this stage in its growth, just three and a half years old and with an $80 million annual run rate.

Should networked storage suppliers be worried?

Basically Nutanix uses a VSA (Virtual Storage Appliance) approach to storage with each clusterable 4-node block contributing its storage to a pool run by the Nutanix Distributed File System (NDFS). The file-based storage features VM-centric snapshots, array-based cloning, DR, compression, error-detection, and thin provisioning. Snaps and clones are instantaneous and performed using sub block-level change tracking at the VM and file level.

It is also multi-tiered:-

- The hottest data is stored on Fusion-io PCIe flash cards

- The next hottest is stored on SATA SSDs

- Warm data is stored on 1TB SATA hard disk drives

- Cold data can be sent to a remote - yes, networked - filer or to the cloud.
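To make the tiering idea concrete, here is a minimal Python sketch of placement by data "temperature". It is an illustration only: the article does not say how NDFS decides where data lives, so the `pick_tier` function and the access-rate thresholds are hypothetical, and only the four tier names come from the list above.

```python
from enum import Enum


class Tier(Enum):
    # Tier names taken from the list above.
    PCIE_FLASH = "Fusion-io PCIe flash"
    SATA_SSD = "SATA SSD"
    SATA_HDD = "1TB SATA hard disk drive"
    REMOTE = "remote filer or cloud"


# Hypothetical thresholds (accesses per hour), hottest first.
# These numbers are invented for illustration, not Nutanix's policy.
HYPOTHETICAL_THRESHOLDS = [
    (1000, Tier.PCIE_FLASH),
    (100, Tier.SATA_SSD),
    (1, Tier.SATA_HDD),
]


def pick_tier(accesses_per_hour: float) -> Tier:
    """Return the first tier whose minimum access rate is met."""
    for minimum, tier in HYPOTHETICAL_THRESHOLDS:
        if accesses_per_hour >= minimum:
            return tier
    # Anything colder than the last threshold leaves the cluster.
    return Tier.REMOTE


if __name__ == "__main__":
    for rate in (5000, 250, 3, 0):
        print(rate, "->", pick_tier(rate).value)
```

A real system would presumably also weigh capacity pressure on each tier and migrate data as it cools; this sketch only shows the hot-to-cold ordering.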

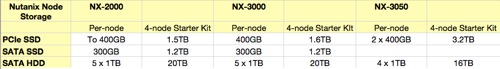

Nodes combine compute, storage and network ports and there are three kinds of node, each with different storage capabilities, as the table illustrates:-

Storage on Nutanix nodes.

Storage expansion

What strikes you is just how limited this is. You can't separately scale compute or storage resources as far as I can see from checking Nutanix' website. Only 1TB disk drives are available and we guess this is to maximise disk I/O through having lots of spindles. Yet Nutanix makes a point of saying its systems can be used for Big Data. Well, okay then, but if you wanted to chew through 1PB of data you'd need 45 NX-3000 nodes, eleven 4-node blocks plus one more node, and you might feel that's a bit CPU heavy.
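The node-and-block arithmetic here can be sketched in a few lines of Python. The four-nodes-per-block figure comes from the article. The per-node capacity is left as a parameter because the text does not state it, and the 25TB value in the usage lines is purely hypothetical.

```python
import math

NODES_PER_BLOCK = 4  # from the article: each block holds four nodes


def nodes_needed(dataset_tb: float, usable_tb_per_node: float) -> int:
    """Whole nodes required to hold dataset_tb, ignoring replication overhead."""
    return math.ceil(dataset_tb / usable_tb_per_node)


def blocks_and_spare(nodes: int) -> tuple[int, int]:
    """Split a node count into full 4-node blocks plus leftover nodes."""
    return divmod(nodes, NODES_PER_BLOCK)


if __name__ == "__main__":
    # The article's figure: 45 nodes is eleven full blocks plus one node.
    print(blocks_and_spare(45))  # (11, 1)

    # Hypothetical per-node capacity of 25TB, for illustration only.
    n = nodes_needed(1000, 25)
    print(n, blocks_and_spare(n))  # 40 (10, 0)
```

Because compute and storage scale together, every extra node bought for capacity also brings CPU, which is the point being made about a CPU-heavy configuration.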

It would be nice if there was a storage expansion tray capability per node for situations where the data is definitely big but the compute is not. For example you could have a compute-reduced 2U enclosure with the same 20 1TB drives, and so double up on disk capacity per node. Or you could go 4U and have 20 x 3.5-in drives with up to 4TB capacity/drive. There are a number of ways you could spin this.

You could take a similar tack with the compute resource and strengthen that at the expense of storage capacity. Then Nutanix would be able to scale compute and storage separately.

Pandy tells Blocks and Files about a forthcoming system and the use of Fusion-io's cost-reduced ioScale flash cards:

"We are using ioScale to power the largest converged infrastructure in the world. A 4-figure large cluster with a single fabric. [The] 6000 will actually be using SATA SSDs from Intel (s3700). But we'll keep our options open on [a future Fusion-io] ioDrive 3."

We asked him about PernixData and what he thought of that startup.

"Pernix is good people, but they need to cross the chasm and figure out whether they can build a true-blue distributed file system with all enterprise-grade data management capabilities. Otherwise, it becomes harder to not be compared to ioTurbine, FlashSoft, VFCache, vFlash, and a myriad of me-too's that also do caching. Accelerators sitting as middlemen have never been billion dollar companies, period. Performance is only a part of what customers want to solve for. Peace of mind - reliability, availability, simplicity of management - is just as important in storage. And that translates to a whole bunch of NetApp-class features that then demand a real clustered file system."

Deduplication and mainstream competition

One other apparent lack in the storage area is deduplication. It may be that compression offers "good enough" storage optimisation but, equally, deduplication can increase storage efficiency, particularly in applications such as VDI and also across a Nutanix cluster generally, where there must be lots of repeated data in all the hundreds of VMs that will be running.
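Why would many similar VMs dedupe well? A minimal Python sketch of fixed-size block deduplication shows the effect. This is a generic technique, not a description of anything Nutanix ships; the `dedup_ratio` function, the 4KB chunk size and the toy "VM images" are all assumptions made for illustration.

```python
import hashlib


def dedup_ratio(volumes: list[bytes], chunk_size: int = 4096) -> float:
    """Ratio of total chunks to unique chunks across all volumes."""
    total = 0
    unique = set()
    for data in volumes:
        for offset in range(0, len(data), chunk_size):
            chunk = data[offset:offset + chunk_size]
            total += 1
            # Identical chunks hash to the same digest and are stored once.
            unique.add(hashlib.sha256(chunk).digest())
    return total / len(unique) if unique else 1.0


if __name__ == "__main__":
    # Toy example: three VMs share a two-chunk "OS image" and each
    # has one chunk of its own data.
    os_image = b"A" * 4096 + b"B" * 4096
    vms = [os_image + bytes([marker]) * 4096 for marker in (1, 2, 3)]
    print(dedup_ratio(vms))  # 9 chunks stored as 5 unique -> 1.8
```

The more VMs share the same base image, the higher the ratio climbs, which is why VDI is the usual showcase for deduplication.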

To return to our starting point, should mainstream networked storage vendors be worried about Nutanix?

If they build themselves a clusterable server with VSA software in it, such as an HP BladeSystem with P4000 VSA software, server flash, an SSD or two and some small form factor disk drives, then they have a Nutanix-like building block and can take it from there with pricing and packaging.

I would have thought Dell, HP and IBM could do this relatively easily and give the resulting box to their channel and make life harder for Nutanix' channel partners. EMC, NetApp and HDS would have to partner with a server vendor, Lenovo maybe for EMC. Cisco would need a storage partner and either EMC or NetApp could fulfill that role.

Then, of course, there is Nutanix' software, its main value I feel, but the majors could build their own equivalent if they had a notion to.

What I'm saying is that Nutanix is not "that" disruptive unless the mainstream vendors let it be, and if they do then they deserve everything Nutanix throws at them.

A final point. Nutanix' customers may not be on a "destroy networked storage" jihad. Instead they could just be liking Nutanix a whole lot because it is a great system on which to run their VMs and scale up their server virtualisation efforts. How Nutanix actually designs and uses its storage they couldn't care less about. It works; it's great; I'll have another block please: that's all they might care about.

Clearly Nutanix is on a roll and growing its business fast. The mainstream vendors had better be watching it and working out potential neutralisation strategies because, if they let it, this could be a great company. ®