
Honey, I BLEW UP the International SPACE STATION - in full 3D

CTO's radical rig blasts Sandra Bullock into Spaaace

A few years ago the idea of accelerating a BlueArc filer would have seemed bizarre; it's got its own hardware acceleration. But now media special effects processing can be so mind-blowingly intensive that the hardware-accelerated filer itself needs accelerating.

The case illustrating this point is the new George Clooney film Gravity, directed by Alfonso Cuarón in 3D, in which Gorgeous George and Sexy Sandra Bullock play astronauts stranded in orbit several hundred miles above the Earth when the International Space Station blows up.

A trailer has been released and a still from it is shown below. Well now, let's count the various fragments of the Space Station which have to be represented in each frame and have their trajectories and lighting effects computed. Play the trailer, all 1 minute 31 secs of it, and imagine the compute power and storage access bandwidth needed to create, craft and render that. And that's just one and a half minutes of a movie that's going to run for one and a half hours or so.
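To put rough numbers on that, here is a back-of-the-envelope count of rendered images. It assumes the standard cinema rate of 24 frames per second and one rendered view per eye for stereo 3D; both are assumptions for illustration, not figures from Framestore, and it ignores the fact that not every shot is fully CG.

```python
FPS = 24    # assumed: standard cinema frame rate
EYES = 2    # assumed: one rendered view per eye for stereo 3D

trailer_seconds = 1 * 60 + 31   # the 1 min 31 s trailer
film_seconds = 90 * 60          # "one and a half hours or so"

trailer_images = trailer_seconds * FPS * EYES
film_images = film_seconds * FPS * EYES

print(f"Trailer: {trailer_images:,} images; film: {film_images:,} images")
print(f"The film is roughly {film_images / trailer_images:.0f}x the trailer")
```

Every one of those images goes through the full rendering process, which is why the storage feeding the render farm matters so much.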

We know the basics of how this is done: a bunch of SFX artists use workstations to craft each frame and then the frames are rendered into the movie, with each frame undergoing an intricate and highly involved rendering process to create the image from basic components that are tweaked, twiddled, and twisted to get the uber-realistic finished result.

Gravity's SFX have been produced by the UK's Framestore, which has great street cred in the field, having worked on Avatar, Skyfall, and Harry Potter and the Deathly Hallows. It previously used Infortrend storage, which we wrote about in 2008. The Gravity work involved HDS (BlueArc) filers.

Framestore's Chief Technical Officer is Steve MacPherson. He says Gravity is "the most computationally demanding film Framestore has ever done … Framestore had an unprecedented level of CG imagery being created and a huge number of people working on this material simultaneously."

The firm began working on Gravity more than two years ago and saw that it would need more than 15,000 processor cores at peak rendering load. Framestore's IT setup has a central storage resource providing file access to both the render nodes and the artists' workstations.

As the rendering workload builds up, it can suck up all the system's storage bandwidth, leaving nothing for the artists. This isn't acceptable: the artists were also working on films such as Sherlock Holmes: Game of Shadows and Lincoln, which couldn't simply stop. But nor is it OK to buy a whole new storage setup just for the rendering.
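A toy model shows why an unpartitioned pool starves the artists. It is a minimal sketch assuming the shared filer has no quality-of-service controls and simply splits its bandwidth in proportion to demand; the capacity, artist demand and per-core read rate are hypothetical numbers chosen for illustration, not Framestore measurements.

```python
def allocate(capacity_gbps, render_demand_gbps, artist_demand_gbps):
    """Split a shared pool's bandwidth in proportion to demand (toy model).

    No QoS or partitioning: once total demand exceeds capacity, each class
    of client gets a share proportional to what it asks for.
    """
    total = render_demand_gbps + artist_demand_gbps
    if total <= capacity_gbps:
        return render_demand_gbps, artist_demand_gbps
    scale = capacity_gbps / total
    return render_demand_gbps * scale, artist_demand_gbps * scale


# Hypothetical figures, for illustration only.
CAPACITY_GBPS = 40.0       # aggregate bandwidth of the shared filer
ARTIST_DEMAND_GBPS = 8.0   # what the artists' workstations need
PER_CORE_MBPS = 20.0       # assumed average read rate per render core

for cores in (1_000, 5_000, 15_000):
    render_demand = cores * PER_CORE_MBPS / 1000  # Mbit/s -> Gbit/s
    render, artists = allocate(CAPACITY_GBPS, render_demand, ARTIST_DEMAND_GBPS)
    print(f"{cores:>6} cores: render {render:5.1f} Gbit/s, "
          f"artists {artists:4.1f} of {ARTIST_DEMAND_GBPS} Gbit/s")
```

With these made-up inputs the artists get everything they need at 1,000 cores but only about one eighth of it at 15,000 cores, which is the squeeze the rendering load puts on everyone else.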

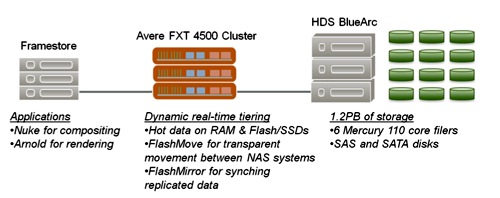

The storage resource needed partitioning so that the artists could carry on working while the rendering went ahead, ideally without being held up by the storage. Framestore designed itself a central file pool based on 1.2PB of a 6-node clustered HDS (BlueArc) filer system using SAS and SATA drives, front-ended by six Mercury 110 heads with a 10GbE network and a low-latency Arista core switch. But the render cores needed even more storage oomph, and that would have to come from adding another layer of storage between the HDS filer and the render cores.

Framestore CTO Steve MacPherson

MacPherson looked at solid state storage, flash arrays and the idea of running CacheFS software on storage closer to the render cores. In the event, he settled on a cluster of three multi-tiered Avere 4500 Edge Filers, each node with 114GB of DRAM and 3TB of flash.
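The cache tier is tiny next to the pool it fronts. A quick check using the per-node figures above, assuming decimal units (1PB = 1,000TB) and that all three nodes contribute equally:

```python
NODES = 3
DRAM_PER_NODE_GB = 114
FLASH_PER_NODE_TB = 3
BACKEND_PB = 1.2

dram_total_gb = NODES * DRAM_PER_NODE_GB     # DRAM across the cluster
flash_total_tb = NODES * FLASH_PER_NODE_TB   # flash across the cluster
backend_tb = BACKEND_PB * 1000               # decimal units: 1 PB = 1000 TB

flash_fraction = flash_total_tb / backend_tb
print(f"Cache tier: {dram_total_gb} GB DRAM + {flash_total_tb} TB flash")
print(f"Flash is {flash_fraction:.2%} of the {BACKEND_PB} PB back end")
```

That comes to 342GB of DRAM and 9TB of flash, under 1 per cent of the back end, so the arrangement only pays off if the data being actively rendered at any moment is a small slice of the whole pool.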

These sit in the data path between the render cores and the big HDS filer cluster. MacPherson said this setup had an "ability to deliver the type of metrics, analysis and identification of hot files essential to production efficiency."
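The idea of identifying hot files from the access stream can be illustrated with a small sketch. It is hypothetical throughout: the class name, window size and file paths are invented, and it does not describe how Avere's software works internally. It counts reads per file over a sliding window of recent accesses and reports the busiest files.

```python
from __future__ import annotations

from collections import Counter, deque


class HotFileTracker:
    """Count reads per file over the most recent `window` accesses (hypothetical sketch)."""

    def __init__(self, window: int) -> None:
        self.window = window
        self.recent: deque[str] = deque()   # access history, oldest first
        self.counts: Counter[str] = Counter()

    def record(self, path: str) -> None:
        self.recent.append(path)
        self.counts[path] += 1
        # Drop the oldest access once the window is full.
        if len(self.recent) > self.window:
            old = self.recent.popleft()
            self.counts[old] -= 1
            if self.counts[old] == 0:
                del self.counts[old]

    def hottest(self, n: int) -> list[tuple[str, int]]:
        """Return the n most-read files in the current window."""
        return self.counts.most_common(n)


# Hypothetical access stream from render nodes.
tracker = HotFileTracker(window=5)
for p in ["shot042/debris.vdb", "shot042/debris.vdb", "tex/iss_panel.exr",
          "shot042/debris.vdb", "tex/earth.exr"]:
    tracker.record(p)
print(tracker.hottest(2))
```

Because old accesses fall out of the window, a file that stops being read drops down the list on its own, which matters when the hot set shifts from shot to shot.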

Once Framestore had established the correct configuration, "we were able to just step back and let it do its thing."

Framestore's Gravity IT setup.

It was simpler for Framestore to have Avere caching accelerators keep hot, active data in their multi-layered caches than to go for all-flash arrays, which would need some kind of data selection and movement software to put the hot data on them, or to pursue the CacheFS idea.

This is, El Reg supposes, a perfect example of a niche big data app, in the simple sense that the data was big and it had to be chewed up and digested quickly. It's a testament to the importance of software that merely sticking the hot files in a flash array doesn't work. The hot files can change during the rendering process, and the storage accelerator software you use needs to cope with that.
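A toy comparison makes the point about shifting hot files. It uses a generic least-recently-used (LRU) cache, not Avere's actual caching algorithm, and an invented access trace with hypothetical file names: a tier whose contents are chosen once at the start of the job loses out as soon as the working set moves on, while a cache that admits and evicts on every read follows it.

```python
from __future__ import annotations

from collections import OrderedDict


class LRUCache:
    """Minimal least-recently-used cache of file names (counts hits and misses only)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.entries: OrderedDict[str, None] = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, path: str) -> None:
        if path in self.entries:
            self.entries.move_to_end(path)         # mark as most recently used
            self.hits += 1
        else:
            self.misses += 1
            self.entries[path] = None              # stand-in for a fetch from the back-end filer
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)   # evict the least recently used file


def static_hits(pinned: set[str], trace: list[str]) -> int:
    """Hits for a tier whose contents were chosen once and never changed."""
    return sum(path in pinned for path in trace)


# Hypothetical trace: the working set shifts from one shot's files to another's.
phase1 = [f"shotA/frag{i}" for i in range(4)] * 10
phase2 = [f"shotB/frag{i}" for i in range(4)] * 10
trace = phase1 + phase2

lru = LRUCache(capacity=4)
for path in trace:
    lru.read(path)

pinned = set(phase1[:4])   # "hot files" picked by looking at the start of the job
print("LRU hits:", lru.hits, "of", len(trace))
print("Static hits:", static_hits(pinned, trace), "of", len(trace))
```

With this trace the LRU cache misses only on the first read of each file in each phase, while the statically filled tier serves nothing once the second shot's files become hot.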

Naturally you could simply have a humungous all-flash filer - but 1.2PB of that, while delivering the data access bandwidth needed, would have cost (roughly) gazillions of dollars. So MacPherson ended up turbo-charging his turbo-charged filer and we all get to enjoy perfectly rendered International Space Station fragments blasting across the screen while Gorgeous George and Sexy Sandra gyrate between the flying bits. ®