
Linux in 2013: 'Freakishly awesome' – and who needs a fork?

Features, performance, security, stability: pick, er, four

LCS2013 If there was a theme for Day One of the Linux Foundation's seventh annual Linux Collaboration Summit, taking place this week in San Francisco, it was that the Linux community has moved way, way past wondering whether the open source OS will be successful and competitive.

"Today I wanted to talk about the state of Linux," Jim Zemlin, executive director of the Linux Foundation, began his opening keynote on Monday. "I'm just going to save everybody 30 minutes. The state of Linux is freakishly awesome."

Zemlin said that each day some 10,519 lines of code are added to the Linux kernel, while another 6,782 lines are removed from it. All told, the kernel averages around 7.38 changes per hour – a phenomenal rate for any code base.
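Zemlin's daily figures imply a net growth rate that is worth spelling out. The back-of-envelope Python below subtracts removals from additions and scales the hourly change rate up to a full day. It assumes both figures are simple daily and hourly averages, which the keynote did not spell out.

```python
# Back-of-envelope arithmetic on the figures Zemlin quoted.
# Assumption: both figures are simple averages over his sample period.
LINES_ADDED_PER_DAY = 10_519
LINES_REMOVED_PER_DAY = 6_782
CHANGES_PER_HOUR = 7.38


def net_lines_per_day(added: int, removed: int) -> int:
    """Net kernel growth in lines per day: additions minus removals."""
    return added - removed


def changes_per_day(per_hour: float) -> float:
    """Scale an hourly change rate up to a 24-hour day."""
    return per_hour * 24


if __name__ == "__main__":
    net = net_lines_per_day(LINES_ADDED_PER_DAY, LINES_REMOVED_PER_DAY)
    print(f"Net growth: {net:,} lines per day")
    print(f"Changes: {changes_per_day(CHANGES_PER_HOUR):.1f} per day")
```

That works out to a net gain of 3,737 lines and roughly 177 changes a day.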

Zemlin went on to liken Linux to a multimillion-dollar R&D project, on which over 400 companies collaborate – some of which, at the same time, are fierce competitors.

"This incredible platform is now more than just an operating system. Linux is really now becoming a fundamental part of society – one of the greatest shared technology resources known to man," Zemlin said.

He added, "I mean, it runs all of our stock markets, most of our air-traffic control systems, internet, phones, you name it ... most of the world's telecommunications systems ... this is really now beyond a movement and an operating system, this is now this real, shared, societal, important piece of work."

Bigger and better, and delivered faster

In a separate keynote session later on Monday, kernel contributor and LWN.net editor Jonathan Corbet noted that, successful as Linux has been to date, the pace of kernel development is actually still accelerating.

Kernel 3.3 shipped on 18 March, 2012, and in the 13 months since, 3,172 developers have contributed some 68,000 change sets into the mainline kernel. The kernel is now 1.53 million lines bigger than it was a year ago.
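Corbet's figures can be turned into an hourly rate for comparison with Zemlin's. The sketch below counts calendar days with Python's standard `datetime` module and assumes the 13-month window closes on Monday 15 April 2013, the summit's opening day. That end date is an assumption; the article gives only "13 months".

```python
from datetime import date

# Kernel 3.3 release date, as given in the article.
KERNEL_3_3_RELEASE = date(2012, 3, 18)
# Assumption: the 13-month window closes on the summit's opening day.
ASSUMED_WINDOW_END = date(2013, 4, 15)
CHANGE_SETS = 68_000
DEVELOPERS = 3_172


def window_days(start: date, end: date) -> int:
    """Whole calendar days between two dates."""
    return (end - start).days


def change_sets_per_hour(change_sets: int, days: int) -> float:
    """Average merge rate over the window, in change sets per hour."""
    return change_sets / (days * 24)


if __name__ == "__main__":
    days = window_days(KERNEL_3_3_RELEASE, ASSUMED_WINDOW_END)
    print(f"Window: {days} days")
    print(f"Rate: {change_sets_per_hour(CHANGE_SETS, days):.2f} change sets per hour")
    print(f"Average: {CHANGE_SETS / DEVELOPERS:.1f} change sets per developer")
```

Under that assumption the window is 393 days, giving roughly 7.2 change sets an hour, in the same neighbourhood as Zemlin's 7.38, and about 21 change sets per contributing developer. That last figure is a plain mean and says nothing about how contributions are distributed.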

Kernel 3.8 was the project's most active development cycle ever, with around 12,400 change sets merged for that one release alone. And the releases keep coming faster and faster. Just a few years ago, a new kernel shipped about every 80 days, while today a release cycle longer than 70 days is unusual. Kernel 3.9, which could ship as soon as this weekend, will have been in development for only around 63 days.

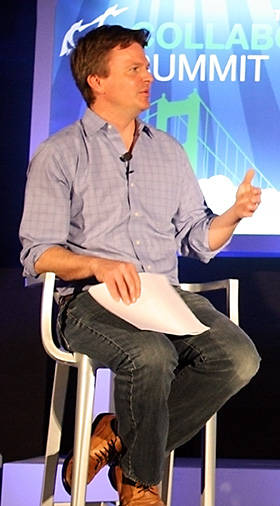

Jim Zemlin: "Linux is going swimmingly."

"Even though we're getting busier and more active, we've gotten the process so smoothly functioning at this point that we're able to get the releases out more frequently while we're at it," Corbet observed.

Contributions from individual volunteers continue to decline, however, with the share of changes submitted by unaffiliated individuals now at 11.8 per cent, down from nearly 20 per cent some years ago.

But Corbet chose to spin even this figure in a positive light. The decline in unaffiliated submitters, he said, was probably due to the nature of the job market today – namely, that any developers who have the drive and determination to get code accepted into the mainline kernel will probably find jobs in short order.

Furthermore, he said, while it's true that corporate contributions make up the majority of kernel changes today and have done so for some time, no single company has contributed more than 10 per cent of the code for any given kernel.

Although Linux kernel development is going well overall – or "swimmingly," as Zemlin put it – it's not without its challenges. New divisions and new debates have emerged, owing to the changing nature of the Linux user base and the wide variety of audiences the kernel now serves. According to Corbet, some of these battles reach the very deepest levels of the kernel code.

"We used to have a lot of fights about CPU scheduling some years ago, when we were trying to figure out how you pick which process to run next," Corbet explained. "We pretty well solved that problem. You can always do better, but we don't argue about that anymore ... Instead of which process you run, it's more a question of where do you run it."
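Corbet's distinction between choosing which task runs and choosing where it runs can be illustrated with a toy model. In the Python sketch below, each CPU has its own run queue that always picks the task with the smallest virtual runtime, loosely modelled on the idea behind the kernel's Completely Fair Scheduler. The `pick_cpu` placement rule (the queue with the fewest tasks wins) is a hypothetical stand-in, not what the kernel actually does, and every class and function name here is invented for illustration.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Task:
    # Ordering uses vruntime only: the smaller it is, the sooner the task runs.
    vruntime: float
    name: str = field(compare=False)


class RunQueue:
    """Toy per-CPU run queue kept as a min-heap on virtual runtime."""

    def __init__(self) -> None:
        self._heap: list[Task] = []

    def push(self, task: Task) -> None:
        heapq.heappush(self._heap, task)

    def pick_next(self) -> Task | None:
        # "Which process?": pop the task with the smallest virtual runtime.
        return heapq.heappop(self._heap) if self._heap else None

    def __len__(self) -> int:
        return len(self._heap)


def pick_cpu(runqueues: list[RunQueue]) -> int:
    # "Where?": hypothetical placement rule, the CPU with the fewest queued tasks.
    # Ties go to the lowest-numbered CPU because min() returns the first minimum.
    return min(range(len(runqueues)), key=lambda cpu: len(runqueues[cpu]))


if __name__ == "__main__":
    cpus = [RunQueue(), RunQueue()]
    for name, vruntime in [("editor", 5.0), ("compiler", 2.0), ("daemon", 9.0)]:
        cpus[pick_cpu(cpus)].push(Task(vruntime, name))
    for cpu, rq in enumerate(cpus):
        task = rq.pick_next()
        print(f"CPU {cpu} runs {task.name if task else 'nothing'}")
```

With three tasks and two CPUs, the placement rule sends editor and daemon to CPU 0 and compiler to CPU 1; each queue then picks its own lowest-vruntime task, so CPU 0 runs editor and CPU 1 runs compiler. Swapping in a different placement rule changes only the "where" half, which is the question Corbet says now dominates.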