Oak Ridge lab: Behold, I Am TITAN, hear my 20 petaflop ROAR

One giant leap for a GPU, one small step for exascale

The push to exascale and out to zettascale

"Titan is validation that accelerated computing is here," says Nichols, who is not as excited about hardware as he is about doing science with the hardware. The important thing is that Oak Ridge started working with Cray and Nvidia on porting applications from Jaguar's parallel x86 architecture to the hybrid CPU-GPU architecture 18 months ago. "We want to be able to do science on day one."

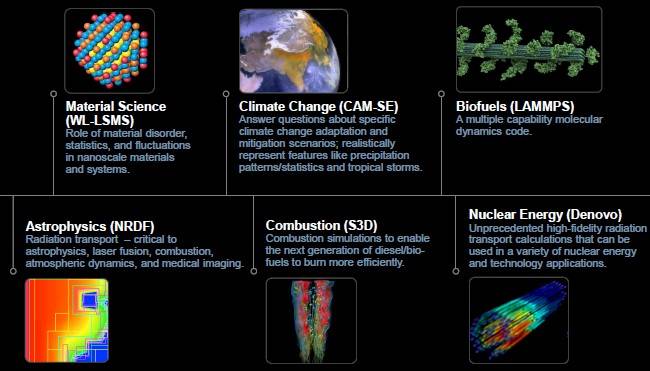

A number of different codes have already been ported to run on Titan, as you can see below:

Key workloads on the Titan supercomputer

These run the gamut, and they all have one thing in common. Researchers are already planning how they might use a machine with around ten to fifteen times the performance of Titan. This future box, which is in the planning stages right now for delivery in around 2016, was known as OLCF-4 in the Oak Ridge planning documents we saw a year ago and was based on the future "Cascade" machines with the "Aries" interconnect from Cray.

That was theory, not contract, and Nichols says that Oak Ridge is talking to Cray, Intel, IBM, Appro International, and others for this future procurement. Nichols tells El Reg that something on the order of "200 to 300 petaflops was a good stretch goal" for the performance of this machine.

The problem is not adding machines and cabinets to a cluster to build a bigger, badder box that pushes up to exascale. It is that Nichols expects an exascale machine in 2019 or 2020 to cost somewhere around $200m to $250m using extrapolated current technology. Oak Ridge gets about $100m a year to fund its computing lab, with roughly a third for systems; a third for the electricity to power and cool them; and a third for staff salaries. So an exascale machine in 2020 or so currently looks more expensive than what Oak Ridge has been paying for each successive computer. But an exascale-class machine is needed to fully simulate an internal combustion engine (something that is near and dear to the US Department of Energy, which funds Oak Ridge) or to do a whole earth weather simulation at a 1km resolution, just to name two applications.
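To put the budget gap in rough numbers, here is a back-of-the-envelope sketch in Python using only the figures quoted above. The function name is ours, and the even one-third split is taken at face value from Nichols' "roughly a third", so treat the result as indicative rather than an Oak Ridge accounting.

```python
# Figures quoted in the article, in US dollars.
ANNUAL_LAB_BUDGET = 100e6               # "about $100m a year"
SYSTEMS_SHARE = 1 / 3                   # "roughly a third for systems" (assumed exact)
EXASCALE_COST_RANGE = (200e6, 250e6)    # Nichols' estimate for 2019 or 2020


def years_of_systems_budget(machine_cost,
                            annual_budget=ANNUAL_LAB_BUDGET,
                            share=SYSTEMS_SHARE):
    """Years of systems spending that a machine of the given cost would consume."""
    return machine_cost / (annual_budget * share)


low, high = (years_of_systems_budget(cost) for cost in EXASCALE_COST_RANGE)
print(f"An exascale box would eat {low:.1f} to {high:.1f} years of systems budget")
```

On these assumptions, the machine would absorb somewhere between six and seven and a half years of the lab's hardware money.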

Steve Scott, who left the CTO job at Cray in August 2011 to become CTO for the Tesla line at Nvidia, acknowledges the challenges in getting to exascale, but is optimistic that we can reach that level of performance and push on. "Five years from now, we will be talking about zettascale. I am pretty bullish that we can get there," says Scott.

Everybody wants that, but there are considerable engineering challenges to get an exascale system into a 20 megawatt power envelope, which most people say is the practical upper limit for an exascale machine. It would be nice if it didn't cost so much, too.
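The 20 megawatt ceiling translates directly into an efficiency target. The sketch below does the division, assuming "exascale" means exactly 10^18 floating point operations per second and that the whole envelope is available to the machine; both are simplifications of ours, not figures from Oak Ridge.

```python
EXAFLOPS = 1e18                 # assumed definition of an exascale machine, flops
POWER_ENVELOPE_WATTS = 20e6     # the 20 megawatt envelope cited above


def required_flops_per_watt(flops, watts):
    """Energy efficiency needed to deliver `flops` within `watts`."""
    return flops / watts


gflops_per_watt = required_flops_per_watt(EXAFLOPS, POWER_ENVELOPE_WATTS) / 1e9
print(f"Required efficiency: {gflops_per_watt:.0f} gigaflops per watt")
```

That works out to 50 gigaflops per watt across the entire system, interconnect and storage included.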

Parallel program for inciting researchers to program in parallel

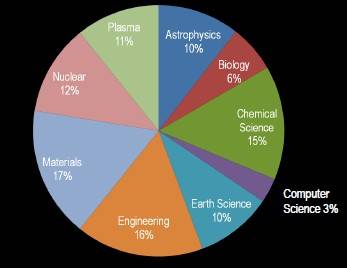

The US Department of Energy shares the supercomputers it builds at Oak Ridge and at Argonne National Laboratory. The supers have their jobs allocated to them through the Innovative and Novel Computational Impact on Theory and Experiment (Incite) program, which made its first awards to academia in 2004 to let them run their jobs.

Under the Incite rules, you can't get time on the system unless you can demonstrate that your job will scale across at least 25 per cent of the system. This stands in stark contrast to the machines funded by the National Science Foundation, which have thousands of users getting much smaller time (and often core) slices of the boxes.

How the DOE allocates computing resources at Oak Ridge and Argonne

In addition to Titan at Oak Ridge, the Incite program slices up time on two IBM machines: the "Mira" 10 petaflops BlueGene/Q machine and the "Intrepid" 557 teraflops BlueGene/P box.

As part of the rollout of Titan, the DOE announced that in 2013 it will allocate 4.7 billion core-hours to 61 science and engineering projects through Incite. About 1.84 billion core-hours will be allocated on Titan, and 2.83 billion core-hours will be given away on the Mira and Intrepid machines. The average award on Titan is 58 million core-hours, which works out to running a job across the entire machine for eight days. (Not that it is necessarily allocated that way.) The average award on Mira is 78 million core-hours, according to the DOE.
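The eight-day figure can be checked with a few lines of Python. The core count below is an assumption that does not appear in this section: it takes Titan's 18,688 nodes at 16 Opteron cores apiece, for 299,008 CPU cores, and assumes a core-hour is charged per CPU core with the GPUs not counted.

```python
# Assumption, not stated in this section: 18,688 nodes x 16 Opteron cores each.
TITAN_CPU_CORES = 18_688 * 16

# Figures from the DOE announcement quoted above.
AVERAGE_TITAN_AWARD_CORE_HOURS = 58e6
TITAN_CORE_HOURS = 1.84e9
MIRA_INTREPID_CORE_HOURS = 2.83e9


def full_machine_days(core_hours, cores):
    """Days needed to burn `core_hours` with every one of `cores` busy."""
    return core_hours / cores / 24


days = full_machine_days(AVERAGE_TITAN_AWARD_CORE_HOURS, TITAN_CPU_CORES)
total_billions = (TITAN_CORE_HOURS + MIRA_INTREPID_CORE_HOURS) / 1e9

print(f"Average Titan award: about {days:.1f} days of the whole machine")
print(f"Total across all three systems: {total_billions:.2f} billion core-hours")
```

Under that assumption, the average award comes to just over eight days, and the two allocations sum to 4.67 billion core-hours, which rounds to the 4.7 billion headline number.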

Roughly speaking, according to Nichols, about half of the capacity managed by Incite goes to DOE research and half to outside academics. There are three times as many applicants to the program as there are awards.

And there's a lot more capacity to play with. Back in 2004, the original Incite awards granted 5 million core-hours, and the capacity available has since grown by three orders of magnitude. To date, over 10 billion core-hours of computing have been run through Incite. You can read all about the 2013 Incite awards on these three supercomputers here. ®