
Scality opens Ring for close scrutiny

First object storage to be openly performance tested

Ring scaling

Using six storage devices per server node and logically partitioning each into two devices, ESG scaled the Ring from three server nodes and 36 logical nodes to five server nodes and 60 logical nodes. It found that performance scaled linearly, from 41,573 get objects/sec to 60,410, and projected 385,000 get objects/sec for a 24-server node ring with 288 logical nodes.
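The logical node counts follow from simple multiplication: servers × SSDs per server × partitions per SSD. Here is a minimal Python sketch of that arithmetic, plus the average get rate per server at the two measured points. The function and variable names are ours, and ESG's 24-node throughput projection is its own and is not modelled here.

```python
SSDS_PER_SERVER = 6      # from the ESG test configuration
PARTITIONS_PER_SSD = 2   # each SSD split into two logical devices

def logical_nodes(servers: int) -> int:
    """Logical (software) nodes in a Ring built from the given number of servers."""
    return servers * SSDS_PER_SERVER * PARTITIONS_PER_SSD

# Get objects/sec measured by ESG, keyed by server node count
measured = {3: 41_573, 5: 60_410}

for servers, gets in measured.items():
    per_server = gets / servers
    print(f"{servers} servers, {logical_nodes(servers)} logical nodes: "
          f"{gets:,} gets/sec, {per_server:,.0f} per server")

print(logical_nodes(24))  # 288, matching the projected ring size
```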

ESG said: "As each server node is added to a Scality Ring, the overall performance of the system is increased using the CPU, disk, bus, and networking resources of the new server. An object-based Scality Ring that leverages the latest Intel server CPU and SSD technologies can be used to create an object-based storage solution with performance that exceeds the capabilities of a traditional block-based disk array."

ESG also found a five-server node ring delivered 211,424 MP3 audio files simultaneously, each streamed at 128Kbit/sec. That equates to an aggregate output of 26.43Gbit/sec (roughly 3.3GB/sec), which ESG said "rivals that of high-performance computing systems".

For comparison, our records show a DataDirect Networks SFA10K-X delivers 17GB/sec per rack of 4U 60-drive enclosures, and a Panasas PAS 2 array does 15GB/sec per rack. Both are byte rates, so measured like for like the Ring's roughly 3.3GB/sec is well short of either rack, although it comes from just five servers.

All these numbers look good. When we looked at the test configuration, though, we were intrigued.

Test configuration

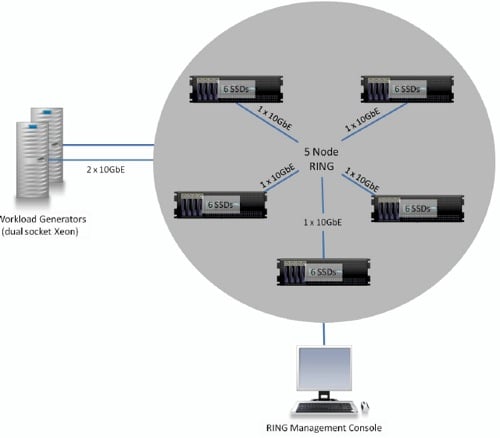

ESG's lab testing was performed with three to five Scality RING server nodes connected to each other via a single 10GbE cable. They were each configured with 24 Intel Xeon CPU cores, 24GB of RAM and six 600GB Intel SSD drives. The software divided each SSD into two partitions, which created twelve I/O demons (software nodes) per server node.

ESG's Scality Ring configuration

Object storage systems in general, Scality's Ring included, are built around disk drives. In this ESG test, however, each Ring server node was a flash-based object storage node rather than a disk-based one, and ESG still measured its performance against disk arrays, calling it "a very good result compared to the I/O per second performance of industry leading block-based dual controller disk arrays."

Why did Scality and ESG choose to compare an SSD-based Ring with HDD-based disk arrays?

Scality's CEO, Jérôme Lecat, said: "We are convinced that, contrary to standard opinion, our object-based storage is indeed faster than SAN for parallel load, but how could we prove it?

"We studied how others (SAN, NAS, scale-out NAS) reported performance numbers, and we found that, in the way they have designed their tests, most of the IOPS performance comes from RAM/cache/SSD, not from access to HDD. All storage systems have some controller memory and some mechanism for caching and/or tiering. With some optimisation, it is easy to make a test mostly hit that portion of the system rather than the disks. Isilon, a scale-out NAS which is comparable to us in many ways, took the same approach when they set their famous IOPS world record with ESG a year ago. You can read on page 6 of this report that [the] average response time was less than 3ms, [which] is too short for reading data from a 10,000rpm disk."
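Lecat's point about the 3ms figure rests on disk mechanics: on average a read must wait half a revolution for the target sector to pass under the head, and at 10,000rpm that alone is 3ms, before any seek time is added. A small Python sketch of the calculation (the function name is ours):

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: half of one revolution, in milliseconds."""
    ms_per_revolution = 60_000 / rpm  # 60,000 ms in a minute
    return ms_per_revolution / 2

for rpm in (7_200, 10_000, 15_000):
    print(f"{rpm:>6} rpm: {avg_rotational_latency_ms(rpm):.2f} ms")
```

Because seek time comes on top of this, an average response time under 3ms suggests most requests in such a test were answered from cache or SSD rather than from the 10,000rpm disks themselves.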

He said the industry at large assumes that "our software, with its totally scalable distributed meta-data architecture, must introduce a significant delay." He added: "Once people understand our architecture, they do not challenge us on the power of parallelism, but on the latency cost for atomic operations. We decided that testing on SSD was the best way to measure the delay inherent to our software."

Latency

Lecat explained: "The ESG Lab test successfully demonstrates that our system can write or read objects to/from SSD in less than 7ms, and that this number is very stable with load and with the addition of nodes. From this test, and from our production experience, we can extrapolate that a two-tier architecture only built with HDD (no SSD) would do an average 40ms for reads with 7,200rpm disks, and only 35ms with 10,000rpm disks, similar to those used by Isilon for their own ESG tests."

Lecat said DataDirect's Web Object Scaler, DDN WOS2, had a 40ms latency on its HDD operations. He thinks that, by using SSDs, Scality could lower the latency significantly. The analysis also showed that most of the latency came from the Ethernet network rather than from Scality's software on the server nodes.

Scality could take the SSD latency down further, possibly to 3ms, by using InfiniBand node-to-node links rather than Ethernet, but Lecat said: "The reality is that, for most file applications, 40ms of latency is totally acceptable, and for those who need a lower latency, putting in some SSD is not a concern."

Yes, SSD would boost real-life object storage performance, but as far as Lecat is concerned it isn't generally needed: "We agree that an all-SSD storage would not make sense at petabyte level ... Typically, in a petabyte scale environment, having just 5 per cent of the capacity in SSD greatly improves the performance, at a very reasonable cost. This being said, we only recommend SSD when the application requires response time faster than 40ms."
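Why a small SSD tier can help is easiest to see with a simple weighted average: if some fraction of reads is served from SSD and the rest from HDD, mean latency falls in proportion. The sketch below plugs in the roughly 7ms SSD and 40ms HDD figures quoted above; the hit rates are hypothetical. Note that the share of reads served from SSD is not the same as the share of capacity (5 per cent) in SSD; it depends on how concentrated a workload's hot data is, which this model does not capture.

```python
SSD_LATENCY_MS = 7.0   # ESG test: SSD reads/writes completed in under 7ms
HDD_LATENCY_MS = 40.0  # Lecat's extrapolated average for 7,200rpm HDD reads

def mean_read_latency_ms(ssd_hit_rate: float,
                         ssd_ms: float = SSD_LATENCY_MS,
                         hdd_ms: float = HDD_LATENCY_MS) -> float:
    """Expected read latency when a fraction of reads is served from SSD."""
    if not 0.0 <= ssd_hit_rate <= 1.0:
        raise ValueError("ssd_hit_rate must be between 0 and 1")
    return ssd_hit_rate * ssd_ms + (1.0 - ssd_hit_rate) * hdd_ms

# Hypothetical SSD hit rates, for illustration only
for hit_rate in (0.0, 0.5, 0.8, 0.95):
    print(f"{hit_rate:.0%} of reads from SSD: "
          f"{mean_read_latency_ms(hit_rate):.1f} ms")
```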