
IBM bit-twiddlers want point releases for big iron

Software gurus to 'refactor' Big Blue's systems

The bit-twiddlers took over IBM's server business a year and a half ago, and it appears that they are starting to think about systems as if they were code, as if they could do dot releases in a nearly steady stream and keep their revenues from spiking up and crashing down all the time.

It has taken a long time for IBM to build a software business that could dwarf its server hardware business, and in the past 20 years as that was happening, there has been a massive amount of consolidation across IBM's server lines. The AS/400 and RS/6000 hardware platforms started merging 15 years ago and IBM took all the best parts of the AS/400 platform, both hardware and software, and used them, as well as the fat profits from the AS/400 business, to prop up and then extend its AIX server business. The RS/6000 people took over the AS/400 biz a long time ago. Then the mainframe and Power lines were merged a few years back and the System z and Power chips now share many common circuits (even though they still have different instruction sets). But then a funny thing happened. IBM's software people took over all of the systems businesses.

In July 2010, IBM's Software Group took over Systems and Technology Group, putting Steve Mills in charge of the entire systems enchilada, from chips to bits. Tom Rosamilia, who was tapped to be general manager of the converged Power Systems and System z divisions in August 2010, ran the mainframe line for a short stint before then and spent years running IBM's WebSphere division within Software Group and running various database and mainframe systems software development efforts before that.

Rosamilia started out as a System/390 programmer working on MVS code for IBM back in 1983 and, importantly, was in charge of all software development on IBM's mainframes in 1998 when the company decided to put Linux on all of its boxes and started to earnestly converge its hardware lines as much as possible.

Ahead of that systems transition in July 2010, which saw the software folks take over the hardware divisions at Big Blue, it was little noted that Ambuj Goyal, the general manager of IBM's Information Management division within Software Group, which is the part of IBM that creates database, data warehousing, and analytics software, was named general manager of development and manufacturing for the Systems and Technology Group. (IBM still talks as if these two groups are separate when it reports its financials, but that could very well change soon since it is getting harder to keep the revenue streams separate.)

Goyal ran IBM's database business from 2005 through 2009, and before that was in charge of the transformation of the Notes/Domino stack to include portals and productivity apps. He's been chief technology officer for application servers and messaging software in Software Group and at one point in the 1990s had 1,500 researchers across seven IBM labs reporting to him trying to cook up future technologies. Goyal led the creation of WebSphere middleware, the RS/6000 SP PowerParallel supercomputers, and the software that ran on those machines and turned one of them into the Deep Blue chess master.

In other words, you would have to look very hard to find a better person within IBM to completely rethink the systems business. And that apparently is the task that Goyal has been handed so IBM can meet its 2015 revenue and profit targets for Systems and Technology Group. He has 23,000 engineers and programmers at his disposal, working in 37 labs in 17 countries, as well as the Microelectronics Division and IBM's chip fabs, to rethink what systems should look like.

A presentation that Goyal gave last year, which I obtained – presumably given to IBMers working in Systems and Technology Group – didn't lay out specific near-term product plans but rather gave a strategic overview of how Goyal believes IBM should build systems. During the presentation, he used a very specific term derived from the software business – "refactoring" – to describe how it needs to be done for IBM to get innovation into the field faster.

I know what factoring means in mathematics, but not being a software guy, the term refactoring is not something I had encountered before. It refers to making lots of tiny changes to a set of source code that don't modify its external function, but improve how that function is delivered. To refactor, you have to break down code into functional units and iteratively improve them as you can. This sounds dirt simple, and was done by many forward-thinking programmers, but refactoring wasn't really even discussed academically until the early 1990s. The idea is to make many small tweaks that don't disrupt the code, do them quickly and do them often, instead of trying to do a big-bang version upgrade with a feature dump and all of the woes that creates for development and test. The canonical book on refactoring, called Refactoring: Improving the Design of Existing Code, is written by Martin Fowler, Kent Beck, John Brant, William Opdyke, and Don Roberts.
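
For those who, like me, learn better from an example, here is a minimal sketch of what a refactoring looks like in Python. Nothing in it comes from Goyal's presentation or from IBM; the invoice calculation and every function name are made up purely for illustration. The "before" and "after" versions return the same answer for the same inputs, and only the internal structure changes.

```python
# Before: one function does everything inline.
def invoice_total_before(items, tax_rate):
    total = 0.0
    for price, quantity in items:
        total += price * quantity
    total = total + total * tax_rate
    return round(total, 2)


# After: the same external behavior, broken into small functional units
# that can each be tested and improved on their own.
def subtotal(items):
    """Sum price * quantity over all line items."""
    return sum(price * quantity for price, quantity in items)


def add_tax(amount, tax_rate):
    """Apply a flat tax rate to an amount."""
    return amount + amount * tax_rate


def invoice_total_after(items, tax_rate):
    return round(add_tax(subtotal(items), tax_rate), 2)


# Hypothetical sample data: (price, quantity) pairs.
sample_items = [(19.99, 2), (5.00, 3)]

# The point of a refactoring: callers cannot tell the difference.
same_result = (
    invoice_total_before(sample_items, 0.08)
    == invoice_total_after(sample_items, 0.08)
)
```

The check at the end is the important part. A refactoring is only safe if a test like that keeps passing after every small change, which is what lets the changes be made quickly and often.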

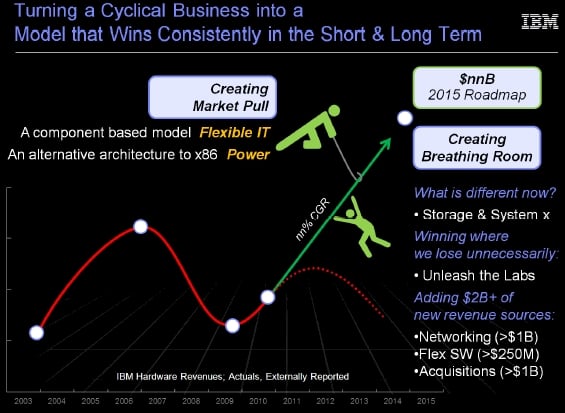

The problem that IBM is trying to address in its system business is one that plagues all hardware manufacturers: The business is cyclical. It takes two or three years to bring out a new processor and build, test, and deliver the components that wrap around that processor to turn it into a complete system. All of this boom-and-bust cycling is mostly outside of IBM's control – because it doesn't control the X86 processor cycle or the development of many peripherals and specifications that go into its systems. And because system designs are largely monolithic, IBM and its competitors sometimes fall behind.

For instance, it was only last fall that selected models of the Power7 machines got PCI-Express 2.0 peripheral slots. And here we are at the dawning of the PCI-Express 3.0 era this quarter, when Intel launches the Xeon E5 processors – which sport on-chip PCI-Express 3.0 controllers.

PCI-Express 3.0 offers twice the bandwidth of its predecessor, and in an increasingly virtualized and networked world, this really does matter. A lot of commercial workloads are I/O bound, not CPU or memory bound, so being able to add 10 Gigabit Ethernet, 40 Gigabit Ethernet, 40 Gigabit InfiniBand, and 56 Gigabit InfiniBand cards to servers and then slice them up into virtual network interfaces that can handle the I/O load is important. You could credibly argue that today's workloads require PCI-Express 4.0, and that isn't even off the drawing boards yet.
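
To put rough numbers on that, here is a back-of-the-envelope sketch in Python. The signaling rates and line encodings are the published PCI-Express figures (5 GT/sec with 8b/10b encoding for 2.0, 8 GT/sec with 128b/130b encoding for 3.0), not something from IBM's presentation. The function name and the choice of an x8 slot are my own assumptions, and the math ignores protocol overhead, so real-world throughput will be lower.

```python
def lane_gbit_per_sec(gigatransfers_per_sec, payload_bits, encoded_bits):
    """Usable bandwidth of one lane, in one direction, after line encoding."""
    return gigatransfers_per_sec * payload_bits / encoded_bits


# PCI-Express 2.0: 5 GT/sec, 8b/10b encoding (8 payload bits per 10 sent).
gen2_lane = lane_gbit_per_sec(5.0, 8, 10)      # 4.0 Gbit/sec

# PCI-Express 3.0: 8 GT/sec, 128b/130b encoding.
gen3_lane = lane_gbit_per_sec(8.0, 128, 130)   # about 7.88 Gbit/sec

# Assumption: the network card sits in an x8 slot.
LANES = 8
gen2_slot = gen2_lane * LANES                  # 32 Gbit/sec
gen3_slot = gen3_lane * LANES                  # about 63 Gbit/sec

# Nominal link rates of the network cards mentioned above, in Gbit/sec.
for card_rate in (10, 40, 56):
    print(
        f"{card_rate} Gbit/sec card: "
        f"fits in 2.0 x8 = {gen2_slot >= card_rate}, "
        f"fits in 3.0 x8 = {gen3_slot >= card_rate}"
    )
```

On those assumptions, a PCI-Express 2.0 x8 slot tops out below the nominal rate of a single 40 Gigabit port, while a 3.0 x8 slot has headroom for both the 40 Gigabit and 56 Gigabit cards. The per-lane improvement works out to a shade under the advertised doubling.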

Turning the IBM systems biz from cyclical to linear

IBM, Goyal told his staff, wants to turn that cyclical business into a more predictable and sustainable model, and that means refactoring hardware designs, and making systems more modular so they can be tweaked more frequently instead of being revamped every couple of years. (I talked about modular system board and server designs back in August 2008, which just goes to show you how obvious the idea is. That no one has really done it at the level of granularity that is needed just shows you how difficult this is to do at an electronics level.)

Refactoring is the same iterative approach IBM now employs for its semi-annual updates to both the AIX and IBM i (formerly OS/400) operating systems, where support for new hardware features is slipped into the OSes as a patch set instead of waiting for a full release later down the road. This is, to a certain extent, how commercial open source software distributors like Red Hat deliver updates to their software stacks, usually two or three times a year as well. The idea is to do updates without breaking application compatibility, and therefore without requiring customers to go through another application qualification process on the new hardware/software combo.

In IBM's case, refactoring means breaking storage array upgrade cycles free from server launches and getting storage software function into arrays separately from operating system release cycles so both sides of storage – hardware and software – can be iterated at their own pace. IBM has been doing this gradually over the past several years.

You'll notice one very important thing in that chart above. Power chips are pitched as the alternative to X86 processors. And, according to the notes embedded in Goyal's presentation, the company is planning on taking the architectural battle to the X86 chip and standing up Power as the only credible alternative. (Those members of the ARM collective who want the same chips used in smartphones and tablets to grow up and take over the PC and server businesses would no doubt argue that Power had its chance in embedded systems, PCs, and laptops and blew it.)

"We cannot let Intel roam freely in the marketplace without fighting the architecture battle," Goyal's presentation notes say. "And we are going to fight that battle with the Power architecture, and across the board with z, p, i, x. We will fight the system level battles, but we must fight the architectural battle as well."

This could get interesting, if IBM is really serious. A three-way fight between Power, X86, and ARM would inject a whole lot of excitement into the stodgy systems racket. ®