
Future of computing crystal-balled by top chip boffins

Bad news: It's going to be tough. Good news: You won't be replaced

Is IA headed for the boneyard?

Our next question was obvious: would changing applications mean changing the IA architecture? (Okay, "IA architecture" is an unnecessarily redundant and repetitive repetition, but you know what we mean...) Pawlowski firmly rejected that idea. "No. IA will still be there. We'll still be executing x86 instructions."

Which makes sense, seeing as how x86 processors have been decoding IA instructions into micro-operations – or µops – since the days of Intel's P6 architecture, which first appeared in the Pentium Pro.

"You see, that's the beauty of an architecture like the P6," Pawlowski said, "because the instruction comes into the front end, but what's put out the back end and executed on the machine itself is something completely different. But it still runs the program, even though the micro-instruction may not look anything like the macro-instruction, the IA instruction."

From architect Pawlowski's point of view, however, the days of microprocessor architects saying to process technologists "you build those tiny transistors and we'll figure out how to use them" are drawing to an end.

"The two groups, microarchitects and process-technology techs," he told us, "have mostly worked in their own worlds. As the process guys are coming up with a new process, they'll come and say, 'Okay, what kind of circuits do you need? What do you think you're going to look at?' And so we'll tell them, and they'll go off and they'll develop the device and give us device models, and we'll be able to build the part."

He also implied that process techs have saved the architects' hineys more than once. "I'm kind of embarrassed to say this," he said, "but the device guys have come through many times. If there was something that we needed, they were able through process to make whatever tweaks they needed to make to let the architecture continue the way it was."

Those last-minute saves may no longer be possible in the future. "Now, I think, the process is going to be more involved in defining what our architecture is going to look like as we go forward – I'm talking by the end of the decade. We're on a pretty good ramp where we are, but as I look how things are going to change by the end of the decade, there's going to be a more symbiotic relationship between the process engineers and the architects," Pawlowski said.

"The process guys and the architecture guys are going to be sitting together."

Your brain is safe – for now

All this increasing computational efficiency, decreasing power requirements, and increasing chip complexity raises one important question: what's all this computational power going to be used for?

Addressing that question at the 40th birthday party of the Intel 4004, Federico Faggin was quick to dismiss the idea – for the foreseeable future, at least – that computational machines would be able to mimic the power of the human brain.

"There is an area that is emerging which is called cognitive computing," he said, defining that area of study as being an effort to create computers that work in a fashion similar to the processes used by the human brain.

"Except there is a problem," Faggin said. "We do not know how the brain works. So it's very hard to copy something if you don't know what it is."

He dismissed research in this area. "There is a lot of research," he said, "and every ten years, people will say 'Oh, now we can do it'."

He also included himself among those researchers. "I was one who did the early neural networks in one of my companies, Synaptics," he said, "and I studied neuroscience for five years to figure out how the brain works, and I'll tell you: we are far, far away from understanding how the brain works."


A goal far beyond building a computing system that could mimic processes used by the human brain would be imbuing a computer with intelligence – and Faggin wasn't keen on that possibility, either.

"Can they ever achieve intelligence and the ability of the human brain?" he asked, referring to efforts by the research community. "'Ever' is a strong word, so I won't use the word 'ever', but certainly I would say that the human brain is so much more powerful, so much more powerful, than a computer."

Aside from mere synapses, neural networks, and other brain functions, Faggin said, there are processes that we simply haven't even begun to understand. "For example," he said, "let's talk about consciousness. We are conscious. We are aware of what we do. We are aware of experiences. Our experience is a lived thing. A computer is a zombie. A computer is basically an idiot savant."

He also reminded his audience that it is man who built the computer, and not the other way around. "[A computer's] intelligence is the intelligence of the programmer who programmed it. It is still not an independent, autonomous entity like we are," he said.

"The computer is a tool – and as such, is a wonderful tool, and we should learn to use it ever more effectively, efficiently. But it's not going to be a competition to our brain."

The purpose of the computer, said the man who helped bring computers into so many aspects of our lives, is to help us be "better human beings in terms of the things that matter to a human, which are creativity, loving, and living a life that is meaningful."

Bootnote

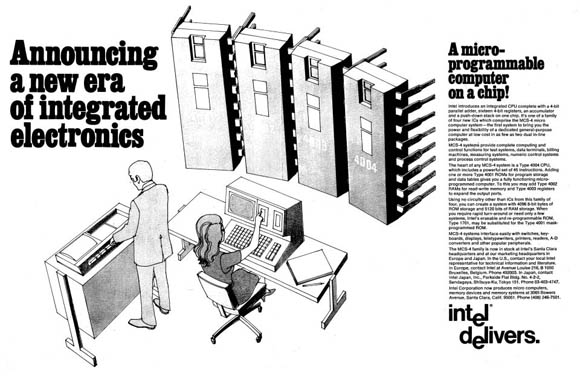

After we whipped together our "Happy 40th birthday, Intel 4004!" article earlier this month, we were handed a copy of the November 15, 1971 Electronics News advert that launched the Intel 4004. We though you might want to take a gander:

Great for 'data terminals, billing machines, measuring systems, and numerical control systems' (click to enlarge)

®