
SGI to put Intel's Xeon E5s in ICE X systems

Opteron 6200s put on ice

Getting double-stuffed

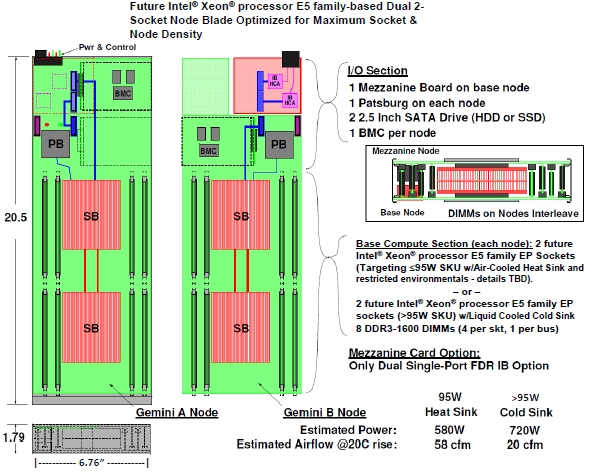

The second new blade server designed for the ICE X machines is called "Gemini" internally and the IP-115 in the SGI product catalog. As the name implies, the Gemini blade is actually a double blade, and they snap together, stacking the CPUs on top of each other with interlocking air-cooled or water-cooled heat sinks. The blades do not implement symmetric multiprocessing over those four sockets, but they do share power and InfiniBand mezzanine cards.

Each half of the Gemini blade has two Xeon E5 processors, but only four memory slots per socket instead of eight. The slots are spread out to help get rid of heat, and the memory on the two boards interleaves so the double-stacked blade is not too tall. Each blade has its own Patsburg chipset and BMC, of course. The base node on the bottom has room for two disk or flash drives, plus power and control for both blades. The top node is where the networking mezzanine card goes, and only the dual single-port card can be used. That gives you a single FDR InfiniBand link per two-socket server, which means you can only build single-rail InfiniBand interconnects.

The Gemini blade might be dense, but you always give up something when you cram a lot of electronics into the same space. In this case, you give up half the memory (only eight slots per node) and half the disks (only two drives shared by two blades), and you cannot use the 130 watt processors with air cooling. In fact, if you want to stick with air cooling only, the target is Xeon E5 chips rated at 95 watts or less; hotter parts need the water-cooled heat sink slapped onto the four processors. Kinyon says that the Gemini twin blade will burn about 580 watts tops with air cooling and 720 watts tops if you use 32°C (89.6°F) water with the water-cooled sink on Xeon E5s running at 95 watts. You can push the "cold sink" to around a kilowatt per Gemini twin blade with standard memory and the hotter 130 watt Xeon E5 processors.
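The cooling guidance above boils down to a simple threshold. Here is a minimal Python sketch that encodes it, assuming the 95 watt cutoff is a hard line; the function and constant names are hypothetical, not anything SGI ships, and "around a kilowatt" is rounded to 1,000 watts. The peak wattages are Kinyon's figures.

```python
# Encodes the Gemini cooling guidance quoted above; all names are hypothetical.
AIR_COOLED_MAX_TDP_W = 95  # parts hotter than this need the water-cooled "cold sink"

# Approximate peak draw per Gemini twin blade (two nodes), per Kinyon.
GEMINI_PEAK_W = {
    "air, 95 W parts": 580,
    "32 C water, 95 W parts": 720,
    "water, 130 W parts": 1000,  # "around a kilowatt", rounded
}


def cooling_for(tdp_w: int) -> str:
    """Return the heat sink a Gemini blade needs for a given Xeon E5 TDP."""
    return "air" if tdp_w <= AIR_COOLED_MAX_TDP_W else "water"


def celsius_to_fahrenheit(c: float) -> float:
    """Convert a temperature from Celsius to Fahrenheit."""
    return c * 9 / 5 + 32


if __name__ == "__main__":
    for tdp in (95, 130):
        print(f"{tdp} W Xeon E5 -> {cooling_for(tdp)} cooling")
    for config, watts in GEMINI_PEAK_W.items():
        # each twin blade holds two nodes, so halve for a rough per-node figure
        print(f"{config}: {watts} W per twin blade, ~{watts // 2} W per node")
    print(f"{celsius_to_fahrenheit(32):.1f} F")  # the 32 C inlet water
```

Halving the twin-blade figure is only a rough per-node estimate, since the two halves share power and networking hardware.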

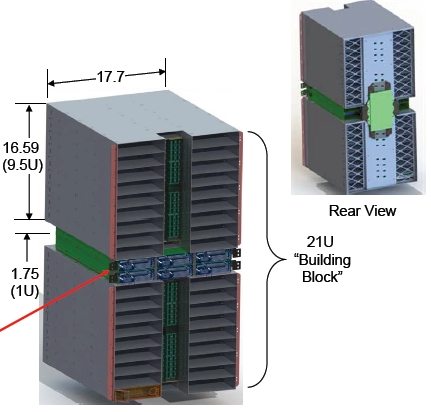

As part of the ICE X design, SGI is making a few changes to the chassis, which holds two columns of nine blades plus two switch modules in a 9.5U space.

First, the InfiniBand switch modules now sit in the center of the chassis, mounted vertically in the front, rather than on the outside left and right edges as they did in the prior Altix ICE machines. At 56Gb/sec speeds, Kinyon says, keeping the wires as short as possible gives the cleanest signal, and that means centralizing the switch.

SGI is offering two switch blades for the ICE X machines. The first is a single-ASIC FDR switch with 18 ports connected to the backplane and 18 QSFP connectors to the external network. This switch is intended for all-to-all and fat tree networks, but can also be used for small hypercube or enhanced hypercube setups. The top-end switch blade, designed for large systems that deploy hypercube or enhanced hypercube networks, has two 36-port SwitchX ASICs. Each ASIC has nine ports to the backplane, three to the adjacent switch ASIC, and 24 ports out to the external network.
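As a sanity check on those numbers, the Python sketch below confirms that each allocation uses exactly 36 ports (assuming the single-ASIC blade also uses a 36-port part, which the 18 plus 18 split implies). It also shows the addressing idea behind a plain binary hypercube: each switch links to the switches whose addresses differ from its own in exactly one bit. The blade labels and function names are hypothetical, and SGI's enhanced hypercube variant and actual cabling are not modeled.

```python
# Port budgets from the article; blade labels are hypothetical names.
SWITCHX_PORTS = 36  # ports per SwitchX ASIC

PORTS_PER_ASIC = {
    "single-ASIC blade": {"backplane": 18, "external QSFP": 18},
    "dual-ASIC blade": {"backplane": 9, "adjacent ASIC": 3, "external": 24},
}


def budget_ok(alloc: dict[str, int]) -> bool:
    """True if an ASIC's port allocation uses exactly its 36 ports."""
    return sum(alloc.values()) == SWITCHX_PORTS


def hypercube_neighbors(node: int, dims: int) -> list[int]:
    """Neighbors of `node` in a plain `dims`-dimensional binary hypercube.

    Flipping one address bit per dimension yields `dims` neighbors.
    """
    return [node ^ (1 << d) for d in range(dims)]


if __name__ == "__main__":
    for blade, alloc in PORTS_PER_ASIC.items():
        print(blade, sum(alloc.values()), budget_ok(alloc))
    print(hypercube_neighbors(5, 3))  # node 0b101 -> [4, 7, 1]
```

Each extra hypercube dimension costs one more port per switch, which is one reason the top-end blade devotes 24 ports per ASIC to the external network.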

Kinyon says that when the OpenFabrics Enterprise Distribution (OFED) remote direct memory access (RDMA) drivers for InfiniBand support 2D or 3D mesh and torus interconnects, SGI will support these topologies with its switch blades as well. He expects this to happen in about six months.
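The difference between a mesh and a torus comes down to whether links wrap around at the edges. The following Python sketch computes a node's neighbors either way; it is a generic illustration of those two topologies, not how OFED or SGI actually route traffic, and `grid_neighbors` is a hypothetical name.

```python
def grid_neighbors(
    coord: tuple[int, ...], dims: tuple[int, ...], torus: bool
) -> list[tuple[int, ...]]:
    """Neighbors of `coord` in a 2D/3D mesh or torus with side lengths `dims`.

    A mesh stops at its edges; a torus wraps each axis around, so edge
    nodes get the same number of links as interior nodes.
    """
    result: list[tuple[int, ...]] = []
    for axis, size in enumerate(dims):
        for step in (-1, 1):
            c = list(coord)
            c[axis] += step
            if torus:
                c[axis] %= size  # wrap around the edge
            elif not 0 <= c[axis] < size:
                continue  # mesh: no link past the edge
            t = tuple(c)
            if t != coord and t not in result:  # skip duplicates on tiny axes
                result.append(t)
    return result


if __name__ == "__main__":
    print(grid_neighbors((0, 0), (4, 4), torus=False))  # corner of a 2D mesh
    print(grid_neighbors((0, 0), (4, 4), torus=True))   # same node in a 2D torus
    print(len(grid_neighbors((1, 1, 1), (4, 4, 4), torus=True)))  # 3D torus
```

In the 2D example, the corner node has two links in the mesh but four in the torus; the wraparound links are what shorten the worst-case hop count.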

Another change with the new ICE X chassis is that the power modules have been pulled out of the chassis and can now be shared across multiple chassis, thus:

The ICE X blade server chassis

You can install three power modules per chassis and still have n+1 redundancy by having some of the six power units back each other up. This costs less and burns less juice.
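Why sharing helps: under N+1, each independent power pool needs its own spare, so pooling supply across chassis lets a single spare cover several of them. The minimal Python sketch below illustrates that general argument with made-up numbers (a 6,000 watt load per chassis and 2,500 watt supply units, neither of which comes from SGI); it is not SGI's actual power design.

```python
import math


def units_needed_n_plus_1(load_w: float, unit_capacity_w: float) -> int:
    """Smallest unit count that still covers the load after any one unit fails."""
    return math.ceil(load_w / unit_capacity_w) + 1


# Hypothetical figures for illustration only; SGI's ratings are not given here.
CHASSIS_LOAD_W = 6000
UNIT_CAPACITY_W = 2500

# Each chassis keeps its own spare.
per_chassis = 2 * units_needed_n_plus_1(CHASSIS_LOAD_W, UNIT_CAPACITY_W)
# One spare covers both chassis.
shared = units_needed_n_plus_1(2 * CHASSIS_LOAD_W, UNIT_CAPACITY_W)

print(per_chassis, shared)  # 8 6
```

With these assumed numbers, pooling saves two supply units; the exact saving depends on how the load divides into unit capacity.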

The ICE X chassis can be used in SGI's 24-inch-wide D series racks, which hold up to four chassis and 16 power modules, for up to 72 Dakota nodes (144 sockets) in a single rack. The Gemini blades go into the M series racks, which are 28 inches wide. The power distribution modules run along the outside left and right of the chassis (up to eight per chassis, or 32 per rack), and a fully loaded M series rack holds 144 nodes and 288 sockets. The Altix ICE 8400 machines could cram only 64 nodes, or 128 sockets, into a 30-inch-wide rack. Either way, D or M series, Dakota or Gemini blade, SGI is packing a lot more computing into the same space, which is what HPC customers, Hadoop customers, and cloud customers want. This would make a pretty impressive Oracle RAC database cluster, too.
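The per-rack figures follow directly from the chassis layout: 18 blades per chassis and four chassis per rack, with one two-socket node per Dakota blade and two per Gemini twin blade. The Python sketch below reproduces that arithmetic and, as a rough comparison of our own, divides sockets by rack width; it ignores power, cooling, and switch differences, and `rack_density` is a hypothetical name.

```python
# Rack density arithmetic using the figures quoted in the article.
BLADES_PER_CHASSIS = 2 * 9  # two columns of nine blades
CHASSIS_PER_RACK = 4


def rack_density(nodes_per_blade: int, sockets_per_node: int = 2) -> tuple[int, int]:
    """Return (nodes, sockets) for a fully loaded ICE X rack."""
    nodes = BLADES_PER_CHASSIS * CHASSIS_PER_RACK * nodes_per_blade
    return nodes, nodes * sockets_per_node


racks = {
    # name: (nodes, sockets, rack width in inches)
    "D series / Dakota": (*rack_density(nodes_per_blade=1), 24),
    "M series / Gemini": (*rack_density(nodes_per_blade=2), 28),
    "Altix ICE 8400": (64, 128, 30),
}

for name, (nodes, sockets, width) in racks.items():
    print(f"{name}: {nodes} nodes, {sockets} sockets, "
          f"{sockets / width:.1f} sockets per inch of rack width")
```

By this crude measure, the Gemini rack packs a bit more than twice the sockets per inch of the Altix ICE 8400 rack.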

SGI is taking orders for the ICE X machines now and will be doing its manufacturing release in December, with first shipments expected in January. The Dakota blades and D series racks will come out first, with the Gemini blades and M series racks coming in March. Pricing information was not available at press time. ®