
IBM uncloaks 20 petaflops BlueGene/Q super

Lilliputian cores give Brobdingnagian oomph

Oomph and gunk

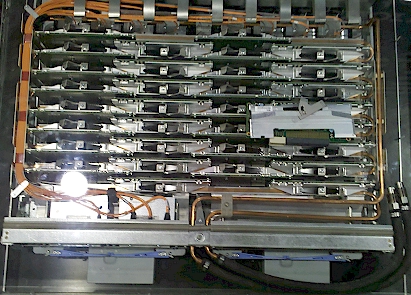

Here's a photo of the BlueGene/Q compute node (pardon my photography, but the lighting conditions were awful on the show floor — and I am also not great with a lens):

IBM's BlueGene/Q 17-core compute node, blue gunk included

The chip in the middle of the compute node is the BGQ processor, which has the Power cores as well as the memory controller and various interconnect features on it. The compute node is not fully populated with its DDR3 main memory, which is why some of it has blue gunk on it: the gunk covers the sockets where memory will be plugged in.

The interesting thing about the BlueGene/Q design is that it will be water cooled, with a spring-loaded aluminum jacket wrapping around the front and back of the compute node, which slides into its midplane socket on the compute drawer right between two copper pipes full of water.

When you press the BGQ compute node into its slot, there is a clip you push down, and that compresses the aluminum against the BGQ processor and memory chips on the node and against two adjacent, squared-off copper pipes filled with water. There is no special thermal contact material to keep the chips in contact with the aluminum or the aluminum in contact with the copper tubing. The spring provides 100 pounds of force and everything stays in contact so the heat can be drawn off the processor and memory and whisked away by water coursing through the pipes, thanks to thermodynamics.

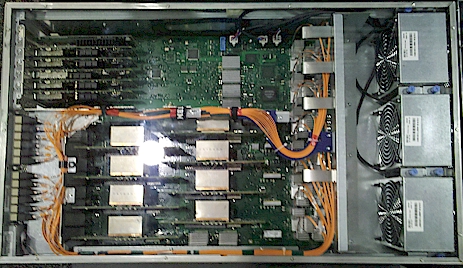

The BlueGene/Q compute drawer

Smith said that the system design would allow BlueGene/Q to be cooled with water at 60 to 65 degrees Fahrenheit, which is fairly warm for a water-cooled system but increasingly normal as system makers realize they are overcooling both data centers and components because "that's the way we have always done things." There are no fans in the compute drawer, just two power supplies and pipes for water inlet and outflow.

The compute drawer has an interconnect that is fed by fiber optic links from each compute node (the orange wires in the photo), and this interconnect snaps into the midplane to link it to the other compute drawers and compute nodes in the BlueGene/Q cluster. The water comes in and cools the optical interconnection chips first, then swishes through the compute nodes.

The BlueGene/Q compute drawer has 32 compute modules (each a server node in the cluster), and each node will have 16GB of DDR3 main memory (1GB per core). A compute drawer has 512 cores, 2,048 threads, and 512GB of memory. A BlueGene/Q rack holds 32 of these compute drawers, which are half-depth, which means 16 in the front and 16 in the back. That's a stunning 1,024 server nodes in a rack (16,384 cores and the same number of gigabytes of memory). Across the 96 racks of the Sequoia machine at Lawrence Livermore, that works out to 1.57 million cores dedicated to processing calculations, with another 98,304 cores for running the Linux kernel Big Blue uses for the BlueGene machines.
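The core counts above are easy to check with a little arithmetic. Here is a minimal sketch in Python that uses only the figures quoted in this article. The constant and function names are made up for illustration, and the assumption that the 98,304 extra cores amount to one per node (the seventeenth core in the photo caption) is inferred from the numbers rather than confirmed by IBM.

```python
# Figures quoted in the article; all names here are illustrative only.
CORES_PER_NODE = 16       # compute cores per node
THREADS_PER_CORE = 4      # implied by 2,048 threads across 512 cores
GB_PER_NODE = 16          # DDR3 main memory per node
NODES_PER_DRAWER = 32
DRAWERS_PER_RACK = 32     # 16 in the front, 16 in the back
SEQUOIA_RACKS = 96
EXTRA_CORES_PER_NODE = 1  # assumption: one non-compute core per node


def drawer_totals():
    """Cores, threads, and memory in one compute drawer."""
    cores = CORES_PER_NODE * NODES_PER_DRAWER
    return {
        "cores": cores,
        "threads": cores * THREADS_PER_CORE,
        "memory_gb": GB_PER_NODE * NODES_PER_DRAWER,
    }


def rack_totals():
    """Nodes, cores, and memory in one rack of 32 drawers."""
    nodes = NODES_PER_DRAWER * DRAWERS_PER_RACK
    return {
        "nodes": nodes,
        "cores": nodes * CORES_PER_NODE,
        "memory_gb": nodes * GB_PER_NODE,
    }


def system_totals(racks=SEQUOIA_RACKS):
    """Compute cores and extra cores across a multi-rack system."""
    nodes = rack_totals()["nodes"] * racks
    return {
        "compute_cores": nodes * CORES_PER_NODE,
        "extra_cores": nodes * EXTRA_CORES_PER_NODE,
    }


if __name__ == "__main__":
    print(drawer_totals())
    print(rack_totals())
    print(system_totals())
```

Under those assumptions, 96 racks give 1,572,864 compute cores and 98,304 extra cores, which matches the 1.57 million and 98,304 figures in the text.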

Another interesting fact: IBM is using a 5D mesh/torus interconnect to lash together the BlueGene/Q nodes, which quite possibly could mean it is moving backwards through time as well as across universes in the multiverse.

Actually, Smith said the way to think about the 5D interconnect was that you create a hypercube linkage between nodes, and then you link the vertices of the hypercubes together to make the 5D torus mesh. I know you had no problem at all visualizing that, but I'm not entirely sure that this is an accurate description of a 5D mesh/torus, so let's move on.
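For readers who do want to visualize it, the textbook definition of a torus is simpler than the hypercube story: every node has a coordinate in each dimension, and it links to the nodes one step up and one step down in each dimension, wrapping around at the edges. The Python sketch below shows that generic definition only. The dimension sizes are hypothetical and picked for illustration; nothing here describes how IBM actually lays out or wires BlueGene/Q.

```python
def torus_neighbors(coord, dims):
    """Return the direct neighbors of `coord` in a torus with sizes `dims`.

    Each axis contributes a neighbor one step down and one step up,
    with wraparound at the edges (that is what makes it a torus).
    """
    neighbors = []
    for axis, size in enumerate(dims):
        for step in (-1, 1):
            candidate = list(coord)
            candidate[axis] = (coord[axis] + step) % size
            candidate = tuple(candidate)
            # In an axis of size 1 or 2 the two steps can coincide,
            # so skip self-links and duplicates.
            if candidate != coord and candidate not in neighbors:
                neighbors.append(candidate)
    return neighbors


# Hypothetical dimension sizes, for illustration only.
DIMS = (4, 4, 4, 4, 4)

if __name__ == "__main__":
    origin = (0, 0, 0, 0, 0)
    for neighbor in torus_neighbors(origin, DIMS):
        print(neighbor)
```

With five dimensions of size three or more, every node ends up with ten direct neighbors, two per dimension, and the wraparound means there are no edge nodes with fewer links.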

With the BlueGene/Q design, IBM is breaking apart the I/O nodes from the compute nodes for two reasons. First, by breaking them up, they can scale independently of each other and users who need less I/O can add more compute to a given rack and therefore take up less space to get a given amount of work done. Also, the I/O processors, which are based on the same BGQ modules, are not so densely packed that you need to cool them with water.

The BlueGene/Q I/O node

The BlueGene/Q I/O drawer has eight nodes and eight slots for adding in 10 Gigabit Ethernet or InfiniBand PCI-Express peripheral cards (which you can see on the upper left).

The Sequoia super that Lawrence Livermore will be getting in 2012 — IBM said it'd be in late 2011 back when the deal was announced in February 2009, so there's been some apparent slippage — will consist of 96 racks and will be rated at 20.13 petaflops. Argonne National Laboratory said back in August that it wanted a BlueGene/Q box, too, and it will have 48 racks of compute drawers for a total of 10 petaflops of floating-point power.
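Those system ratings follow directly from the rack count, if you take the per-rack peak of 209.7 teraflops that this article quotes for the Q generation. A quick sketch, with hypothetical names and assuming peak performance simply scales linearly with racks:

```python
# Peak rating of one BlueGene/Q rack, as quoted in this article.
RACK_PEAK_TFLOPS = 209.7


def system_peak_pflops(racks, rack_peak_tflops=RACK_PEAK_TFLOPS):
    """Peak petaflops for a system, assuming linear scaling by rack."""
    return racks * rack_peak_tflops / 1000.0


if __name__ == "__main__":
    for name, racks in (("Sequoia", 96), ("Argonne", 48)):
        peak = system_peak_pflops(racks)
        print(f"{name}: {racks} racks -> {peak:.2f} petaflops peak")
```

That gives 20.13 petaflops for the 96-rack Sequoia and just under 10.07 petaflops for a 48-rack machine, which is in line with the round 10 petaflops cited for Argonne.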

For the November 2010 ranking of the Top 500 supercomputers that was announced this week at SC10 in New Orleans, IBM had slapped together a half-rack of BlueGene/Q iron (well, more literally aluminum and copper, as you saw), and that machine was able to hit 65.3 teraflops of performance on the Linpack test against a peak theoretical performance of 104.9 teraflops. That works out to a 62.3 per cent efficiency. That 1/192nd of the Sequoia BlueGene/Q machine ranked 114 on the Top 500 list, by the way.
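The efficiency figure is just sustained Linpack divided by theoretical peak, and the 1/192nd is half a rack out of 96. A small Python sketch of both calculations, using the rounded figures from this article (the function names are illustrative):

```python
from fractions import Fraction


def linpack_efficiency(sustained_tflops, peak_tflops):
    """Sustained Linpack as a percentage of theoretical peak."""
    return 100.0 * sustained_tflops / peak_tflops


def share_of_system(racks_used, total_racks):
    """Exact fraction of a system that a partial build represents."""
    return Fraction(racks_used) / total_racks


if __name__ == "__main__":
    efficiency = linpack_efficiency(65.3, 104.9)
    print(f"Prototype efficiency: {efficiency:.2f} per cent")
    print(f"Share of Sequoia: {share_of_system(Fraction(1, 2), 96)}")
```

With the rounded inputs this comes out at roughly 62.25 per cent, a hair under the 62.3 per cent quoted above. The small gap is presumably down to the Top 500 list carrying more digits than the rounded teraflops figures used here.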

El Reg was not able to find out if the BlueGene/Q interconnect was goosed in the machines in terms of bandwidth and latency, but presumably there has been lots of work here to balance the extra processor performance. A rack is now rated at somewhere around a peak 209.7 teraflops in the Q generation, compared to a peak of 13.9 teraflops in the P generation. That's a huge leap in raw performance, roughly a factor of 15, and presumably one that requires faster interconnects to be more efficient.

If IBM did not substantially change the interconnect, that might explain why the BlueGene/Q prototype trails its predecessors: the BlueGene/L at Lawrence Livermore (ranked number 12 on the list at 478.2 teraflops) has an 80.2 per cent efficiency comparing sustained Linpack versus peak theoretical performance, and the BlueGene/P at Argonne (ranked number 13 at 458.6 teraflops) has an efficiency of 82.3 per cent.

The Jugene 825.5 teraflops BlueGene/P super at Forschungszentrum Juelich in Germany is also delivering an 82.3 per cent efficiency on the Linpack test. By comparison, BlueGene/Q is not terribly efficient. But it is also early days in the design. It is still, after all, a prototype, just like BlueGene/L was in 2005 and BlueGene/P was in 2007. ®