
InfiniBand and 10GbE break data centre gridlock

Bandwidth bonanza in the data centre

Alacritech has revved its TOE (TCP/IP offload engine) technology to produce a 10GbE NIC (network interface card) that supports either stateful Windows Server 2003/Server 2008 Chimney TCP/IP processing, where Windows is the TCP/IP initiator, or stateless OpenSolaris, Linux, Mac OS X and non-Chimney Windows, where the card carries out that function. It's called an SNA (Scalable Network Accelerator) and we can think of it as a TONIC, a TOE and NIC combined. Alacritech's marketing director, Doug Rainbolt, says the pricing has moved closer to that of a 10GbE NIC to encourage offload take-up. He also says the card has been built to work well with VMware and Hyper-V.

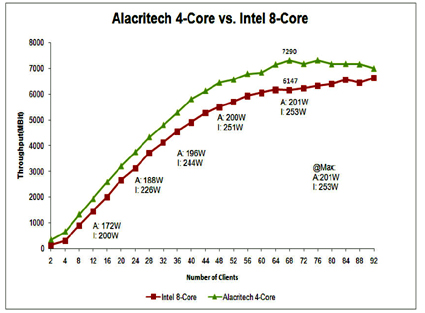

Look, this is no idle thing. He has a Netbench chart (right) showing a four-core server running the SNA outperforming an equivalent eight-core server with no SNA and no TOE. Taken on its merits, that means you could add the SNA to an eight-core server and recover four cores previously maxed out doing TCP/IP processing. You could double the number of virtual machines in that server, or buy a four-core server and an SNA to start with instead of paying the greater sum needed for the eight-core.
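
The arithmetic behind that doubling can be sketched in a few lines of Python. This is only an illustration: the function name is hypothetical, and it assumes that virtual machine capacity scales linearly with the cores left over once TCP/IP processing is accounted for, which the Netbench chart does not itself establish.

```python
def vm_capacity_gain(total_cores: int, tcpip_cores: int) -> float:
    """Ratio of cores available to VMs after offload versus before.

    Assumption (not from the article): VM capacity scales linearly
    with the cores not tied up in TCP/IP processing.
    """
    cores_before = total_cores - tcpip_cores
    if cores_before <= 0:
        raise ValueError("no cores left for VMs before offload")
    # With TCP/IP offloaded to the card, every core is available again.
    return total_cores / cores_before


# The article's case: eight cores, four of them maxed out on TCP/IP.
gain = vm_capacity_gain(total_cores=8, tcpip_cores=4)
print(f"VM capacity gain: {gain:.1f}x")
```

On those figures the gain is a factor of two, which matches the doubling claimed for the eight-core server.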

The product will cost $1,299 and be available in Q1 next year.

To InfiniBand and beyond

QLogic has announced 40Gbit/s quad data rate (QDR) InfiniBand switches and an HCA (Host Channel Adapter) which connects the cable from the switch to a server. The company based its 20Gbit/s InfiniBand products on Mellanox chipsets but has now developed its own ASICs for the HCA and 12000 series switch.

The switch can be configured either for performance, meaning 72 to 648 ports with no over-subscription, or for port count, meaning 96 to 864 ports with the possibility of over-subscription reducing performance.
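
Comparing the two sets of port counts gives a rough feel for how much over-subscription is on the table. The sketch below simply divides the port-count figures by the performance figures. Reading the result as a worst-case over-subscription ratio is an assumption: it holds only if the internal fabric capacity is the same in both configurations, which QLogic has not stated here.

```python
from fractions import Fraction

# Port ranges quoted for the two configurations (minimum, maximum).
PERFORMANCE_PORTS = (72, 648)
PORT_COUNT_PORTS = (96, 864)


def port_ratio(port_count_mode: int, performance_mode: int) -> Fraction:
    """Ports offered in port-count mode per port offered in performance mode."""
    return Fraction(port_count_mode, performance_mode)


ratios = [
    port_ratio(dense, fast)
    for dense, fast in zip(PORT_COUNT_PORTS, PERFORMANCE_PORTS)
]
for label, ratio in zip(("smallest", "largest"), ratios):
    print(f"{label} configuration: {ratio.numerator}:{ratio.denominator}")
```

Both ends of the range work out to the same 4:3 ratio, so under that assumption each port would see at most three quarters of its non-over-subscribed bandwidth when every port is busy.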

Both the HCA and the switches will be available by the end of the year but no pricing information is available. QLogic's EMEA marketing head, Henrik Hansen, says that the switch can sub-divide its overall InfiniBand fabric into separate virtual fabrics and deliver different qualities of service to these V-fabrics, which is equivalent to what VLANs (Ethernet) and Cisco VSANs (Fibre Channel) can do.

He says the switches have deterministic latency too: "If you run the switches up to 90 per cent full speed on all ports we maintain the latency. Above that it falls off." He reckons competing switches have latency fall-offs starting when they go over 70 per cent load.

QLogic is punting these products into the HPC market rather than pitching them as data centre network consolidation platforms, a role that Mellanox does include in its InfiniBand marketing pitch.

Balancing processing and network I/O

Without much faster network pipes the greater efficiencies of virtualised and bladed servers can't be fully realised. It is apparent from the Alacritech Netbench chart that coupling virtualised and multi-core servers with balanced network bandwidth (and offloaded network processing) will send their utilisation up and enable server consolidation to deliver data centre cost-savings. This is something that's going to be essential in the quarters ahead to persuade cash-hoarding customers to buy IT products.

The autobahns and interstates cost a lot of money but they helped generate much, much more wealth than they cost to build. So too with faster data centre network pipes. Spend money to free up bottlenecked and choked servers and you save a whole lot more by consolidating even more stand-alone servers into virtual machines, freeing up floor space, lowering energy costs and so forth into the bargain. Faster network pipes solve data centre gridlock. ®